With the growing need for digital innovation and creative use of technology in science, technology, engineering, and mathematics (STEM) professions, elementary teachers are being asked to infuse computational thinking (CT) concepts across subject areas (Barr & Stephenson, 2011; Wiebe et al., 2020; Wing, 2006).

A recent review of published research revealed that 52 countries have integrated CT in their primary school curricula (Tang et al., 2020). This increasing emphasis on CT as a critical skill in PK-12 STEM teaching and learning (Committee on STEM Education, 2018) underscores the pressing need to develop teachers’ readiness to integrate CT in their classrooms. However, there is limited research on the effective preparation of teachers to conceptualize and facilitate CT, especially with regard to the use of technology for problem solving (Li et al., 2020; Mason & Rich, 2019; Yadav et al., 2017). There is also a need for more knowledge on how to assess a teacher’s emergent understanding of CT concepts (Angeli & Giannakos, 2020; Poulakis & Politis, 2021; Tang et al., 2020).

Recent research on assessment in CT teacher education is largely based on self-reported measures or traditional tests (Kong et al., 2020; Mouza et al., 2018) with an emphasis on noncognitive traits such as self-efficacy (Adler & Beck, 2020; Boulden et al., 2021; Rich et al., 2021). Tang et al. (2020) also reported on the lack of focus in CT assessments on the dispositions that support and enhance CT skills. These dispositions include confidence in dealing with complexity, persistence in working with difficult problems, and tolerance for ambiguity (Computer Science Teachers Association [CSTA] & International Society for Technology in Education [ISTE], 2011).

The increasing sophistication of interactive instructional technology presents a unique opportunity to reimagine assessment of CT in PK-12 teacher education. Building a reflective, process-oriented approach to assessment can provide evidence of growth in both the construction of CT content knowledge and the development of CT dispositions.

This paper presents an application of the Authentic Integrated Online Assessment Model (Galanti et al., 2021b), using editable, real-time educational tools to design portfolio-based summative assessments in graduate CT courses for elementary teachers. This model is based on extant STEM TPACK (technology, pedagogy, and content knowledge) frameworks (Mishra & Koehler, 2006; Niess, 2005) and was originally developed in the context of an online PK-12 mathematics teacher education course.

By leveraging technology to pivot away from static summative assessments of STEM knowledge, instructors can emphasize iterative evaluation of dynamic representations of STEM reasoning. The following research question guided the study: How does authentic integrated assessment support CT learning in an online graduate course for practicing elementary teachers?

Theoretical Framework

With the dramatic shift in instructional delivery models in both PK-12 education and postsecondary education during the COVID-19 pandemic, educators were faced with the challenge of developing summative assessments for online learning environments that recognized learning as both a social and a cognitive process (Hodges et al., 2020). They needed to leverage the dynamic nature of the digital environment in new and creative ways. Assessment could be framed as a continuous process with a component of self-regulation (Rapanta et al., 2020).

As university STEM teacher educators with previous experience in synchronous online teaching, we saw a unique opportunity to extend the digital formative assessment strategies we had been using prior to the COVID-19 pandemic in our mathematics courses for practicing teachers. We redesigned our traditional summative assessments to reduce outside-of-class work expectations and to model the use of digital tools to iteratively represent and revise mathematical understandings in an online environment.

The summative assessment redesign became the basis for our theorization of the Authentic Integrated Online Assessment Model (Galanti et al., 2021b) for deepening the conceptual understanding of teachers as online mathematics learners. The model builds iterative representations and revisions of mathematical thinking as a summative assessment of learning. We enacted this model in our online mathematics courses for K-12 teachers using collaborative real-time presentation platforms (e.g., Google Slides) for in-class problem solving and digital interactive notebooks (DINbs) for outside-of-class reflection and revision.

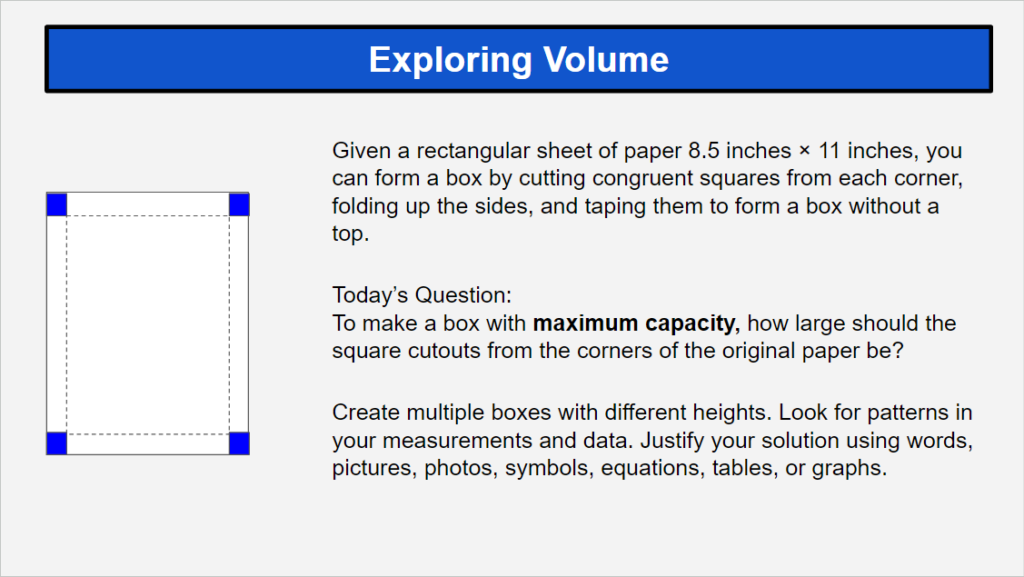

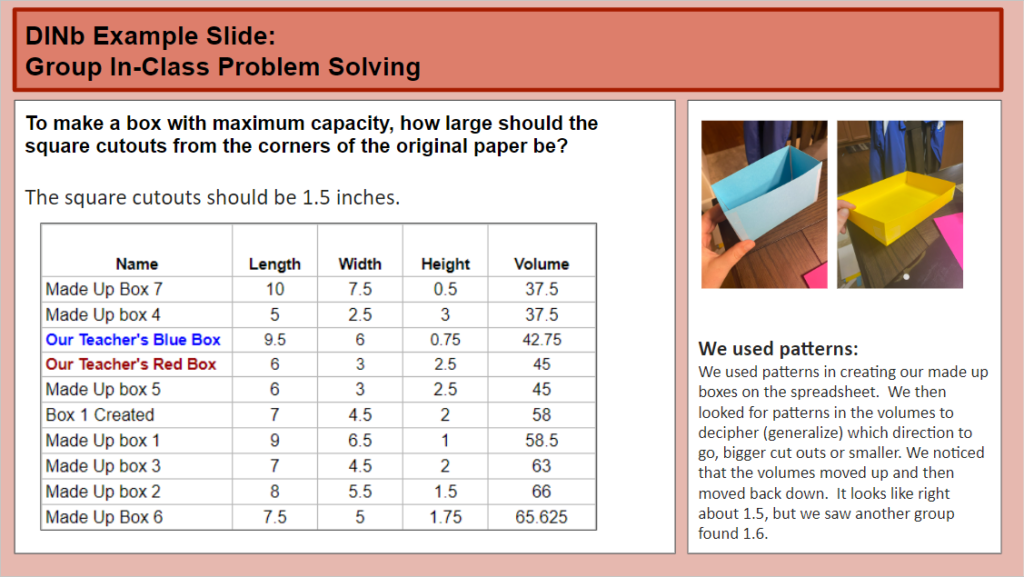

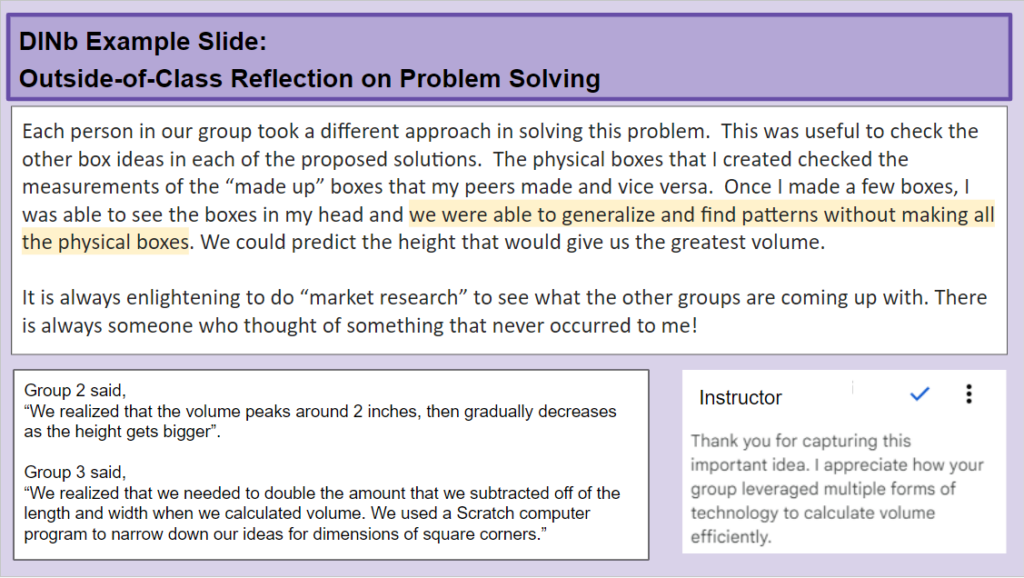

Teachers in our courses were provided with Google Slides templates with task prompts to record their in-class collaborative STEM problem solving. Each teacher transferred their in-class group work to their own DINb in a shareable online file storage drive for the course, where they could elaborate on their solutions. (See Figures 1-3 for an example set of DINb slides with teacher work from a geometry and measurement task.) The interactive nature of Google Slides and its version history allowed us to see how teachers’ mathematical thinking evolved. The comment feature provided an efficient structure for connecting feedback to specific entries on the slides. Teachers could also reflect upon the mathematical ideas of other groups. Use of the DINb as an assessment structure was designed to make teacher thinking visible, to provide opportunities for formative feedback, and to make evaluation of ideas more equitable (as recommended in Baker et al., 2021).

Figure 1

Geometry and Measurement Task – Prompt

Figure 2

Geometry and Measurement Task – In-Class Collaborative Problem Solving

Figure 3

Geometry and Measurement Task – Outside of Class Reflection

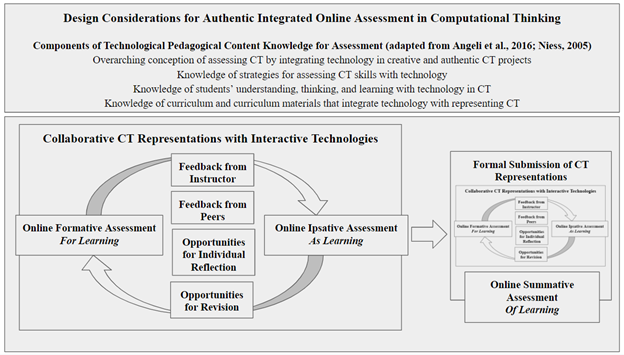

Because CT has been conceptualized as a problem-solving approach that uses computer science in mathematical contexts (Lee et al., 2020; Weintrop et al., 2016), we further theorized that we could apply the Authentic Integrated Online Assessment Model in an online CT course for elementary teachers (see Figure 4). This model offers a response to the gap in the literature on assessment of teachers’ knowledge of CT and readiness to integrate CT in classroom instruction. The following paragraphs describe the theoretical foundation of the model and the relevance of this model for assessing teachers’ CT knowledge and dispositions.

Figure 4

Model for Authentic Integrated Online Assessment in Computational Thinking

Authentic Integrated Online Assessment as a Synthesis of Assessment Functions

Drawing on prior conceptualizations of formative, summative, and ipsative assessment functions, we describe three key design elements in our Authentic Integrated Online Assessment Model. First, we follow Wiggins’ (2006) definition of authentic assessment as the active implementation of multiple opportunities for formative feedback. Students engage in problem solving in the same ways that practitioners within the context of a STEM discipline would approach their work. A portfolio of problem solving becomes the summative assessment. Second, we take up Hattie’s (2012) conceptualization of formative feedback as support and scaffolding that students can then “act upon in terms of where they are going, how they are going there, and where they might go next” (p. 127). Third, we integrate self-referential, or ipsative, assessment (Hughes et al., 2014) as a bridge between formative assessment of student progress and summative assessment as a final judgment of student learning. Ipsative assessment, or “a self-awareness of progress toward a learning goal” (p. 31), empowers online learners to build self-awareness of their ongoing process of learning by comparing earlier work and current work (Hughes, 2011).

In the Authentic Integrated Online Assessment model (illustrated in Figure 4), iterative cycles of assessment for learning (formative) and assessment as learning (ipsative) combine collaborative sensemaking and individual reflection and revision. These cycles are captured in a DINb as a summative assessment of learning. The DINb documents the CT learning process across multiple class sessions. With a shift in focus away from traditional summative assessments of knowledge, the model redefines what it means to be successful as a CT learner and by extension a CT teacher.

The digital learning environment is especially supportive of the integration of these three assessment functions (summative, formative and ipsative) as an ongoing process because of interactive technology, real-time feedback, and version tracking. Our prior research on the use of DINbs as authentic integrated assessments in online mathematics courses suggests that teachers can deepen their technological pedagogical content knowledge (also known as technology, pedagogy, and content knowledge, or TPACK) from their positions as content learners (Galanti et al., 2021a). Teachers’ experiences with DINbs as portfolios of their iterative thinking revealed the importance of feedback, revision, and reflection. Cycles of formative and ipsative assessments throughout the course emphasized that mathematics learning is an ongoing process. Because of the complementary relationship between CT and mathematical thinking (Pérez, 2018), we theorized that the learning affordances of DINbs as assessments in our online mathematics courses could also be realized in an online graduate CT course.

Authentic Integrated Online Assessment for CT Teaching and Learning

While the research community has not adopted a singular definition of CT in PK-12 education, there is a growing emphasis on CT as the skills and practices of computer science that help us to explain and interpret the world (Denning & Tedre, 2019). Shute and colleagues (2017) define CT as a “conceptual foundation required to solve problems effectively and efficiently (i.e., algorithmically, with or without the assistance of computers) with solutions that are reusable in different contexts” (p. 115). Their six facets of CT (decomposition, abstraction, algorithms, debugging, iteration, and generalization) provided a conceptual foundation for curriculum design, collaborative task facilitation, and reflection in our CT course for elementary teachers in this study.

The Authentic Integrated Online Assessment Model is especially appropriate for eliciting evidence of emergent understanding of these six facets of CT because it creates digital representations of collaborative problem-solving tasks with an emphasis on multiple instances of feedback and reflection. Building a portfolio of CT artifacts as a summative assessment parallels the constructionist origins of CT (Papert, 1980). The development of a student’s problem-solving proficiency is assessed through the creation of computational artifacts (Lodi & Martini, 2021). Papert (1980) emphasized the tangibility and shareability of these artifacts as “objects-to-think-with” (p. 23).

Students engage in complex problem solving and think about their learning through computer programming. Knowledge is constructed when students produce these computational artifacts. With the DINb as an enactment of our assessment model, teachers construct knowledge for CT teaching and learning.

Enacting the Model Using DINbs

The use of interactive, real-time presentation software (e.g., Google Slides) for the DINb makes it possible for teachers to create evidence of CT. As teachers record and reflect on their problem solving, they are documenting both the process and products of CT inside and outside of structured class time. The DINb documents a continual learning process across multiple class sessions. Consistent with the Authentic Integrated Online Assessment model, the DINb synthesizes the formative, summative, and ipsative assessment functions in an authentic way. It transfers in-class collaborative problem solving to an individual document and fosters outside-of-class reflection and revision.

As instructors provide ipsative feedback using Google Slides comment features within the editable DINb slides, they encourage teachers to engage in productive struggle as part of a CT sensemaking journey. This concept of ipsative assessment is especially transformative in the context of CT teacher education, as the emphasis shifts away from completion and correctness of computer programs for teachers who may be anxious about learning and teaching CT (Bell et al., 2016; Reding & Dorn, 2017). The summative submission of the DINb at selected points during the CT course documents individual and collaborative learning experiences and formative/ipsative cycles of feedback and revision. Teachers can take ownership of their CT progress within their DINbs, and the cyclical assessment focus can counter a teacher’s fears about their lack of programming experiences (Lytle et al., 2019).

We further assert that DINbs as a form of authentic online assessment are epistemologically aligned with Papert’s (1980) conceptualization of computer programs as objects of knowledge construction. As teachers have the opportunity to express, test, and revise multiple representations of CT in their DINbs, the digital slides themselves become computational artifacts. As their instructors facilitate cycles of feedback and reflection within DINbs, teachers are using technology both to capture and refine their CT understandings. Because DINbs are artifacts of CT sensemaking, they also support assessment of the CSTA and ISTE (2011) dispositions of confidence in dealing with complexity, persistence in working with difficult problems, and tolerance for ambiguity.

Methods

This study was based on the principles of qualitative content analysis (Bryman, 2008) used by Poulakis and Politis (2021) in their study of approaches to CT assessment. The research aimed to describe how authentic online assessment as enacted in the DINb supports teachers’ CT learning. Teachers’ textual responses to survey questions about their experiences with the DINb were the primary unit of content analysis, and the emergent categories became the basis for a selection of illustrative evidence of CT learning from teachers’ DINbs. Analysis of this evidence was used to narrow the broad categories of learning experiences from teachers’ survey responses into a set of themes revealing how the DINb supported their learning.

Participants and Context

This research was conducted in a synchronous online 16-week graduate CT course at a university in the southeastern United States. The first author was the instructor, and students in the course were teachers. All teachers (N = 15) identified as female and were enrolled in the university graduate certificate program in elementary STEM education. Nine of the teachers worked in public elementary schools in a large urban district (kindergarten, n = 1; Grade 3, n = 5; and Grade 5, n = 3). Four of the teachers worked in K-8 public charter schools as a kindergarten teacher, Grade 3 teacher, administrator, and media specialist. The remaining participants were a former middle school teacher and a prospective elementary teacher. Pseudonyms were used in reporting the results to maintain the confidentiality of participant data.

The participants engaged with a variety of programming environments during the course, with an emphasis on the six facets of CT as defined by Shute et al. (2017). Drawing on prior research on CT pedagogies for elementary teachers (Kotsopoulos et al., 2017; Umutlu, 2021), the instructor designed weekly small group problem-solving activities for breakout rooms in a Zoom videoconference application, with an emphasis on algorithmic thinking, decomposition, abstraction, tinkering, and remixing code. The teachers made their thinking visible in the form of screenshots, verbal explanations, and hyperlinks to code in weekly class sets of Google Slides.

While we had experience with integrating real-time collaborative problem-solving and video conferencing platforms in previous online STEM courses (Baker & Hjalmarson, 2019), our addition of DINbs to the CT course created new opportunities for integrating formative, ipsative, and summative assessment of teachers’ content and pedagogical content knowledge.

Because of the simultaneous editing capability of Google Slides, evidence of each group’s CT was available to all teachers to reference during class and after class. Each teacher copied her small group collaborative task slides into her individual DINb at the end of class. The teachers were encouraged to independently reflect on and revise their small group task representations outside of class. The instructor provided weekly formative feedback on each DINb using comments in Google Slides. The teachers responded to feedback questions from the instructor prior to submission of the DINb as a summative assessment of their CT learning. The DINbs were submitted as summative assessments at two points during the course.

Data Collection and Analysis

We used a general inductive approach (Liu, 2016; Thomas, 2006) to qualitative content analysis, familiarizing ourselves with the raw data from course artifacts and allowing themes to emerge. A first cycle of thematic coding (Saldaña, 2021) was used to analyze midcourse and end-of-course responses to survey questions about the use of a DINb from both a learning perspective and a teaching perspective. These data sources were organized in a matrix (Merriam, 2009) to identify themes and relationships between them.

The first-cycle thematic codes (e.g., time to complete unfinished work, not giving up) were grouped into categories (e.g., productive struggle) of self-reported learning. Additional course artifacts (e.g., “Dear Instructor” letters, DINb slides, discussion posts about questioning and assessment, descriptions of evolving CT conceptualizations) were analyzed sequentially to capture changes in teachers’ conceptions of CT learning and teaching over time and to triangulate the first-cycle thematic coding. As we analyzed these course artifacts, we completed a second cycle of coding of the DINb slides to condense the first-cycle codes into themes of how teachers were learning with DINbs as a synthesis of formative, ipsative, and summative assessment functions.

Results

The first cycle of thematic coding of teachers’ responses to the midcourse and end-of-course surveys about their experiences as learners and as teachers resulted in five organizing categories (i.e., Conceptual Understanding, Extending Learning Beyond Class, Productive Struggle, Tinkering as Learning, and Process Over Product). The teachers appreciated being able to capture their synchronous in-class collaborations in DINb Google Slides and to continue building their conceptual understandings outside of class with instructor formative feedback.

The DINb structure allowed teachers to share their sensemaking about how they were learning about CT concepts of algorithms, abstraction, and evaluation. They described how the DINb normalized struggle as productive, because they could always revise their thinking in later weeks as they gained new insights. Teachers valued tinkering with technology as a pedagogical experience in CT (Kotsopoulos et al., 2017) because they could make mistakes as a part of their sensemaking. They could make their process of CT visible in Google Slides using a chain of comment exchanges with the instructor, and they could always revise the DINb as a product of their problem solving.

In the second cycle of coding, we analyzed teachers’ work in the DINbs to narrow these general categories of how teachers were learning CT and focus on how the structure of the Authentic Integrated Online Assessment Model supported this learning. Teachers were able to (a) represent and extend their CT knowledge, (b) share their unfinished thinking, and (c) converse with their instructor in the DINbs. These three themes are consistent with teachers’ end-of-course reflections on DINbs as authentic assessments that captured their growth through collaboration, communication, and ipsative reflection in the context of CT.

The following sections illustrate each of these themes with evidence of two teachers’ learning in three CT tasks. Excerpts from Laura’s (Grade 3 teacher) and Jackie’s (Grade 5 teacher) DINbs are presented as purposefully selected sequences of cycles of formative and ipsative feedback from small group and individual slides. Although all participating teachers’ DINbs contained evidence of the three themes of how teachers were learning CT, these excerpts were selected as clear and cohesive examples of alignment between survey responses and the text of the DINbs. Evidence of CT learning includes Google Slides entries, comment exchanges with the instructor, and relevant language from end-of-course survey reflections.

Representing and Extending CT Content and Pedagogical Content Knowledge

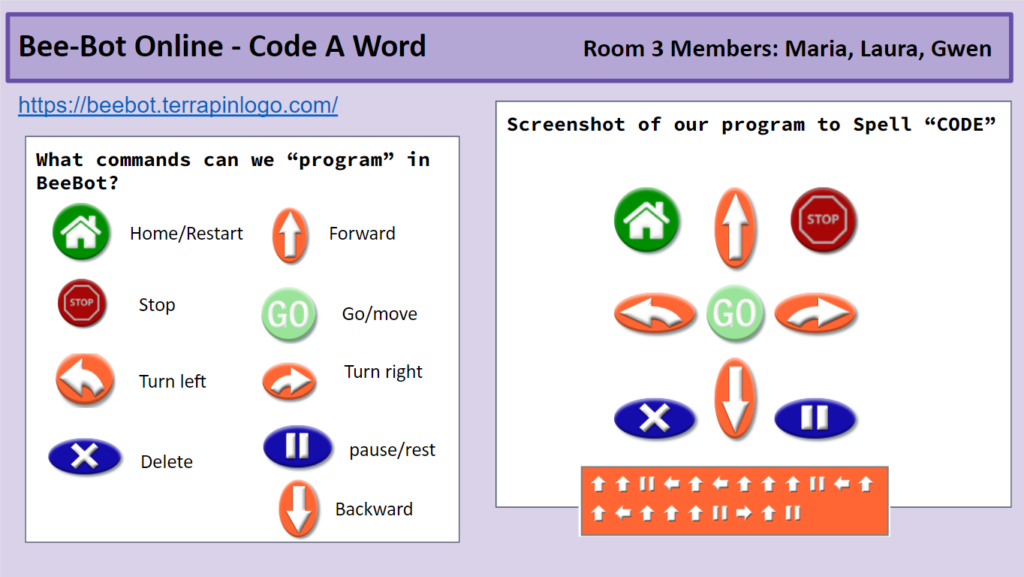

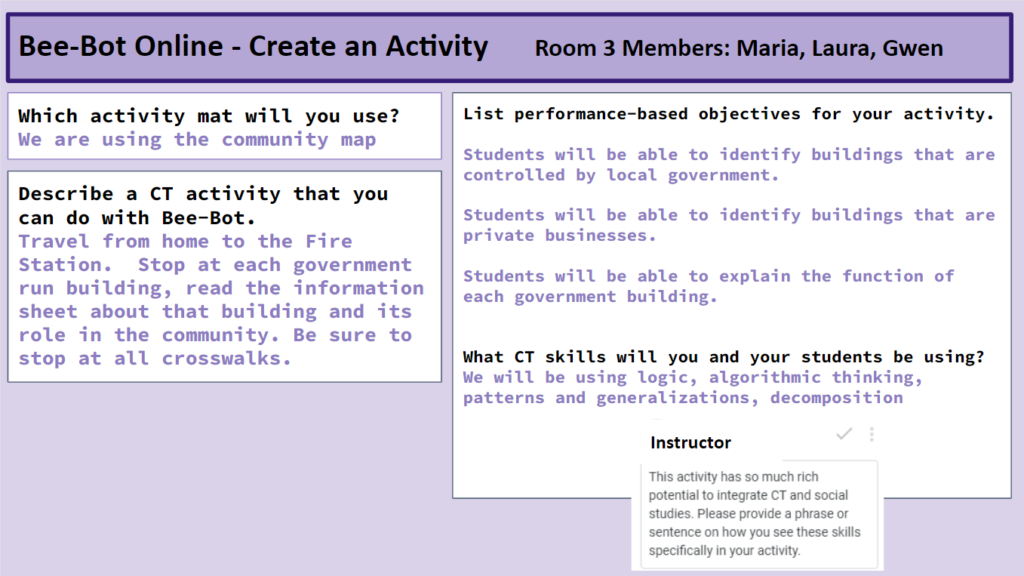

The teachers valued the DINb as a digital tool by which they could represent their in-class CT in multiple ways (links to code, screenshots, explanation of problem-solving strategies, and noticings of others’ work). Within a “Code a Word” task on physical computing during Week 4 of the course, the teachers tinkered with a Bee-Bot® online emulator (https://beebot.terrapinlogo.com/) and described its functionality. They also designed a classroom activity using Bee-Bot® to connect to elementary-level curriculum. Three teachers (Laura, Maria [K-6 school administrator], and Gwen [Grade 3 teacher]) worked collaboratively to command the Bee-Bot® to spell a word on an alphabet mat, and they documented algorithmic thinking, debugging, iteration, and evaluation as they completed the task (see Figures 5 and 6).

In these group problem-solving slides, the teachers represented their CT content knowledge by using a screen-based emulator as an abstraction of the robot’s physical motion. They provided formative feedback to one another as they worked collaboratively in the Zoom videoconferencing breakout room to describe their CT process and recognize the unique contributions of individual group members. They captured Maria’s additional use of a paper record of their algorithm as a debugging strategy. The slides provide further evidence of the group’s CT pedagogical content knowledge as the teachers created a classroom activity integrating CT and social studies government topics using the Bee-Bot® emulator.
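The kind of algorithmic thinking and debugging involved in a “Code a Word” task can be sketched in a few lines of Python. This is an illustrative sketch only, not the teachers’ program or the Bee-Bot® emulator’s interface: the 2 x 3 mat layout, the command names, the starting square, and the `run_program` function are all assumptions made for the example.

```python
# Hypothetical 2 x 3 alphabet mat (real mats are larger and laid out differently).
MAT = ["ABC",
       "DEF"]

# Headings as (row, column) steps: north, east, south, west.
STEPS = [(-1, 0), (0, 1), (1, 0), (0, -1)]


def run_program(program, row, col, heading=1):
    """Simulate a list of commands and return the letters recorded at each PAUSE.

    The robot starts at (row, col) facing east (heading=1) by default.
    Command names are assumed for illustration.
    """
    letters = []
    for command in program:
        if command in ("FORWARD", "BACK"):
            d_row, d_col = STEPS[heading]
            sign = 1 if command == "FORWARD" else -1
            row, col = row + sign * d_row, col + sign * d_col
            if not (0 <= row < len(MAT) and 0 <= col < len(MAT[0])):
                raise ValueError(f"{command} moved the robot off the mat")
        elif command == "LEFT":
            heading = (heading - 1) % 4   # quarter turn counterclockwise
        elif command == "RIGHT":
            heading = (heading + 1) % 4   # quarter turn clockwise
        elif command == "PAUSE":
            letters.append(MAT[row][col])  # "spell" the letter under the robot
        else:
            raise ValueError(f"unknown command: {command}")
    return "".join(letters)


# Start on "B" facing east and spell the word BED.
PROGRAM = ["PAUSE", "RIGHT", "FORWARD", "PAUSE", "RIGHT", "FORWARD", "PAUSE"]
# A buggy first attempt: turning left instead of right drives the robot off the mat.
BUGGY = ["PAUSE", "LEFT", "FORWARD", "PAUSE"]

print(run_program(PROGRAM, 0, 1))  # prints BED
```

Tracing the buggy command list by hand, as with a paper record of the algorithm, shows the wrong turn at the second step; the simulation surfaces the same error as a `ValueError`.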

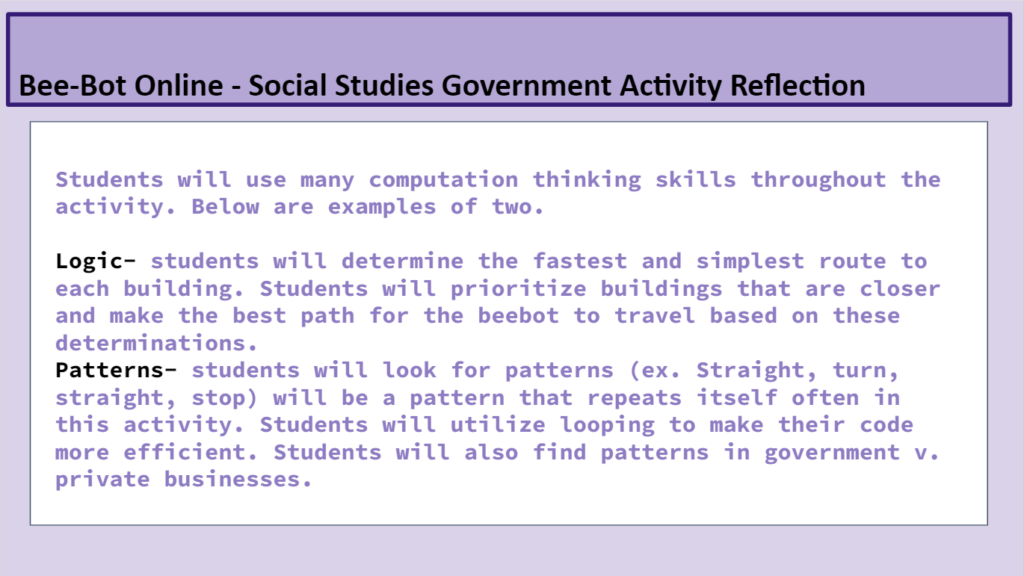

Laura extended her pedagogical content knowledge in her individual DINb by responding to her instructor’s comment prompt during the week after the in-class activity. Her instructor had commented on the Google slide, “Add a phrase or sentence to explain where you see CT within this activity.” Laura created an additional slide (see Figure 7) with a deep description of how students would use logic and patterns as they developed algorithms to reason about the difference between private and government businesses.

Figure 5

Group Slide in Laura’s DINb: Representing CT Content Knowledge

Figure 6

Group Slide in Laura’s DINb: Representing CT Pedagogical Content Knowledge

Figure 7

Individual Slide in Laura’s DINb: Extending Knowledge of CT Skills

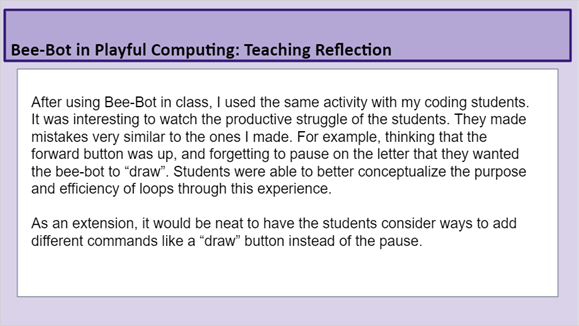

Laura added a fourth slide related to the activity to reflect on her decision to use the “Code a Word” activity with her own Grade 3 students in an after-school coding class (see Figure 8). Because Laura was able to see struggle as productive in her own CT learning, she was comfortable with allowing her students the same space to tinker and explore. She extended her CT by describing how she would increase her own students’ CT opportunities.

Figure 8

Individual Slide in Laura’s DINb: Extending CT Pedagogical Content Knowledge

Laura’s end-of-course reflection provided a window on the value of the DINb as an assessment structure:

The DINb allowed me space to digest activities, my challenges, and my growth. The space to sit alone with something after learning it in class was a really powerful way of further developing concepts and ideas. The comments from my instructor were helpful in developing confidence as a computational thinker!

Her DINb contained evidence of three cycles of feedback and revision related to the Bee-Bot® “Code a Word” task: (a) small group sensemaking with in-class peer formative feedback, (b) Laura’s individual response to out-of-class instructor formative feedback about CT, and (c) unprompted reflections on her classroom implementation with subsequent summative feedback from the instructor. These three cycles of feedback supported Laura’s ipsative assessment of her own CT learning.

Sharing and Revising Unfinished Thinking

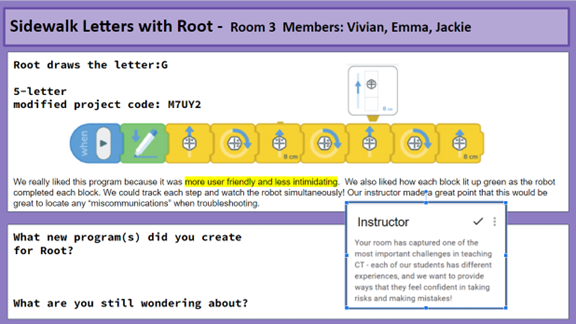

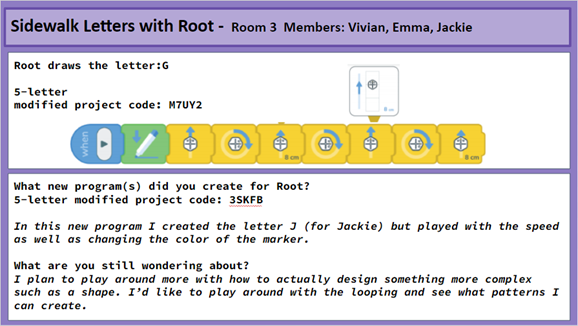

In a second problem-solving task during Week 4, the teachers built on their earlier experiences with the Scratch block-based programming language (www.scratch.mit.edu) to explore a graphical, drag-and-drop coding platform and virtual Root® robot simulator (https://code.irobot.com/#/) for prereaders. The task was adapted from an early elementary TrafficBot “Hour of Code” lesson (https://edu.irobot.com/learning-library/traffic-bot). The instructor sought to deepen teachers’ understanding of sequencing and repetition in programming with an adapted “use-modify-create” pedagogical approach (Lee et al., 2011).

Teachers were provided with starter code for Root® to draw select letters. They examined pictures of code to identify patterns and to predict output. They modified the code on the simulator by replacing repeated code with loops. Teachers were also prompted to provide hyperlinks to their modified programs in progress within Google Slides so that other teachers and the instructor could provide feedback on their code.
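The modification step, replacing repeated code with a loop, can be illustrated with a minimal Python sketch. The command names and the square shape are assumptions chosen for the example; they are not the Root® starter code or the blocks used in the simulator.

```python
def square_repeated():
    """Starter-style version: the same two commands written out four times."""
    return [
        "forward", "turn_right",
        "forward", "turn_right",
        "forward", "turn_right",
        "forward", "turn_right",
    ]


def square_looped(sides=4):
    """Modified version: the repeated pattern is written once inside a loop."""
    commands = []
    for _ in range(sides):
        commands += ["forward", "turn_right"]
    return commands


# Both versions produce the same sequence of commands.
print(square_repeated() == square_looped())  # prints True
```

Recognizing the repeated pair of commands is the pattern-finding step; the loop then expresses the same sequence more compactly and makes it easy to change the number of repetitions.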

Jackie transferred her group’s progress on the task to her DINb (see Figure 9). Her group did not complete the extension portion of the lesson in the time allowed during the synchronous Zoom breakout session. The instructor’s formative feedback affirmed the progress that the group had made in their thinking about CT content and pedagogy, and Jackie shared her continued thinking about the CT possibilities with the Root® simulator in her revisions to the group problem-solving slide (see Figure 10). The additional italicized text represents the ideas that Jackie added as she worked to deepen her CT understanding. She wrote another program for Root® on her own and shared the link with her instructor, and she envisioned how she could create new patterns with her growing programming knowledge.

Figure 9

Original Group Slides in Jackie’s DINb: Sharing Unfinished Thinking About CT Content

Figure 10

Individual Slide in Jackie’s DINb: Revising Unfinished Thinking About CT Content

Teachers said that they did not feel the pressure to submit a static set of correct code at the end of each class; they could add pictures and screenshots of their work in progress. They often communicated their intentions to continue exploring programs beyond class time and described this additional work in their DINbs with hyperlinks to their revised programs. Their shared understanding that speed and correctness were not the focus of the CT tasks was emphasized in cycles of formative and ipsative feedback within the DINbs. These authentic assessment cycles empowered the teachers to persist in complex problem solving because unfinished thinking was expected and supported.

We found evidence of two cycles of feedback and revision related to the TrafficBot “Hour of Code” task in Jackie’s DINb: (a) small group sensemaking with in-class peer formative feedback and out-of-class instructor formative feedback about CT, and (b) Jackie’s revisions of incomplete thinking and additional programming efforts with subsequent summative feedback from the instructor. Jackie elaborated on the value of a DINb as a technology-based ipsative assessment of her progress as a CT learner in her end-of-course reflection:

Being able to go back and tinker more with a concept I wasn’t understanding was powerful for me. It showed me that the learning wasn’t done and that if I practice more, I really can master the concept. I also like the digital aspect because it means you can constantly add on and work anywhere. Being able to go in and watch the changes in my thinking and also see my growth is also rewarding.

Jackie also appreciated the quality of feedback cycles as more than simply suggestions of possible changes. She often responded to instructor comments in Google Slides with additional wonderings about CT. Jackie’s perspective on the value of feedback was echoed by other teachers in their reflections on the usefulness of the DINb.

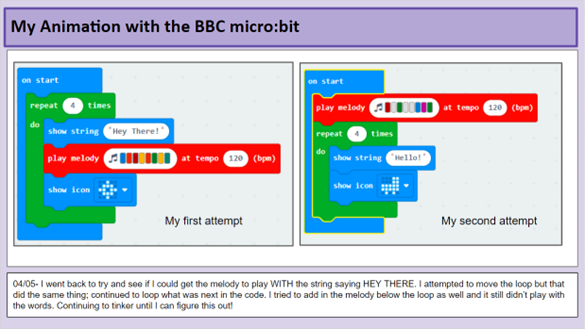

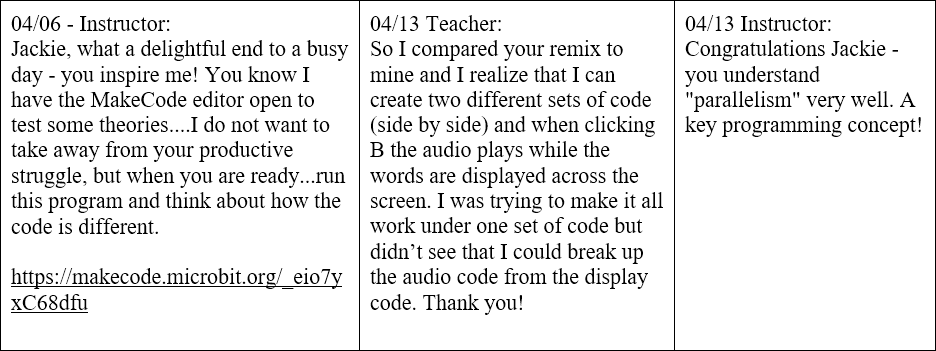

Encouraging Teachers to Converse About CT

The DINb also created a virtual space for advancing CT content and pedagogical content knowledge through text exchanges between the instructor and the teacher, as evidenced in a Week 11 creative coding activity using the BBC micro:bit® simulator with Microsoft MakeCode® (https://makecode.microbit.org/#editor). The teachers followed another use-modify-create sequence with starter code for a “Shining Sunbeams” animation project (https://microbit.org/projects/make-it-code-it/shining-sunbeams/) as they created their own individual animations with an input, a loop, and music.

Jackie grappled with the linearity of a block-based programming sequence and her desire to coordinate sound and display. She represented her two attempts to program the BBC micro:bit® simulator to play sound while the display was changing during class (see Figure 11) and expressed her intent to persist in achieving her programming goal. During the Zoom class session, her peers provided verbal feedback that they were struggling with the same issue. The DINb representation of the CT process became the start of a multiday exchange between the instructor and the teacher in Google Slides comments (see Figure 12).
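The tension between a linear program sequence and the goal of coordinating sound and display can be sketched in plain Python. This is a conceptual illustration only: it does not use the micro:bit® or MakeCode® interfaces, it is not Jackie’s code or the instructor’s remix, and the function names, note names, and frame labels are hypothetical.

```python
def sequential(notes, frames):
    """Linear sequence: every note plays before any display frame is shown."""
    return [("sound", note) for note in notes] + [("display", frame) for frame in frames]


def interleaved(notes, frames):
    """Coordinated sequence: each note is paired with one display frame.

    zip stops at the shorter list, so extra notes or frames are dropped.
    """
    timeline = []
    for note, frame in zip(notes, frames):
        timeline.append(("sound", note))
        timeline.append(("display", frame))
    return timeline


notes = ["C", "E"]
frames = ["small sun", "large sun"]

print(sequential(notes, frames))
# [('sound', 'C'), ('sound', 'E'), ('display', 'small sun'), ('display', 'large sun')]
print(interleaved(notes, frames))
# [('sound', 'C'), ('display', 'small sun'), ('sound', 'E'), ('display', 'large sun')]
```

Comparing the two timelines makes the issue visible: in the linear version the display waits for the sound to finish, whereas the interleaved version alternates the two so that they appear to progress together.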

By framing her formative feedback as a collaborative effort to answer a programming question, the instructor encouraged Jackie to persist and extend her learning beyond the class sessions. By recreating and remixing Jackie’s code as part of her Google Slide commenting, the instructor created an additional formative-ipsative feedback cycle.

Figure 11

Individual Slide in Jackie’s DINb: Starting a CT Conversation

Figure 12

Sequence of Google Slides Comments in Jackie’s DINb

Jackie reflected on the conversational nature of comment feedback within this activity and its importance in emphasizing CT as a process. She described her later use of the DINb structure as an authentic assessment in her own virtual Grade 5 classroom:

Leaving comments as students are working versus giving them feedback and it being final, I think, promotes a positive environment where students aren’t afraid to not grasp a concept immediately. The idea of productive struggle is so evident in this assignment. I began using a concept like this with my virtual Grade 5 class and was amazed at just how much I was missing before. This activity created an open environment within my class where students weren’t afraid to “fail”. It was always an opportunity to grow through tinkering.

Jackie’s experiences with the DINb positively impacted not only her CT learning but also her teaching practice. Her use of this assessment model changed her interactions with her own Grade 5 students.

Discussion

The DINb as an enactment of the adapted Authentic Integrated Online Assessment Model provided both the instructor and the teachers with a technological window on CT learning. The representation and extension of CT in editable Google Slides supported teachers’ development of the disposition of persistence in working with difficult problems and tolerance for ambiguity. The DINb normalized productive struggle and unfinished thinking, and it encouraged more tinkering and reflection in response to instructor questions. Documenting in-class problem solving in editable Google Slides fostered continuing conversations about CT outside of class. Because the course summative assessments were portfolios of these iterative representations of their own CT interactions, the teachers felt accountable for their own learning.

Unlike traditional portfolio assessments, which are often systematic collections of products that demonstrate mastery of course objectives, the DINb is an authentic computational artifact. It reflects the very dispositions that are at the center of the practice of CT. The formative/ipsative feedback cycles in the weekly revisions of the DINb affirmed that CT content and pedagogical content knowledge were not something to be acquired but, instead, something to be developed over time.

Teachers’ weekly additions and revisions to their DINb in response to formative feedback increased their tolerance for ambiguity in the form of incomplete activities. The DINb encouraged persistence as they revisited and revised their thinking over the weeks of the course. The teachers’ productive interactions with Google Slides within their DINbs also deepened their TPACK for creative and meaningful assessment in their own PK-12 classrooms.

The foregrounding of ipsative assessment in the Authentic Integrated Online Assessment Model elevated the goal of continuing growth throughout the CT learning process. As teachers reviewed their representations and extensions of collaborative work and individual reflection over the course, they saw evidence of their deepening understanding of the six facets of CT with each new problem-solving experience. They appreciated the opportunity to leave thinking unfinished with the expectation that they could revisit ideas with instructor support. The commenting conversations inspired comfort with not knowing in the moment and confidence that they would be able to know in the future.

Taken together, the three themes that emerged in our analysis revealed the unique affordances of the DINb as authentic assessment in online STEM teacher education. Because the DINb leveraged technology to capture teachers’ iterative constructions of CT content and pedagogical content knowledge, the teachers had evidence of their own growth as computational thinkers. They also saw the importance of providing iterative opportunities for reasoning and sensemaking in the STEM classroom.

Conclusions and Implications

The DINb represents a novel use of general instructional technology in the form of Google Slides as an authentic online assessment. This study offers evidence of the DINb as a synthesis of formative, ipsative, and summative assessment functions with the important potential to help teachers and their students to truly see themselves as computational thinkers. By foregrounding the processes of CT over the products of CT, the DINb builds the dispositions that teachers need to construct TPACK for CT integration. Assessing the process of CT learning offers a worthwhile contrast to traditional assessments of CT as a set of skills (Fields et al., 2019; Tang et al., 2020).

The teachers in this study recognized that they were still constructing their own CT knowledge. Yet, the CT dispositions the teachers demonstrated in iteratively constructing their DINbs can build their agency to integrate CT activities in their own classroom instruction as they continue to learn CT. Use of a DINb can increase PK-12 teacher readiness to integrate CT and technology in classroom activities.

As the STEM education community continues to advocate for CT integration to increase the depth of learning in PK-12 classrooms (Hjalmarson et al., 2020; Rich et al., 2020; Weintrop et al., 2016), there is a need for rigorous teacher professional development experiences (English, 2016). These experiences should emulate the very same dispositions that PK-12 students will need to value CT as part of their STEM learning journey. Future research on the use of a DINb as an authentic STEM assessment should also extend the context of this study beyond synchronous online CT learning for practicing teachers.

The interplay of editable digital documents and easy access to online cycles of instructor feedback can also change the ways in which instructors assess mathematics problem solving and scientific inquiry in preservice teacher education, asynchronous online STEM learning environments, and PK-12 classrooms. When teachers use authentic assessments, they see evidence of their students’ evolving CT. They also see their students’ confidence in dealing with complexity, persistence in working with difficult problems, and tolerance for ambiguity. Leveraging the power of technology to reveal these problem-solving dispositions will make STEM education more accessible to all students.

References

Adler, R. F., & Beck, K. (2020). Developing an introductory computer science course for pre-service teachers. Journal of Technology and Teacher Education, 28(3), 519–541. https://www.learntechlib.org/primary/p/214351/

Angeli, C., & Giannakos, M. (2020). Computational thinking education: Issues and challenges. Computers in Human Behavior, 105, Article 106185. https://doi.org/10.1016/j.chb.2019.106185

Baker, C. K., Galanti, T. M., Kraft, T., & Morrow-Leong, K. (2021). Building powerful mathematical thinkers with dINBs. Mathematics Teacher: Learning and Teaching PK-12, 114(10), 750–758. https://doi.org/10.5951/MTLT.2020.0342

Baker, C. K., & Hjalmarson, M. (2019). Designing purposeful student interactions to advance synchronous learning experiences. International Journal of Web-Based Learning and Teaching Technologies, 14(1), 1–16. https://www.igi-global.com/gateway/article/214975

Barr, V., & Stephenson, C. (2011). Bringing computational thinking to K-12: What is involved and what is the role of the computer science education community? ACM Inroads, 2(1), 48–54. https://doi.org/10.1145/1929887.1929905

Bell, T., Duncan, C., & Atlas, J. (2016). Teacher feedback on delivering computational thinking in primary school. In Proceedings of the 11th Workshop in Primary and Secondary Computing Education (pp. 100–101). https://doi.org/10.1145/2978249.2978266

Boulden, D. C., Rachmatullah, A., Oliver, K. M., & Wiebe, E. (2021). Measuring in-service teacher self-efficacy for teaching computational thinking: Development and validation of the T-STEM CT. Education and Information Technologies, 26, 4663–4689. https://doi.org/10.1007/s10639-021-10487-2

Bryman, A. (2008). Social research methods. Oxford University Press.

Committee on STEM Education. (2018). Charting a course for success: America’s strategy for STEM education. National Science and Technology Council. https://eric.ed.gov/?id=ED590474

Computer Science Teachers Association and International Society for Technology in Education. (2011). Computational thinking leadership toolkit. https://cdn.iste.org/www-root/2020-10/ISTE_CT_Leadership_Toolkit_booklet.pdf

Denning, P. J., & Tedre, M. (2019). Computational thinking. MIT Press.

English, L. D. (2016). STEM education K-12: Perspectives on integration. International Journal of STEM Education, 3, Article 3. https://doi.org/10.1186/s40594-016-0036-1

Fields, D. A., Lui, D., & Kafai, Y. B. (2019). Teaching computational thinking with electronic textiles: Modeling iterative practices and supporting personal projects in exploring computer science. In S. Kong & H. Abelson (Eds.), Computational thinking education (pp. 279–294). Springer.

Galanti, T. M., Baker, C. K., Morrow-Leong, K., & Kraft, T. (2021a). Enriching TPACK in mathematics education: Using digital interactive notebooks in synchronous online learning environments. Interactive Technology and Smart Education, 18(3), 345–361. https://doi.org/10.1108/ITSE-08-2020-0175

Galanti, T. M., Baker, C., Kraft, T., & Morrow-Leong, K. (2021b). Using mathematics digital interactive notebooks as authentic integrated online assessments. In M. Niess, & H. Gillow-Wiles (Eds.), Transforming teachers’ online pedagogical reasoning for teaching K-12 students in virtual learning environments (pp. 470–493). IGI Global.

Hattie, J. A. (2012). Visible learning for teachers: Maximizing impact on learning. Routledge. https://doi.org/10.4324/9780203181522

Hjalmarson, M. A., Holincheck, N., Baker, C. K., & Galanti, T. M. (2020). Learning models and modeling across the STEM disciplines. In C. Johnson, M. J. Mohr-Schroeder, T. Moore, & L. English (Eds.), The handbook of research on STEM education (pp. 223–233). Routledge. https://doi.org/10.4324/9780429021381-21

Hodges, C., Moore, S., Lockee, B., Trust, T., & Bond, A. (2020, March 27). The difference between emergency remote teaching and online learning. https://er.educause.edu/articles/2020/3/the-difference-between-emergency-remote-teaching-and-online-learning

Hughes, G. (2011). Towards a personal best: A case for introducing ipsative assessment in higher education. Studies in Higher Education, 36(3), 353–367. https://doi.org/10.1080/03075079.2010.486859

Hughes, G., Wood, E., & Kitagawa, K. (2014). Use of self-referential (ipsative) feedback to motivate and guide distance learners. Open Learning, 29(1), 31–44. https://doi.org/10.1080/02680513.2014.921612

Kong, S. C., Lai, M., & Sun, D. (2020). Teacher development in computational thinking: Design and learning outcomes of programming concepts, practices and pedagogy. Computers & Education, 151, Article 103872. https://doi.org/10.1016/j.compedu.2020.103872

Kotsopoulos, D., Floyd, L., Khan, S., Namukasa, I. K., Somanath, S., Weber, J., & Yiu, C. (2017). A pedagogical framework for computational thinking. Digital Experiences in Mathematics Education, 3(2), 154–171. https://doi.org/10.1007/s40751-017-0031-2

Lee, I., Grover, S., Martin, F., Pillai, S., & Malyn-Smith, J. (2020). Computational thinking from a disciplinary perspective: Integrating computational thinking in K-12 science, technology, engineering, and mathematics education. Journal of Science Education and Technology, 29, 1–8. https://doi.org/10.1007/s10956-019-09803-w

Lee, I., Martin, F., Denner, J., & Coulter, R. (2011). Computational thinking for youth in practice. ACM Inroads, 2(1), 32–37. https://doi.org/10.1145/1929887.1929902

Li, Y., Schoenfeld, A. H., diSessa, A. A., Graesser, A. C., Benson, L. C., English, L. D., & Duschl, R. A. (2020). On computational thinking and STEM education. Journal for STEM Education Research, 3(2), 147. https://doi.org/10.1007/s41979-020-00044-w

Liu, L. (2016). Using generic inductive approach in qualitative educational research: A case study analysis. Journal of Education and Learning, 5(2), 129–135. https://doi.org/10.5539/jel.v5n2p129

Lodi, M., & Martini, S. (2021). Computational thinking, between Papert and Wing. Science & Education, 30, 883–908. https://doi.org/10.1007/s11191-021-00202-5

Lytle, N., Cateté, V., Boulden, D., Dong, Y., Houchins, J., Milliken, A., Isvik, A., Bounajim, D., Wiebe, E., & Barnes, T. (2019, July). Use, modify, create: Comparing computational thinking lesson progressions for STEM classes. In Proceedings of the 2019 ACM Conference on Innovation and Technology in Computer Science Education (pp. 395–401). https://doi.org/10.1145/3304221.3319786

Mason, S. L., & Rich, P. J. (2019). Preparing elementary school teachers to teach computing, coding, and computational thinking. Contemporary Issues in Technology and Teacher Education, 19(4), 790–824. https://citejournal.org/volume-19/issue-4-19/general/preparing-elementary-school-teachers-to-teach-computing-coding-and-computational-thinking

Merriam, S. B. (2009). Qualitative research: A guide to design and implementation. Jossey-Bass.

Mishra, P., & Koehler, M. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017–1054.

Mouza, C., Yadav, A., & Ottenbreit-Leftwich, A. (2018). Developing computationally literate teachers: Current perspectives and future directions for teacher preparation in computing education. Journal of Technology and Teacher Education, 26(3), 333–352. https://www.learntechlib.org/p/184602/

Niess, M. L. (2005). Preparing teachers to teach science and mathematics with technology: Developing a technology pedagogical content knowledge. Teaching and Teacher Education, 21(5), 509–523. https://doi.org/10.1016/j.tate.2005.03.006

Papert, S. (1980). Mindstorms: Children, computers and powerful ideas. Basic Books.

Pérez, A. (2018). A framework for computational thinking dispositions in mathematics education. Journal for Research in Mathematics Education, 49(4), 424–461. https://doi.org/10.5951/jresematheduc.49.4.0424

Poulakis, E., & Politis, P. (2021). Computational thinking assessment: Literature review. In T. Tsiatsos, S. Demetriadis, A. Mikropoulos, & V. Dagdilelis (Eds.), Research on e-learning and ICT in education: Technological, pedagogical, and instructional perspectives (pp. 111–128). Springer. https://doi.org/10.1007/978-3-030-64363-8_7

Rapanta, C., Botturi, L., Goodyear, P., Guardia, L., & Koole, M. (2020). Online university teaching during and after the Covid-19 crisis: Refocusing teacher presence and learning activity. Postdigital Science Education, 2, 923–945. https://doi.org/10.1007/s42438-020-00155-y

Reding, T. E., & Dorn, B. (2017). Understanding the “teacher experience” in primary and secondary CS professional development. In Proceedings of the 2017 ACM Conference on International Computing Education Research (pp. 155–163). https://doi.org/10.1145/3105726.3106185

Rich, K. M., Yadav, A., & Larimore, R. A. (2020). Teacher implementation profiles for integrating computational thinking into elementary mathematics and science instruction. Education and Information Technologies, 25(4), 3161–3188. https://doi.org/10.1007/s10639-020-10115-5

Rich, P. J., Mason, S. L., & O’Leary, J. (2021). Measuring the effect of continuous professional development on elementary teachers’ self-efficacy to teach coding and computational thinking. Computers & Education, 168, Article 104196. https://doi.org/10.1016/j.compedu.2021.104196

Saldaña, J. (2021). The coding manual for qualitative researchers. Sage.

Shute, V. J., Sun, C., & Asbell-Clarke, J. (2017). Demystifying computational thinking. Educational Research Review, 22, 142–158. https://doi.org/10.1016/j.edurev.2017.09.003

Tang, X., Yin, Y., Lin, Q., Hadad, R., & Zhai, X. (2020). Assessing computational thinking: A systematic review of empirical studies. Computers & Education, 148, Article 103798. https://doi.org/10.1016/j.compedu.2019.103798

Thomas, D. R. (2006). A general inductive approach for analyzing qualitative evaluation data. American Journal of Evaluation, 27(2), 237–246. https://doi.org/10.1177/1098214005283748

Umutlu, D. (2021). An exploratory study of pre-service teachers’ computational thinking and programming skills. Journal of Research on Technology in Education, 54(5), 754–768. https://doi.org/10.1080/15391523.2021.1922105

Yadav, A., Stephenson, C., & Hong, H. (2017). Computational thinking for teacher education. Communications of the ACM, 60, 55–62. https://doi.org/10.1145/2994591

Weintrop, D., Beheshti, E., Horn, M., Orton, K., Jona, K., Trouille, L., & Wilensky, U. (2016). Defining computational thinking for mathematics and science classrooms. Journal of Science Education and Technology, 25(1), 127–147. https://doi.org/10.1007/s10956-015-9581-5

Wiebe, E., Kite, V., & Park, S. (2020). Integrating computational thinking in STEM. In C. C. Johnson, M. J. Mohr-Schroeder, T. J. Moore, & L. D. English (Eds.), The handbook of research on STEM education (pp. 196–209). Routledge.

Wiggins, G. (2006, April 3). Healthier testing made easy: The idea of authentic assessment. Edutopia Magazine. https://www.edutopia.org/authentic-assessment-grant-wiggins

Wing, J. M. (2006). Computational thinking. Communications of the ACM, 49(3), 33–35. https://doi.org/10.1145/1118178.1118215
