Fostering student engagement is highly relevant for higher education instructors, as it leads to improved learning outcomes for students (see Bond & Bedenlier, 2019), is linked to improved persistence, retention, and achievement (Finn, 2006; Kuh, Cruce, Shoup, Kinzie, & Gonyea, 2008), and relates to students’ involvement within their institution (e.g., Junco, 2012). With higher education institutions additionally focusing on developing students’ 21st-century skills (Claro & Ananiadou, 2009; Oliver & Jorre de St Jorre, 2018), the use of educational technology to enhance these skills, as well as student engagement, has received increased attention in research and practice (e.g., Redecker, 2017).

The field of education has been particularly interested in researching the use and impact of educational technology (Bond, Buntins, Bedenlier, Zawacki-Richter, & Kerres, 2020), given the need for pre- and in-service teachers to develop ICT skills and knowledge for application in the classroom and to develop students’ ICT skills (OECD, 2018). However, teacher candidates have been shown to have particular difficulty using digital technology meaningfully and capitalizing on its advantages for teaching and learning (Tondeur et al., 2012), and preparing them for the use of educational technology is an ongoing challenge for teacher educators (Liu, 2016; Ping, Schellings, & Beijaard, 2018; Tondeur et al., 2019).

Agreement exists on the complexity and multidimensionality of the concept of student engagement (Appleton, Christenson, & Furlong, 2008; Ben-Eliyahu, Moore, Dorph, & Schunn, 2018). However, disagreement and misunderstanding remain (Azevedo, 2015; Buckley, 2017; Zepke, 2018), especially in educational technology research (Bond, 2020; Bond et al., 2020; Henrie, Halverson, & Graham, 2015).

Despite some arguments to the contrary (e.g., Fredricks, Filsecker, & Lawson, 2016; Reeve & Tseng, 2011), student engagement has three generally accepted dimensions: cognitive, affective, and behavioral (Fredricks, Blumenfeld, & Paris, 2004). Within each dimension are several facets (or indicators) of engagement and disengagement (see Appendix A), which are experienced on a continuum (Coates, 2007; Payne, 2017), depending on their activation (high or low) and valence (positive or negative; Pekrun & Linnenbrink-Garcia, 2012). Drawing on previous research (see Bond & Bedenlier, 2019), the following understanding of student engagement guided the investigation in this study:

Student engagement is the energy and effort that students employ within their learning community, observable via any number of behavioral, cognitive or affective indicators across a continuum. It is shaped by a range of structural and internal influences, including the complex interplay of relationships, learning activities and the learning environment. The more students are engaged and empowered within their learning community, the more likely they are to channel that energy back into their learning, leading to a range of short and long term outcomes, that can likewise further fuel engagement. (Bond et al., 2020, p. 3).

While we identified 27 literature and systematic reviews on the topic of educational technology and student engagement published up to and including 2018 (see Appendix D for a list and Bond et al., 2020, for a comprehensive examination), only one addressed preservice teachers specifically (Atmacasoy & Aksu, 2018). Another review (on the use of simulations in preservice teacher education) was later identified in an updated search.

This review, however, touched only upon individual facets of student engagement, while primarily focusing on interpersonal skills in the context of classroom management (Theelen, van den Beemt, & den Brok, 2019). Therefore, the present article focuses on a subset of data from a larger systematic review exploring literature on student engagement and educational technology in higher education. Subsets within the systematic review were created based on the field of study classification by the UNESCO Institute for Statistics (2015), under which education forms one field of study comprising, for example, teacher education and educational science. The review described here, therefore, sought to answer the following questions:

- What are the characteristics (countries, educational settings, study populations, and technology tools used) and methods of research on student engagement and educational technology in higher education within the field of education, and how do they compare to the larger corpus?

- How is educational technology research theoretically grounded within the field of education?

- Which facets of student engagement and disengagement have been shown to be affected as a result of using educational technology in the field of education?

Method

To gain an insight into how educational technology affects student engagement within the field of education, a systematic review was undertaken using an explicit, transparent, and replicable search strategy (as in Gough, Oliver, & Thomas, 2012). To ensure more current technology was included in the review, the search strategy was directed by defined inclusion/exclusion criteria (see Table 1).

Table 1

Final Inclusion/Exclusion Criteria

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| Published between 2007 and 2016 | Published before 2007 |
| English language | Not in English |
| Higher education | Not higher education |
| Empirical, primary research | Not empirical, primary research (e.g., review) |
| Indexed in ERIC, Web of Science, Scopus or PsycINFO | Evaluation or a description of a tool |
| Educational technology | No educational technology |
| Student engagement | No learning setting |
| | No student engagement |

The review protocol, including a thorough description of the method used, the search string development, the article selection strategy, and the full data set, is available open access on ResearchGate (https://www.researchgate.net/project/Facilitating-student-engagement-with-digital-media-in-higher-education-ActiveLeaRn). Likewise, the method and the systematic review journey are discussed in detail in Bond et al. (2020) and Bedenlier, Bond, Buntins, Zawacki-Richter, and Kerres (2020b), which are also available open access. Therefore, only an abridged version of the method is provided here.

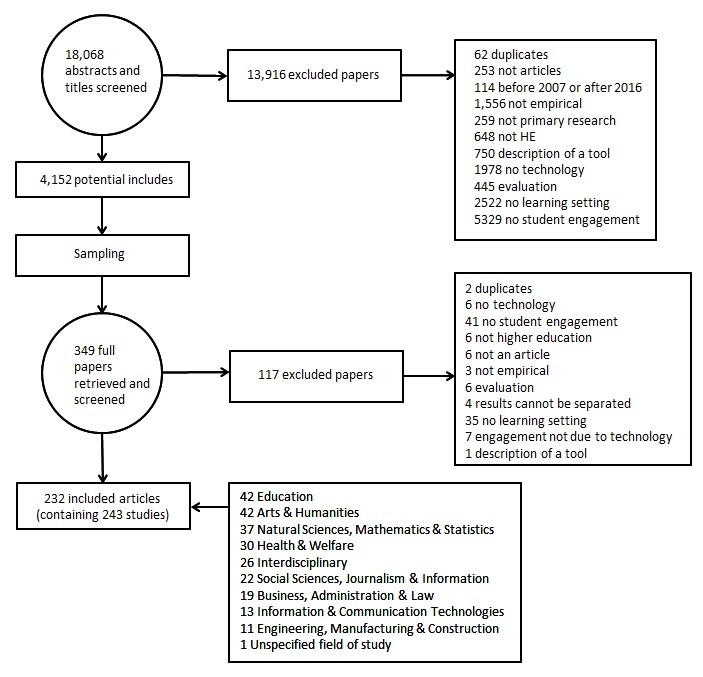

After screening 18,068 titles and abstracts, 4,152 potential articles remained (see Figure 1). Due to time constraints, as well as the extraordinarily large number of relevant articles in the population, we decided to draw a sample from this corpus (Buntins, Bond, Bedenlier, Zawacki-Richter, & Kerres, 2018). We used sample size estimation (Kupper & Hafner, 1989), implemented with the R package MBESS (Kelley, Lai, Lai, & Suggests, 2018).

We sampled 349 articles, assuming a 5% margin of error, an expected proportion of .5, and an alpha of .05; the sample was stratified by publication year, given that educational technology has become more differentiated within the last decade and student engagement has become more prevalent (Zepke, 2018). Thus, we followed the usual systematic review process of using a Boolean search string alongside stringent inclusion/exclusion criteria, but added a sampling step to reduce the corpus to a smaller, unbiased sample.
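As a rough illustration of the arithmetic behind such an estimate, the sketch below applies the textbook normal-approximation formula for estimating a proportion, n0 = z²·p(1 - p)/e², followed by a finite population correction for the 4,152 articles that remained after title and abstract screening. This is an assumption for illustration only: the helper name is hypothetical, and the MBESS routine the authors used may apply a different procedure, so this simple formula lands close to, but not exactly on, the 349 articles reported.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(population: int, margin: float = 0.05,
                               proportion: float = 0.5, alpha: float = 0.05) -> int:
    """Hypothetical helper: sample size needed to estimate a proportion.

    Normal approximation n0 = z^2 * p * (1 - p) / e^2, followed by the
    finite population correction n = n0 / (1 + (n0 - 1) / N).
    """
    z = NormalDist().inv_cdf(1 - alpha / 2)      # two-sided critical value (about 1.96)
    n0 = z ** 2 * proportion * (1 - proportion) / margin ** 2
    n = n0 / (1 + (n0 - 1) / population)         # shrink n0 for a finite population
    return math.ceil(n)                          # round up to whole articles


if __name__ == "__main__":
    # 4,152 articles remained after title and abstract screening.
    print(sample_size_for_proportion(4152))
```

Setting the expected proportion to .5 maximizes p(1 - p), which yields the most conservative (largest) sample for a given margin of error.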

To ensure interrater reliability, two researchers screened the full texts of the first 100 articles and reached 88% agreement on inclusion/exclusion. The discrepancies in the remaining 12% were then discussed until agreement was reached. Further comparison screening followed to increase the level of reliability.

After full-text screening, 232 articles containing 243 studies remained for data extraction. These articles were then coded using a comprehensive coding scheme, including codes to extract information on the execution and study design (e.g., methodology and study sample), as well as information on the mode of delivery, learning scenario (including broader pedagogies such as social collaborative and self-determined learning and specific pedagogies such as flipped learning), and educational technology used.

Specific examples of student engagement and disengagement were also coded under facets of cognitive, affective, or behavioral (dis)engagement, which were identified following an extensive literature review (see Appendix A). The resulting evidence map provides more detailed information on the 243 studies, as well as the method used (see Bond et al., 2020). Likewise, a systematic review of the arts and humanities articles from the overall sample was undertaken (see Bedenlier, Bond, Buntins, Zawacki-Richter, & Kerres, 2020a).

Given the large number of educational technology tools and applications identified across the 243 studies, Bower’s (2016) typology of learning technologies (see Appendix B) was employed. While some tools could be classified as more than one type according to the typology, “the type of learning that results from the use of the tool is dependent on the task and the way people engage with it rather than the technology itself,” and therefore, “the typology is presented as descriptions of what each type of tool enables and example use cases rather than prescriptions of any particular pedagogical value system” (p. 774). (See Bower, 2015, for a deeper explanation of each category.)

Overall Sample Description

The studies in the overall corpus were undertaken within 33 different countries, with most studies being undertaken within the United States (35.4%, n = 86), United Kingdom (10.7%, n = 26) and Australia (7.8%, n = 19). Few studies in the sample originated from mainland Europe, Africa, the Middle East, and South America. Studies were predominantly conducted within universities (79%, n = 191), followed by nonspecified institutions (10%, n = 24) and colleges (8.2%, n = 21). The most studied participant group was undergraduate students (60%, n = 146), followed by postgraduate students (14%, n = 33) and a combination of undergraduate and postgraduate students (9%, n = 41).

The researched study disciplines are depicted in the PRISMA flow chart (see Figure 1). Quantitative methods were the most frequently employed (42%, n = 103), followed by mixed methods (35%, n = 84) and qualitative methods (23%, n = 56). Quantitative data collection methods were the most prevalent, with surveys the most frequently used (65%, n = 157), followed by ability tests (40%, n = 97), and log data (26%, n = 62). The most frequently employed qualitative method was document analysis (22%, n = 53), such as analyzing student blog and discussion forum postings, followed by interviews (15%, n = 36) and focus groups (10%, n = 24).

Blended learning (45%, n = 109) was the most researched mode of delivery, followed by distance education (30%, n = 72) and face-to-face instruction (23%, n = 55). Social-collaborative learning (SCL) was the most often employed learning scenario (58.4%, n = 142), followed by self-directed learning (SDL; 43.2%, n = 105) and game-based learning (5.8%, n = 14). Across the corpus, more than 50 different educational technology tools were used, the most frequently researched being Learning Management Systems (LMS; n = 89), discussion forums (n = 80), and videos (n = 44). Following a modified version of Bower’s (2016) educational tools typology, 17 broad categories of tools were identified (see Appendix B), which revealed that text-based tools (57%, n = 138), knowledge organization and sharing tools (43%, n = 104), and multimodal production tools (37%, n = 89) were the most investigated categories.

This review was designed to explore various facets of engagement that were not necessarily labeled as such; indeed, almost all of the studies in the corpus (93%, n = 225) lacked a definition of student engagement. Of the 18 (7%) articles that provided a definition, the most popular was that of active participation and involvement in university life and learning, followed by interaction, and time and effort. Fewer than half of the studies (41%, n = 100) were guided by a theoretical framework, with studies drawing on social constructivism (n = 18), the Community of Inquiry model (n = 8), Sociocultural Learning Theory (n = 5), and the Community of Practice model (n = 4).

Behavioral engagement was the most reported dimension of student engagement (86%, n = 209), followed by affective engagement (67%, n = 163) and cognitive engagement (56%, n = 136). The 10 most frequently identified engagement facets were evenly distributed across all three dimensions. Appearing in at least 100 studies each, roughly twice as often as the next most frequently reported facets, were participation/interaction/involvement (49%, n = 118), achievement (44%, n = 106), and positive interactions with teachers and peers (41%, n = 100). Student disengagement was identified considerably less often across the corpus, the most frequent facets being frustration (14%, n = 33), opposition/rejection (8%, n = 20), and disappointment and other affective disengagement (7% each, n = 18).

Given the large number of studies within the corpus and the fact that different fields of study appeared to vary meaningfully in regard to the educational technology tools employed and student engagement facets identified, we decided to synthesize research findings by disciplinary field. This informs researchers and practitioners within those disciplines about research that pertains more directly to their own field. Therefore, this article focuses on the 42 education studies within the corpus (see Appendix E).

Results

In this section, results are described, including study characteristics, educational settings, and technology used. The rate of studies that included a definition of student engagement, as well as the percentage of studies that were guided by a theoretical framework, are then discussed, followed by an overview of the student engagement and disengagement facets affected by educational technology.

Study Characteristics

The 42 education studies included 27 studies from the field of preservice teacher education (64.3%), of which two also included students from other education fields and two also included in-service teachers (see Appendix C). Another 10 studies stemmed from general educational technology courses (23.8%), four addressed in-service teachers (9.5%), and one study focused on early childhood education (2.4%). In two studies, the exact field of study could not be determined from the articles, and in another two studies, some of the participants could not be clearly identified (4.8% each).

The 42 studies were sourced from 41 articles, with Hew (2015) reporting on two independent studies from the field of general educational technology. Studies in this subsample were cited 41.43 times on average (SD = 58.93), and the majority of articles (32 of 41, 78.0%) were published in interdisciplinary journals. Only nine articles (22.0%) appeared in disciplinary journals. In contrast, interdisciplinary journals accounted for only 49.8% of the overall sample, a marked difference between the education subsample and the corpus. However, Appendix C also reveals that these interdisciplinary journals are mainly educational technology journals.

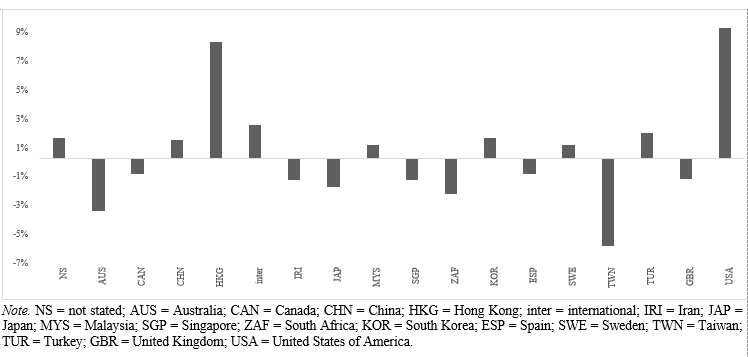

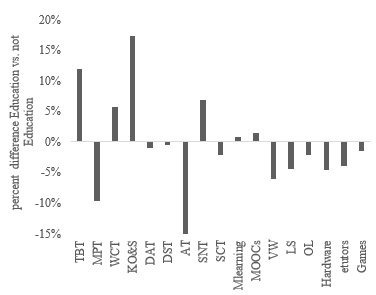

Geographical characteristics. Most of the studies in this sample were undertaken in the US (42.9%, n = 18), followed by four studies each from Hong Kong and the UK (9.5%). Compared to the overall sample, studies in education originated from the US (+9.0 percentage points) and Hong Kong (+8.0 percentage points) considerably more often than studies in the other fields (see Figure 2). By contrast, education studies from Taiwan (n = 1) were considerably less frequent than studies from other fields in the overall sample (-6.1 percentage points). The same applies to Australia (n = 2), which has fewer studies in education (-3.7 percentage points) than in other fields of study.
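The country deviations read most naturally as percentage-point differences between the share of education studies from a country and the share of the remaining non-education studies from that country. Assuming that reading, and assuming the comparison group is the 243 - 42 = 201 non-education studies, the minimal sketch below reproduces the US and Australia figures from counts reported in this article (US: 18 of 42 education studies, 86 of 243 overall; Australia: 2 of 42 and 19 of 243). The function name is hypothetical.

```python
def pp_deviation(n_field: int, total_field: int,
                 n_overall: int, total_overall: int) -> float:
    """Percentage-point difference between a field's share and the share
    among all remaining (non-field) studies in the corpus."""
    share_field = n_field / total_field
    share_rest = (n_overall - n_field) / (total_overall - total_field)
    return 100 * (share_field - share_rest)


# Counts reported in this article: 42 education studies out of 243 overall.
us = pp_deviation(n_field=18, total_field=42, n_overall=86, total_overall=243)
australia = pp_deviation(n_field=2, total_field=42, n_overall=19, total_overall=243)
print(f"US: {us:+.1f} pp, Australia: {australia:+.1f} pp")
# prints: US: +9.0 pp, Australia: -3.7 pp
```

The same calculation applied to blended learning (24 of 42 education studies, 109 of 243 overall) gives the 14.9-point difference reported for educational settings.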

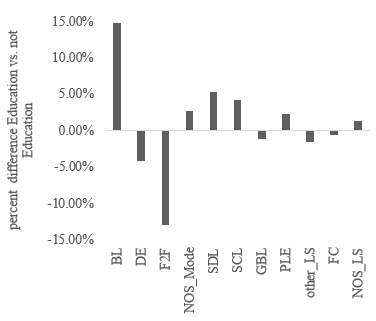

Educational settings. Over half of the investigated courses (57.1%, n = 24) used a blended learning format, followed by online courses (26.2%, n = 11) and face-to-face courses (11.9%, n = 5). In another 7.1% of studies (n = 3), the extent to which online elements were integrated into the course was not clearly identifiable.

Most studies used SCL (61.9%, n = 26), and almost half used elements of SDL (47.6%, n = 20). In a relatively high number of studies (14.3%, n = 6), the learning scenario was not specified. While game-based learning (GBL) and personal learning environments (PLE) were found in two studies each (4.8%), the flipped classroom approach (FC) was used in one study only (2.4%), which also used SCL (see Table 2).

Table 2

Co-occurrence of Learning Scenarios Across the Sample (n = 42)

| Learning scenario | SDL | SCL | GBL | PLE | Other LS | FC | NOS |
|---|---|---|---|---|---|---|---|
| Number of studies | 20 | 26 | 2 | 2 | - | 1 | 6 |
| SDL | - | 0.60 | 0 | 1 | - | 1 | 0 |
| SCL | 0.41 | - | 0 | 0.50 | - | 0 | 0 |
| GBL | 0.33 | 0.42 | - | 0 | - | 0 | 0 |
| PLE | 0.40 | 0.40 | 0 | - | - | 0 | 0 |
| Other LS | 0.33 | 0.67 | 0 | 0 | - | - | - |
| FC | 0.50 | 0.17 | 0 | 0 | 0 | - | 0 |
| NOS | 0 | 0 | 0 | 0 | 0 | 0 | - |
| Sum not education | 85 | 116 | 12 | 5 | 3 | 6 | 26 |

Note. SDL = self-directed learning; SCL = social collaborative learning; GBL = game-based learning; PLE = personal learning environments; Other LS = other learning scenario; FC = flipped classroom; NOS = learning scenario not specified. Values above the diagonal refer to the education studies; values below the diagonal refer to the non-education studies.

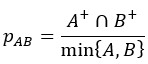

In order to determine how often learning scenarios occurred together, the number of common occurrences was calculated relative to the maximum possible number of common occurrences, which we reported in another article as follows:

In concrete terms, this means that in a contingency table, the cell that indicates how often two learning scenarios occurred together is used ($A^{+} \wedge B^{+}$), and the number in this cell is divided by the smaller of the two learning scenario frequencies. Expressed as a formula,

$$\text{co-occurrence}(A, B) = \frac{n(A^{+} \wedge B^{+})}{\min\big(n(A^{+}),\, n(B^{+})\big)}$$

In 60% of possible cases, SCL and SDL were used in combination (n = 12). In both studies that used PLEs, SDL was also used, and in one of these two studies SCL was also found. Compared with the non-education studies in the overall corpus, the proportion of blended learning studies was 14.9 percentage points higher in the education sample, whereas online and face-to-face settings were employed less often (see Figure 3).

Furthermore, both SDL (+5.3 percentage points) and SCL (+4.2 percentage points) occurred slightly more frequently than in the non-education studies. This is consistent with the finding that SDL and SCL often appeared in combination in the field of education; across the non-education corpus, SCL and SDL were jointly found in only 41% of possible cases.
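To make the co-occurrence measure concrete, the sketch below computes, for each pair of learning scenarios, the number of studies using both divided by the smaller of the two scenario frequencies, that is, the maximum possible number of joint occurrences. It is a minimal Python illustration, not the authors' analysis code; the function names and the toy data are hypothetical, and only the 12, 20, and 26 figures come from this article.

```python
from __future__ import annotations

from itertools import combinations


def co_occurrence_ratio(joint: int, n_a: int, n_b: int) -> float:
    """Joint count of A and B relative to the maximum possible joint count,
    i.e., the smaller of the two marginal frequencies."""
    max_possible = min(n_a, n_b)
    return joint / max_possible if max_possible > 0 else 0.0


def pairwise_co_occurrence(studies: list[set[str]]) -> dict[tuple[str, str], float]:
    """Compute the ratio for every pair of labels, given one set of labels per study."""
    labels = sorted(set().union(*studies))
    counts = {label: sum(label in s for s in studies) for label in labels}
    ratios: dict[tuple[str, str], float] = {}
    for a, b in combinations(labels, 2):  # pairs come out in alphabetical order
        joint = sum(a in s and b in s for s in studies)
        ratios[(a, b)] = co_occurrence_ratio(joint, counts[a], counts[b])
    return ratios


# Reported education figures: 12 joint SCL/SDL studies, 20 SDL and 26 SCL studies.
print(co_occurrence_ratio(12, n_a=20, n_b=26))  # 0.6

# Hypothetical toy data (not from the review): one set of scenarios per study.
toy = [{"SDL", "SCL"}, {"SDL"}, {"SCL", "PLE"}]
print(pairwise_co_occurrence(toy))
# {('PLE', 'SCL'): 1.0, ('PLE', 'SDL'): 0.0, ('SCL', 'SDL'): 0.5}
```

Dividing by the smaller frequency means a ratio of 1 indicates that every study using the rarer scenario also used the other one, as with the two PLE studies that both used SDL. The same function applies to the tool combinations, for example five joint uses of website creation tools (7 studies) and knowledge organization and sharing tools (24 studies) give 5/7, or 71%.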

Study population. Studies in education investigated undergraduate students in 64.3% of cases (n = 27) and graduate students in 33.3% (n = 14). Four of these studies focused on both undergraduate and graduate students, and five further studies did not specify a study level. The distribution of study levels within the education sample did not deviate significantly from that of the overall sample (χ² = 4.984, p > .05), although the share of postgraduate students in education was higher than in the overall group (12.4%).

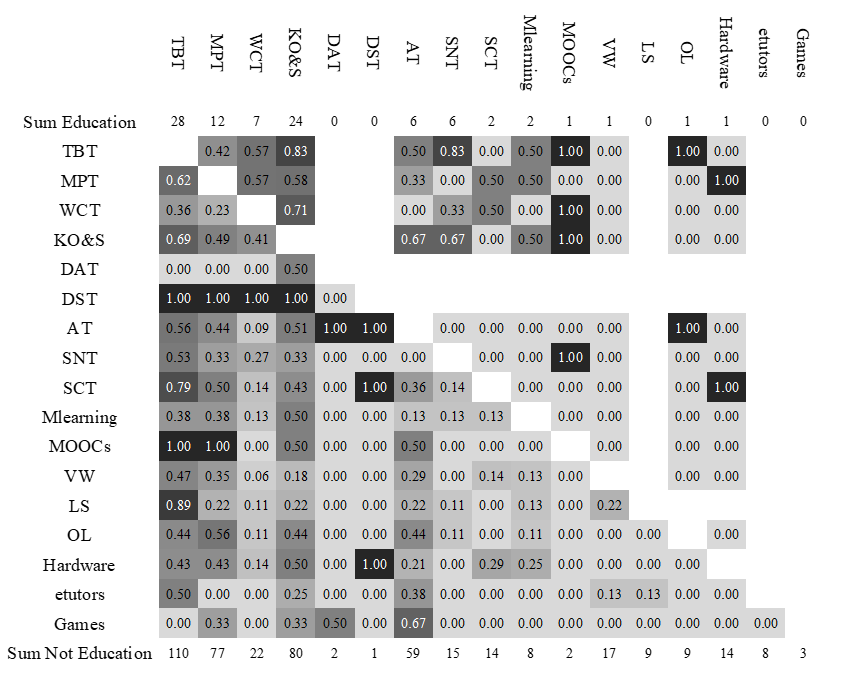

Technology tool use. The educational technology most frequently used across the education studies (see Figure 4) was text-based tools (66.7%, n = 28), followed by knowledge organization and sharing tools (57.1%, n = 24) and multimodal production tools (28.6%, n = 12). Website creation tools were used in seven studies (16.7%), whereas assessment tools and social networking tools were used in six studies each (14.3%).

The most frequent tool combinations were text-based tools with knowledge organization and sharing tools (20 of 24 studies) and text-based tools with social networking tools (five of six studies), each occurring in 83% of possible cases. Website creation tools and knowledge organization and sharing tools were used in combination in 71% of possible cases (five of seven studies). The combinations of website creation tools with assessment tools and of website creation tools with social networking tools each occurred in 67% of possible cases (four of six studies).

When comparing these findings with the non-education studies in the corpus (see Figure 5), it is notable that, in education, knowledge organization and sharing tools were used more often (by 17.3 percentage points), as were text-based tools (by 11.9 percentage points) and social networking tools (by 6.9 percentage points). In contrast, assessment tools (by 15.1 percentage points) and multimodal production tools (by 9.7 percentage points) were employed less often in education.

In regard to the combination of tools, text-based tools and knowledge organization and sharing tools were not only most often used, but also most often used together. Text-based tools and multimodal production tools were less often employed jointly in education research than in the non-education studies.

Website creation tools and knowledge organization and sharing tools were rarely used together in non-education studies (41% of possible cases). Finally, in education, multimodal production tools were used above average in combination with website creation tools, assessment tools, and knowledge organization and sharing tools.

Methodological characteristics. Quantitative and qualitative methods were combined in 40.5% of the studies (n = 17), whereas 33.3% employed qualitative methods only (n = 14), and the remaining 26.2% used solely quantitative methods (n = 11). Thus, the shares of mixed-methods and qualitative studies were higher than in the non-education studies (by 7.2 and 12.4 percentage points, respectively), but not significantly so (χ² = 5.987, p = .050).
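For readers checking the reported test, a chi-square statistic with two degrees of freedom has a closed-form upper-tail probability, p = exp(-χ²/2). Assuming df = 2 (three method categories compared across two groups, which the text does not state explicitly), the sketch below shows why χ² = 5.987 falls just short of the critical value at α = .05, which is -2 ln(.05), or about 5.991. The helper name is hypothetical.

```python
import math


def chi2_sf_df2(statistic: float) -> float:
    """Upper-tail p-value of a chi-square statistic with 2 degrees of freedom.

    For df = 2 the chi-square distribution is exponential with mean 2,
    so P(X >= x) = exp(-x / 2).
    """
    return math.exp(-statistic / 2)


critical_05 = -2 * math.log(0.05)  # critical value at alpha = .05
p = chi2_sf_df2(5.987)
print(f"critical value = {critical_05:.3f}, p = {p:.4f}")
# prints: critical value = 5.991, p = 0.0501
```

This closed form only holds for df = 2; other degrees of freedom require the general chi-square survival function.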

The two most frequently used data collection methods were surveys and document analysis (both n = 21, 50%), followed by log data (n = 12, 28.6%). While more studies in this sample employed qualitative rather than quantitative methods, the number of other qualitative methods used, such as interviews (n = 11, 26.2%), observations (n = 8, 19.0%), and focus groups (n = 5, 11.9%), was surprisingly small.

Document analysis within education accounted for 40% of the studies that used the method in the whole sample, suggesting its popularity for understanding student perceptions through rich, thick text. For example, Cook and Bissonette (2016), in their study of preservice teacher education, used Twitter to enhance collaboration. They introduced a course hashtag to enable easy tweet archiving and data retrieval and then applied grounded theory to identify emergent themes.

Surveys used by qualitative studies in the sample were all self-developed and often included course evaluations (e.g., Cook & Bissonnette, 2016) or short questionnaires on students’ opinions of using technology within their course (e.g., Leese, 2009). Quantitative studies predominantly used previously validated questionnaires, such as variations on the Learning Style Inventory by Smith and Kolb (1985; e.g., Index of Learning Styles, Felder & Soloman, 1994, as cited in Cheng & Chau, 2016), Rovai’s (2002) Classroom Community Scale (e.g., Chen & Chiou, 2014), and Keller and Subhiyah’s (1993) Course Interest Survey (e.g., Kim & Keller, 2011).

Theoretically Grounding Research on Student Engagement and Educational Technology

Of the 42 studies within this corpus, 18 (43%) did not use a theoretical framework, which has been recognized as an issue in previous literature and systematic reviews (e.g., Kaliisa & Picard, 2017; Lundin et al., 2018) as well as in the larger corpus of this systematic review. Of the 57% (n = 24) that did, four drew on Garrison, Anderson, and Archer’s (2000) Community of Inquiry framework (Gray & DiLoreto, 2016; Hemphill & Hemphill, 2007; Teng, Chen, Kinshuk, & Leo, 2012; Whipp & Lorentz, 2009); three on social constructivism (Cook & Bissonette, 2016; Coole & Watts, 2009; Ikpeze, 2007); and two on Wenger’s (1998) Community of Practice (Chandra & Chalmers, 2010; Ruane & Lee, 2016).

In the article by Hew (2015), which includes two studies, both Helsing, Drago-Severson, and Kegan’s (2004) constructive-developmental theory and Hofstede’s (2011) cultural dimensions classification were applied. Also reflective of the larger corpus was the number of studies that did not include a research question (36%, n = 15).

Interestingly, only five studies (12%) included a definition of student engagement, which is now considered necessary in any empirical research on engagement (Boekaerts, 2016). Park and Kim (2015) defined engagement as multifaceted and multidimensional, using the three dimensions of behavioral, cognitive, and emotional (affective) engagement, whereas Bolden and Nahachewsky (2015) considered engagement to have only affective and behavioral components. Both Bolden and Nahachewsky (2015) and Gray and DiLoreto (2016) defined engagement as participation and involvement in the learning process, while Boury, Hineman, Klentizin, and Semich (2013) defined it as interaction with others (especially peers) and with meaningful tasks.

On the other hand, Hatzipanagos and Code (2016) defined engagement as the time and effort that students spend on learning activities and stressed its distinction from motivation. The variation in these five definitions is a prime example of how disparate the field’s notion of engagement is (Henrie et al., 2015), and reiterates the fuzzy character of the concept (see Bond & Bedenlier, 2019).

Student Engagement and Educational Technology in the Field of Education

The 42 studies in this corpus were coded on facets of behavioral, affective, and cognitive engagement. Overall, 37 studies (88.1%) included evidence of behavioral engagement (see Table 3), 36 (85.7%) of affective engagement, and 29 (69.0%) of cognitive engagement, with 28 studies (66.7%) identifying all three engagement dimensions. The six most frequently cited facets of engagement were positive interactions with peers/teachers, participation/involvement, learning from peers, confidence, enjoyment, and achievement (see Table 4). Some theorists consider achievement an outcome of engagement rather than an aspect of it (e.g., Kahu, 2013), but we decided to code achievement as a facet of engaged learning.

Table 3

Student Engagement Frequency Descriptive Statistics

| Type | Frequency | Relative Frequency | M | SD |
|---|---|---|---|---|
| Behavioral Engagement | 37 | 0.88 | 1.73 | 1.10 |
| Affective Engagement | 36 | 0.86 | 2.44 | 2.30 |
| Cognitive Engagement | 29 | 0.69 | 2.76 | 1.92 |
| Overall | 3 | 0.07 | 1 | - |

Table 4

Top Five Engagement Facets Across the Three Dimensions (n = 42)

| Rank | BE | n | % | AE | n | % | CE | n | % |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Participation/interaction/involvement | 21 | 50.0% | Positive interactions with peers/teachers | 24 | 57.1% | Learning from peers | 16 | 38.1% |
| 2 | Confidence | 12 | 28.6% | Enjoyment | 11 | 26.2% | Deep learning; self-regulation; positive self-perceptions & self-efficacy | 8 | 19.0% |
| 3 | Achievement | 11 | 26.2% | Enthusiasm; sense of connectedness | 7 | 16.7% | Critical thinking | 7 | 16.7% |
| 4 | Positive conduct; effort; attention | 4 | 9.5% | Interest | 6 | 14.3% | Operational reasoning; positive perceptions of teacher support | 5 | 11.9% |
| 5 | Assume responsibility | 3 | 7.1% | Positive attitude towards learning; motivation | 5 | 11.9% | Staying on task/focus | 4 | 9.5% |

Note. BE = behavioral engagement; AE = affective engagement; CE = cognitive engagement.

Three studies (7%) found that educational technology enhanced engagement overall but did not specify the dimensions or facets being referred to; these studies were coded separately from the other facets. For example, Gray and DiLoreto (2016) developed the Student Learning and Satisfaction in Online Learning Environments Instrument (SLS-OLE), which includes a Student Engagement scale ranging from 1.00 to 6.00. They did not explicitly define which facets or domains were being measured, although their definition of student engagement included behavioral and affective aspects. In this postgraduate educational leadership course, 187 students rated their engagement at a mean of 4.98 (SD = 0.86), and the study found a strong, significant relationship between student engagement and learner interaction (r = .72, p < .01).

Behavioral engagement and educational technology. Behavioral engagement was the most frequently reported dimension of engagement, although arguably also the most frequently measured, as it manifests in observable actions; seven different facets were identified as a result of educational technology (see Table 4). By far the most cited instance of behavioral engagement was participation/interaction/involvement (50%, n = 21), present in 50% of studies using text-based tools, knowledge organization and sharing tools, and social networking tools, and in 66.7% of studies involving assessment tools (see Table 5).

Table 5

Relative Frequency (Percentages) of Behavioral Engagement Facets by Technology Type

| Facets | All | TBT | MPT | WCT | KO&S | AT | SNT |
|---|---|---|---|---|---|---|---|
| Participation/Interaction/Involvement | 50.0 | 50.0 | 41.7 | 42.9 | 50.0 | 66.7 | 50.0 |
| Confidence | 28.6 | 39.3 | 25.0 | 28.6 | 41.7 | 16.7 | 16.7 |
| Achievement | 26.2 | 25.0 | 25.0 | 14.3 | 20.8 | 66.7 | 16.7 |
| Following Rules | 9.5 | 14.3 | 0.0 | 0.0 | 12.5 | 0.0 | 16.7 |
| Effort | 9.5 | 3.6 | 16.7 | 0.0 | 4.2 | 0.0 | 16.7 |
| Attention | 9.5 | 7.1 | 16.7 | 0.0 | 8.3 | 16.7 | 33.3 |
| Assume Responsibility | 7.1 | 7.1 | 8.3 | 0.0 | 8.3 | 33.3 | 16.7 |

Note. TBT = text-based tools; MPT = multimodal production tools; WCT = website creation tools; KO&S = knowledge organization and sharing tools; AT = assessment tools; SNT = social networking tools.

Participation was captured in several studies by means of frequency data related to access to discussion boards (e.g., Gibbs & Bernas, 2008; Hemphill & Hemphill, 2007; Teng et al., 2012). Coole and Watts (2009) found their preservice teacher candidates’ participation ranged from passive consumption of posts (51%, n = 79) to forming professional communities (9%, n = 14).

Achievement was likewise found in 66.7% of studies using assessment tools. For example, students who used a question-embedded online interactive video environment spent more time on and interacted more with the learning material, and achieved significantly higher learning results (Vural, 2013). Chen and Chiou (2014) also found higher mean exam scores among students enrolled in a course using online discussion boards than among students attending a solely face-to-face course.

Students in an Introductory Educational Technology course also showed increased behavioral engagement with a Virtual Tutee System, along with an improving trend in reading performance (Park & Kim, 2015). However, Hatzipanagos and Code (2016) found that, while quizzes were significantly more likely to be completed when they were mandatory, students were more likely to engage in peer-to-peer sharing of resources when open badge participation was optional.

Social networking tools such as Twitter allow students to interact with a wider audience outside of the classroom and engage within broader communities of practice, leading to increased enjoyment (Cook & Bissonette, 2016; Saadatmand & Kumpulainen, 2013). More closed environments, such as Ning (Arnold & Paulus, 2010) and Facebook groups (Deng & Tavares, 2013), can enable vibrant discussions in which less confident students feel more at ease to contribute. Cheng, Su, Zhange, and Yang (2015) found that undergraduate education students’ initiative and prompt interaction in discussions were directly linked to their overall course achievement. Cheng and Chau (2016) also found that participation in networked learning and materials development, rather than using the LMS to access course materials, was more likely to promote achievement and satisfaction. Jabbour (2014) found that mobile learning not only stimulated interaction between peers but also resulted in improved learning outcomes.

Using technology within preservice teacher courses enabled students to feel more confident both in their technology skills and in their ability to apply those skills when teaching their own students. Studies that used asynchronous text-based tools, such as discussion forums (e.g., Smidt, Bunk, McGrory, Li, & Gatenby, 2014) and wikis (e.g., Chandra & Chalmers, 2010), and website creation tools such as blogs (e.g., Granberg, 2010), were particularly confidence-building for students, because they could compose and edit their posts before publishing them, which also promoted deeper reflection and attention.

Students also reported exerting more effort on tasks that were authentic and held personal meaning for them (e.g., Bolden & Nahachewsky, 2015). First- and third-year preservice teachers in an undergraduate education program found a peer mentoring discussion forum on Blackboard to be an excellent tool for sharing classroom experiences, concerns, and achievements (Ruane & Lee, 2016). Student interactions were dense, and students developed confidence in sharing with their peers, alongside growing teaching confidence, a developing language of empathy, and connectedness.

In this case, the facilitators did not moderate participation, asking only that students post two to three times per week, which allowed a more student-led discussion. Students in the study by Hew (2015) also appreciated this approach. Sharma and Tietjen (2016) found that discussions were more frequent, open, and equal when students participated within one course blog, as opposed to having individual blogs and project groups; nevertheless, instructor feedback and presence within online environments remain an important factor in student participation (Lee & Lee, 2016).

Affective engagement and educational technology. Educational technology had a positive effect on 11 different facets of affective engagement in this sample (see Table 6). Of these, positive interactions with peers/teachers was by far the most cited affective facet (57.1%, n = 24) and the highest overall, with enthusiasm an important factor in developing trust within group work situations (Bulu & Yildirim, 2008). Studies that used social networking tools (n = 6) reported particularly high levels of positive interaction and enjoyment.

Table 6

Relative Frequency (Percentages) of Affective Engagement by Technology Type

| Affect | All | TBT | MPT | WCT | KO&S | AT | SNT |
|---|---|---|---|---|---|---|---|
| Enthusiasm | 16.7 | 7.1 | 16.7 | 0.0 | 16.7 | 33.3 | 33.3 |
| Interest | 14.3 | 10.7 | 8.3 | 0.0 | 16.7 | 16.7 | 16.7 |
| Sense of belonging | 9.5 | 14.3 | 0.0 | 14.3 | 16.7 | 0.0 | 33.3 |
| Positive interactions | 57.1 | 57.1 | 50.0 | 57.1 | 54.2 | 33.3 | 83.3 |
| Positive attitude | 11.9 | 7.1 | 8.3 | 0.0 | 8.3 | 16.7 | 0.0 |
| Connectedness | 16.7 | 17.9 | 33.3 | 14.3 | 25.0 | 16.7 | 33.3 |
| Pride | 9.5 | 7.1 | 16.7 | 14.3 | 8.3 | 0.0 | 16.7 |
| Satisfaction | 9.5 | 7.1 | 8.3 | 0.0 | 8.3 | 0.0 | 16.7 |
| Wellbeing | 9.5 | 10.7 | 0.0 | 0.0 | 12.5 | 0.0 | 16.7 |
| Enjoyment | 26.2 | 17.9 | 8.3 | 14.3 | 25.0 | 33.3 | 66.7 |
| Motivation | 11.9 | 10.7 | 8.3 | 0.0 | 12.5 | 16.7 | 16.7 |

Note. TBT = text-based tools; MPT = multimodal production tools; WCT = website creation tools; KO&S = knowledge organization and sharing tools; AT = assessment tools; SNT = social networking tools.

Positive interactions also occurred using simple communication forms such as email to address students in a personalized way (Alcaraz-Salarirche, Gallardo-Gil, Herrera-Pastor, & Serván-Núñez, 2011), and wikis, which were seen as having “helped the instructors better understand the students and it helped the students better understand the experience” (Boury et al., 2013, p. 76) during an international teaching placement.

Students were found to be very supportive of others in a web-based course and interacted more with the instructor than in the corresponding face-to-face course (Mentzer, Cryan, & Teclehaimanot, 2007). Hexom and Menoher (2012) argued that in online learning settings the quality of interaction was also related to the length of a course, because longer courses offered more opportunities for interaction and thus allowed a more personal relationship between students and the instructor to develop (p. 149).

Using social networking tools that students were already familiar with, such as Facebook (e.g., Deng & Tavares, 2013; Lee & Lee, 2016) and Twitter (e.g., Saadatmand & Kumpulainen, 2013), removed technological skill barriers and encouraged more informal networks to develop (Cook & Bissonette, 2016), with community developing more easily when students could link contributions to specific student profiles (Arnold & Paulus, 2010). Apps with push notifications meant that student queries were answered quickly, enabling students to easily “seek support” and “solve problems” with each other (p. 172), which led to an enhanced sense of connectedness and belonging.

Enjoyment was also found in 33.3% of studies using assessment tools, with Park and Kim’s (2015) study of a Virtual Tutee System finding that students were more likely to experience enjoyment, and less likely to experience boredom and anger. One student said, “I have really been enjoying these posts. I have not posted a thread because what I have read has answered a lot of my questions” (Ruane & Lee, 2016, p. 91). Enjoyment does not, therefore, automatically mean active participation. Mixed results were found in the study by Grimley, Green, Nilsen, and Thompson (2012), whose participants predominantly stated that they enjoyed the computer game used for instructional purposes; closer analysis of high- and low-achieving students, however, revealed different levels of concentration and individual perceptions of study success (p. 635).

Interest is a facet that was investigated, for example, in its relation to concentration when using mobile learning (Yang, Li, & Lu, 2015). One student in a TESOL course found it particularly effective when students were allowed to drive the discussion in a weekly class forum, being responsible for choosing an article, and asking their peers questions, as these activities related discussion directly to their own interests (Smidt et al., 2014). These studies highlight the importance of meaningful tasks.

Students in a blended course were more motivated to contribute to the wiki if other students contributed (Yusop & Basar, 2014), and Kim and Keller’s (2011) study on the use of motivational and volitional email messages in preservice teacher education found that, while the messages did not have a significant effect on motivation, students’ volition and attitudes toward technology improved.

Cognitive engagement and educational technology. Found slightly less often than the other two dimensions in this sample, cognitive engagement was coded through 10 different facets (see Table 7), the most predominant being learning from peers. It was not surprising that learning from peers was found in 66.7% of studies using social networking tools, as opposed to only 16.7% of studies using assessment tools.

Table 7

Relative Frequency (Percentages) of Cognitive Engagement Facets by Technology Type

| Cognitive Engagement | All | TBT | MPT | WCT | KO&S | AT | SNT |
|---|---|---|---|---|---|---|---|
| Learning from peers | 38.1 | 46.4 | 50.0 | 57.1 | 50.0 | 16.7 | 66.7 |
| Deep learning | 19.0 | 10.7 | 16.7 | 28.6 | 20.8 | 16.7 | 50.0 |
| Self-regulation | 19.0 | 21.4 | 16.7 | 0.0 | 25.0 | 16.7 | 16.7 |
| Positive self-perception & self-efficacy | 19.0 | 21.4 | 33.3 | 42.9 | 25.0 | 16.7 | 33.3 |
| Follow-through/care/thoroughness | 19.0 | 14.3 | 25.0 | 28.6 | 20.8 | 33.3 | 33.3 |
| Critical thinking | 16.7 | 17.9 | 8.3 | 14.3 | 20.8 | 16.7 | 16.7 |
| Operational reasoning | 11.9 | 7.1 | 33.3 | 14.3 | 12.5 | 0.0 | 0.0 |
| Positive perception of teacher support | 11.9 | 14.3 | 8.3 | 0.0 | 12.5 | 0.0 | 0.0 |
| Staying on task/focus | 9.5 | 7.1 | 16.7 | 0.0 | 4.2 | 16.7 | 0.0 |
| Investment in learning | 7.1 | 7.1 | 16.7 | 14.3 | 8.3 | 16.7 | 16.7 |

Note. TBT = text-based tools; MPT = multimodal production tools; WCT = website creation tools; KO&S = knowledge organization and sharing tools; AT = assessment tools; SNT = social networking tools.

The fact that students learned from their peers was apparent in a number of cases when the respective technology enabled students to share their work and, thereby, learn from others, such as via social networking sites (Arnold & Paulus, 2010). Bolden and Nahachewsky (2015) stated that students “benefitted from this sharing [of podcasts] by having the opportunity to celebrate achievement, collaboratively develop knowledge, represent selves and connect to others” (p. 22). Giving feedback on other groups’ work (Chandra & Chalmers, 2010), being able to follow what others are doing (Cook & Bissonnette, 2016) and essentially practicing what one of Ruane and Lee’s (2016) participants found to be “learning ‘from’ her classmates, as opposed to offering advice and ideas ‘to’ others” (p. 91), are activities this category revolves around.

Whereas learning from peers is clearly understood as a social process, deep learning was, naturally, documented primarily as an internal process. One preservice teacher candidate in Quinn and Kennedy-Clark (2015) said, “I took more in than I normally do” (p. 7), with reference to using prerecorded lectures in the context of flipped learning. Eighty-one percent of students in the course (n = 29) approved of this concept as such.

A student in the study by Deng and Tavares (2013) stated that the very act of concisely typing out one’s thoughts led to a thorough recapitulation of course content (p. 171). The system used in the course (Moodle), however, was not perceived as encouraging engagement in the discussion forums per se, and the perceived benefit of contributing to the forums also depended strongly on whether peers engaged.

Online discussions, as a means to foster dialogue within a course, were used in the study by Szabo and Schwartz (2011), who found statistically significant differences in critical thinking abilities between two student cohorts, one taught in traditional mode and the other with added online discussions. Pre- and posttests revealed that critical thinking significantly increased for students in the latter course (p. 85).

Self-regulation is also one of the facets of cognitive engagement indicated in a number of studies, such as the one by Shonfeld and Ronen (2015), who investigated an online science education course enrolling excellent, average, and learning-disabled students. The study found that the latter group indicated a growth in their self-directed learning levels between pre- and postquestionnaire (p. 19).

The aspect of more personalized learning was stressed by a student in the study by Quinn and Kennedy-Clark (2015), for whom controlling the recorded video lecture made it possible to “go at your own pace” (p. 8). Related to self-regulation is the category of follow-through, care, and thoroughness, which was expressed through students reading their peers’ blogs even when this was not required. The course instructor thus concluded, “In my view, educational technology is most effective when students make it their own and initiate some use by themselves” (p. 194). This finding is similar to the result of Bolden and Nahachewsky’s (2015) study on music education, in which one student went back over her self-created podcast until she was satisfied that it expressed what she really wanted to say in this assignment (p. 25).

Positive self-perception and self-efficacy were reported in several studies regarding students’ learning how to confidently use technology. Examples include the following:

- Szabo and Schwartz’s (2011) study, in which a student came to want to apply technology in their own teaching,

- Saadatmand and Kumpulainen (2013) reporting one student’s increased competency in using tools through working jointly with her peers in personal learning environments,

- Chandra and Chalmers (2010) describing one student who felt more competent about wikis and confident in setting them up (p. 47), and

- Bolden and Nahachewsky’s (2015) mature student growing accustomed to using new software (p. 24).

Thus, while other areas of positive self-perception were also reported in the studies, students’ knowledge about technology and their use of it emerged as one of the most significant challenges that students were able to overcome.

Student Disengagement and Educational Technology in the Field of Education

Studies in this sample were also coded on 12 different facets of behavioral, affective, and cognitive disengagement. Overall, 17 studies (41%) reported affective, 12 (29%) behavioral, and 10 (24%) cognitive disengagement (see Table 8). The five most frequently cited disengagement facets were frustration, disappointment, worry/anxiety, avoidance, and half-hearted/task incompletion (see Table 9).

Table 8

Student Disengagement Frequency Descriptive Statistics

| Disengagement | Frequency | Relative Frequency | M | SD |
|---|---|---|---|---|
| Behavioral | 12 | 0.29 | 1.83 | 1.11 |
| Affective | 17 | 0.41 | 2.00 | 1.12 |
| Cognitive | 10 | 0.24 | 2.00 | 0.82 |

Table 9

Top Five Disengagement Facets Across the Three Dimensions

| Rank | BD | n | % | AD | n | % | CD | n | % |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Half-hearted or task incompletion | 5 | 11.9% | Frustration | 8 | 19.0% | Avoidance | 5 | 11.9% |
| 2 | Unfocused or inattentive; Distracted | 3 | 7.1% | Disappointment | 7 | 16.7% | Opposition or rejection; Pressured; Other | 4 | 9.5% |
| 3 | | | | Worry or anxiety | 6 | 14.3% | | | |
| 4 | | | | Other | 5 | 11.9% | | | |
| 5 | | | | Boredom | 3 | 7.1% | | | |

Note. BD = behavioral disengagement; AD = affective disengagement; CD = cognitive disengagement. Facets listed together in one cell are tied at the same frequency.
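The percentages in Table 9 can be checked against the reported counts with a short calculation. The Python sketch below is illustrative only and assumes that each percentage is the number of studies reporting a facet divided by the 42 studies in the sample, rounded to one decimal place; the names `TOTAL_STUDIES`, `TOP_FACETS`, and `relative_frequency` are hypothetical and not part of the review’s coding procedure.

```python
# Illustrative sketch (assumption): each percentage in Table 9 is
# n / 42 studies, expressed as a percentage rounded to one decimal place.
TOTAL_STUDIES = 42  # size of the education sample reviewed

# Hypothetical encoding of Table 9: (dimension, facet, number of studies)
TOP_FACETS = [
    ("BD", "Half-hearted or task incompletion", 5),
    ("BD", "Unfocused or inattentive", 3),
    ("BD", "Distracted", 3),
    ("AD", "Frustration", 8),
    ("AD", "Disappointment", 7),
    ("AD", "Worry or anxiety", 6),
    ("AD", "Other", 5),
    ("AD", "Boredom", 3),
    ("CD", "Avoidance", 5),
    ("CD", "Opposition or rejection", 4),
    ("CD", "Pressured", 4),
    ("CD", "Other", 4),
]


def relative_frequency(n: int, total: int = TOTAL_STUDIES) -> float:
    """Return n as a percentage of total, rounded to one decimal place."""
    return round(100 * n / total, 1)


if __name__ == "__main__":
    # Print each facet with its count and recomputed percentage.
    for dimension, facet, n in TOP_FACETS:
        print(f"{dimension}  {facet:<35} n = {n:>2}  {relative_frequency(n):>4}%")
```

The per-technology columns in Tables 6, 7, and 10 to 12 appear to use the number of studies of each technology type as the denominator instead (for example, 5 of the 6 social networking tool studies gives 83.3%), so the same function would apply with a different `total`.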

Behavioral disengagement and educational technology. Behavioral disengagement was indicated by only three facets (see Table 10), the most prominent being half-hearted/task incompletion, particularly when using social networking tools. In these studies, engaging students in online discussion forums and on social media was difficult, because students did not want to share their ideas publicly (Deng & Tavares, 2013), did not want to engage with other group members (Granberg, 2010; Ikpeze, 2007), or found the extra online requirements onerous, as one student explained: “I am not going to spend more time with the online class than I have to. I will just write my post, fulfill the requirements, and then get on with my day” (Smidt et al., 2014, p. 58). Students in a preservice teacher TESOL course found that using Ning to chat with class members, especially while physically sitting in that class, lacked authenticity, although some students acknowledged that it helped less confident students to express their opinions (Arnold & Paulus, 2010).

Table 10

Relative Frequency (Percentages) of Behavioral Disengagement Facets by Technology Type

| Disengagement | All | TBT | MPT | WCT | KO&S | AT | SNT |
|---|---|---|---|---|---|---|---|
| Half-hearted | 11.9 | 14.3 | 8.3 | 28.6 | 16.7 | 0.0 | 33.3 |
| Unfocused | 7.1 | 0.0 | 8.3 | 0.0 | 4.2 | 0.0 | 0.0 |
| Distracted | 7.1 | 0.0 | 8.3 | 0.0 | 4.2 | 0.0 | 0.0 |

Note. TBT = text-based tools; MPT = multimodal production tools; WCT = website creation tools; KO&S = knowledge organization and sharing tools; AT = assessment tools; SNT = social networking tools.

Students in a flipped learning preservice teacher literacy unit found themselves occasionally unfocused and easily distracted while watching video lectures (Quinn & Kennedy-Clark, 2015), which highlights the importance of ensuring good quality video production and keeping videos short (see Akçayır & Akçayır, 2018). In a study on the use of mobile phone devices within an undergraduate education course, some students were observed using them to play games or message friends during class, rather than completing assigned tasks (Jabbour, 2014). Likewise, students found using a computer game enjoyable and useful in reinforcing learning concepts in an educational psychology unit, although some admitted difficulty staying focused during gameplay and others questioned “how much [they were] taking in” (Grimley et al., 2012, p. 633).

Affective disengagement and educational technology. Five affective disengagement facets were coded (see Table 11), with frustration being the most frequent (19.0%, n = 8). Frustration was expressed in various ways across the studies, often relating to technical aspects of the technology used, but also to the human interactions around it. Students in the study by Deng and Tavares (2013) used the words “very troublesome” and “totally difficult to use” when referring to Moodle (p. 170), or complained about “bugs” (Grimley et al., 2012, p. 633) in the system. One student was “openly frustrated about her group members’ lackluster attitude to the discussions” (Ikpeze, 2007, p. 395), and other students complained about the disorganized way a specific class was held (Whipp & Lorentz, 2009).

Table 11

Relative Frequency (Percentages) of Affective Disengagement Facets by Technology Type

| Disengagement | All | TBT | MPT | WCT | KO&S | AT | SNT |
|---|---|---|---|---|---|---|---|
| Frustration | 19.0 | 14.3 | 25.0 | 14.3 | 16.7 | 0.0 | 33.3 |
| Disappointment | 16.7 | 14.3 | 25.0 | 14.3 | 16.7 | 0.0 | 33.3 |
| Anxiety | 14.3 | 17.9 | 8.3 | 0.0 | 20.8 | 0.0 | 33.3 |
| Other | 11.9 | 10.7 | 8.3 | 0.0 | 16.7 | 16.7 | 33.3 |
| Boredom | 7.1 | 3.6 | 0.0 | 0.0 | 4.2 | 16.7 | 0.0 |

Note. TBT = text-based tools; MPT = multimodal production tools; WCT = website creation tools; KO&S = knowledge organization and sharing tools; AT = assessment tools; SNT = social networking tools.

In the study by Abendroth, Golzy, and O’Connor (2011), one student tried to create a video using overly advanced techniques and was frustrated when the video did not work as expected (p. 150). Students in another study reported that they always needed to rely on tutorials to be able to correctly use the video analysis tool that was part of the course setup (Shepherd & Hannafin, 2011, p. 201).

Disappointment also related primarily to interactions with others, such as the impression that others were not particularly interested in the given tasks (Bulu & Yildirim, 2008) or that discussion strands were left “hanging in the air” (Granberg, 2010, p. 10). Interestingly, in two cases worry/anxiety appeared to be linked to the instructors’ presence in the Moodle environment (Deng & Tavares, 2013) or their facilitation of discussions (Hew, 2015), with students then worrying about not being knowledgeable enough and “posting the ‘wrong things’” (p. 30). One student also commented that boredom can arise when instructors, by overly regulating discussion boards, seem to rule out “any chance for creativity or individuality” (Smidt et al., 2014, p. 52).

Cognitive disengagement and educational technology. Only three cognitive facets of disengagement were coded in this sample (see Table 12), alongside the code other, with avoidance being the most prominent, followed closely by opposition/rejection and pressured. Student avoidance of tasks was particularly seen in studies that involved social networking tools.

Table 12

Relative Frequency (Percentages) of Cognitive Disengagement Facets by Technology Type

| Disengagement | All | TBT | MPT | WCT | KO&S | AT | SNT |
|---|---|---|---|---|---|---|---|
| Rejection | 9.5 | 7.1 | 8.3 | 0.0 | 12.5 | 0.0 | 16.7 |
| Avoidance | 11.9 | 14.3 | 0.0 | 14.3 | 16.7 | 0.0 | 50.0 |
| Pressured | 9.5 | 14.3 | 0.0 | 0.0 | 16.7 | 0.0 | 16.7 |
| Other | 9.5 | 10.7 | 8.3 | 14.3 | 4.2 | 0.0 | 16.7 |

Note. TBT = text-based tools; MPT = multimodal production tools; WCT = website creation tools; KO&S = knowledge organization and sharing tools; AT = assessment tools; SNT = social networking tools.

Preservice teachers in a blended teachers of English to speakers of other languages (TESOL) course did not interact with other students on the class Ning site, beyond what was required (Arnold & Paulus, 2010). This finding also appeared in studies using institutional LMS, such as Blackboard (e.g., Ikpeze, 2007) and Moodle (e.g., Deng & Tavares, 2013), with some students citing family or work commitments as the reason for their lack of participation (Leese, 2009). In a study investigating preservice teacher perceptions of Moodle and Facebook (Deng & Tavares, 2013), students showed minimal interest in using the LMS, seeing it more as a tool to use for assimilative tasks, such as downloading course materials, whereas a Facebook group was “more immediate and direct than Moodle” and was easier to use as it was already “part of [their] lives” (p. 171).

In a study using Twitter (Cook & Bissonette, 2016), students found it difficult to move their ideas into a more public domain online, with one student saying, “I talk in class because it helps me learn, it’s expected, and I know my classmates are listening to me, but on Twitter I wasn’t always sure who I was talking to” (p. 102). This factor led some students to oppose use of the tool. Some students opposed the idea of engaging in online discussions because they wanted to avoid confrontation resulting from miscommunication (Ikpeze, 2007), and others felt pressured when having to interact in a teacher-facilitated discussion (Hew, 2015).

Students in two studies (Quinn & Kennedy-Clark, 2015; Smidt et al., 2014), however, believed that too much technology in general was not a good thing. One student said that they would be “teach[ing] students in a real classroom in a real school, in person” (Smidt et al., 2014, p. 54) and therefore saw engaging with technology as a waste of time, while a student in a blended course stated that they “didn’t apply for online distance uni[versity]” (Quinn & Kennedy-Clark, 2015, p. 7).

Discussion

This article reviewed 42 publications focused on how educational technology affects student engagement in higher education within the field of education. This subset was analyzed in relation to the larger systematic review corpus, in order to identify specific characteristics pertaining to educational technology use and student engagement in this field.

Study Contexts and Methodology

That the vast majority of studies stemmed from undergraduate teacher education confirms the focus of prior educational technology research on undergraduate students (e.g., Hew & Cheung, 2013). It could also reflect the increasing realization of the importance of preservice education programs in preparing preservice teachers to integrate educational technology in the classroom (Admiraal et al., 2017; Mouza, 2019).

Unfortunately, five studies within the sample did not provide full information about the study level, which makes it difficult for readers to judge whether the findings apply to their own context. Future empirical research needs to report details about participants and study context (country, institution, study level, etc.) more precisely and explicitly.

A high number of studies employed qualitative and mixed methods; however, comparatively few studies used interviews, observations, and focus groups. Given the complex nature of student engagement (see Bond & Bedenlier, 2019), it is important to use data collection methods that provide thick descriptions of how people perceive educational technology, rather than relying solely on statistical data, which tend to capture mainly aspects of behavioral engagement (Fredricks et al., 2004; Henrie et al., 2015).

As this review found, enjoyment does not always equal active participation, nor does frequent access to course material necessarily equate to positive affective engagement. Caution should also be exercised when relying on self-developed student evaluation surveys as the sole data source, as they can raise concerns about construct validity and, in turn, produce problematic results (Döring & Bortz, 2016).

As with the overall sample and research in educational technology, generally (Bond, 2018; Bond, Zawacki-Richter, & Nichols, 2019), countries from the global south were underrepresented. Possibly, the search strategy and the sampling employed produced this result, as we focused on English-language publications, indexed within four databases. The inclusion of databases specific to those regions (e.g., African Journals Online, www.ajol.info) might have resulted in a higher proportion of included articles and should be considered vital when undertaking future reviews.

Grounding Education Research in Theory

As found in other reviews (e.g., Henrie et al., 2015), and within the larger corpus, student engagement remains an elusive and complex concept in the field of education, with only five studies (12%) in this sample including a definition of engagement. While this finding is possibly due to the search strategy employed (i.e., we did not search on the term “student engagement” explicitly), it may also be due to issues of differing conceptualization (Tai, Ajjawi, Bearman, & Wiseman, 2020).

Due to the ongoing disagreements surrounding this metaconstruct, articles investigating aspects of engagement must include a definition of the researchers’ understanding to enable the study results to be easily interpreted (Appleton et al., 2008; Christenson, Reschly, & Wylie, 2012). Studies focusing on just one aspect of engagement should also be related to the larger framework of student engagement (Bond & Bedenlier, 2019) and consider how they are connected, as engagement and disengagement exist on a continuum (Pekrun & Linnenbrink-Garcia, 2012).

Mirroring current conversations within the field of educational technology (e.g., Castañeda & Selwyn, 2018; Crook, 2019; Hew, Lan, Tang, Jia, & Lo, 2019), 43% of the articles in this sample did not use a theoretical framework. Those studies that did drew heavily on approaches related to constructivist theory and practice. The use of theories such as the Community of Inquiry framework, social constructivism, and Communities of Practice, together with the social collaborative learning scenarios built on them, is consistent with and reflective of an overarching trend in educational technology research of giving students the opportunity to shape their learning processes (Bond et al., 2019). However, as emerged in some studies within the sample (e.g., Smidt et al., 2014), the use of technology should then also integrate with these approaches and not be overly prescriptive, so that students are encouraged to take responsibility for both their learning and their construction of meaning.

Also surprising here was that no articles in this sample used the Technological Pedagogical Content Knowledge (or technology, pedagogy, and content knowledge; TPACK) framework (Koehler & Mishra, 2005), which was developed in response to precisely this lack of theory guiding the integration of technology within education (Rosenberg & Koehler, 2015). Another systematic review (Voogt, Fisser, Pareja Roblin, Tondeur, & van Braak, 2013), using the exact same four databases as the present review, found and synthesized 44 empirical journal articles on the use and measurement of TPACK published up to 2011. This omission was likely due to either the sampling strategy or the lack of treatment of student engagement within the articles. Articles using TPACK might have been more focused on teacher agency and teacher professional development. An updated search for literature from 2017-2019 would likely find an increased number of articles using or investigating TPACK.

Educational Technology and Student Engagement and Student Disengagement

Across the 42 education studies, engagement was identified more often than disengagement, with behavioral and affective engagement being the two most prevalent dimensions. Establishing a causal relationship between an application of technology and a specific facet of engagement is not possible, but tentative conclusions can be drawn. Reflecting the social nature of learning in education, the facet most often found for cognitive engagement was learning from peers. This facet was related to a variety of tools, with social networking tools and website creation tools showing the highest values (see Table 7).

Facets of behavioral engagement were particularly evident when text-based tools, knowledge organization and sharing tools, and social networking tools were used. While participation/interaction/involvement and achievement were related to assessment tools, assessment tools were not overly conducive to promoting other behavioral engagement.

In terms of affective engagement, knowledge organization and sharing tools and social networking tools were particularly effective, with social networking tools, not surprisingly, resulting in high numbers of studies indicating positive interactions with peers and teachers, as well as enjoyment. At the same time, social networking tools also emerged as somewhat ambivalent, as they were also related to half-heartedness as a facet of behavioral disengagement and to avoidance as a facet of cognitive disengagement.

Thus, technology in education does not guarantee better learning per se (Tamim, Bernard, Borokhovski, Abrami, & Schmid, 2011) and might even be regarded as “unhelpful” (Selwyn, 2016, p. 1008) by students. Selwyn identified “distraction” and “difficulty” (p. 1010) as two major reasons why technology can be considered “unhelpful.” Distraction and difficulty were also found in this review, when students reported being distracted while watching educational videos (Quinn & Kennedy-Clark, 2015) or frustrated when technology was considered a burden rather than an asset.

This review has shown that frustration was the facet of disengagement most often identified across the 42 studies (see Table 9). Students reported frustration related to the technical aspects of the technology itself (e.g., Deng & Tavares, 2013; Shepherd & Hannafin, 2011), but the same feeling also arose from their limited ability to use certain functions of it (e.g., Abendroth et al., 2011). Consistent with other studies in the field, these findings reiterate the perceived challenge of educational technology use in teacher education, with teacher candidates often having a hard time adjusting to technology (Tondeur et al., 2012).

As studies in this review have also shown, students gain confidence when using technology as part of a course and are subsequently more inclined to use technology themselves upon entering the K-12 classroom (e.g., Chandra & Chalmers, 2010; Smidt et al., 2014). Acknowledging the ambivalence of educational technology use in this regard also entails further consideration of disengagement, given the various conceptualizations identified in the systematic review by Chipchase et al. (2017), ranging from nonengagement/nonparticipation to a multifaceted construct. Future studies, then, must further delineate disengagement and explore how educational technology affects it, in order to provide a more balanced and holistic picture of technology use in education.

Deviating from the overall corpus, most of the scenarios in education were based on hybrid approaches, combining face-to-face formats with the use of digital tools or online elements. Interestingly, the flipped classroom as a distinct form of blended learning was only employed in one study in this corpus (Quinn & Kennedy-Clark, 2015). Hence, given the increased use of flipped learning in K-12 education and its positive effect on student engagement (e.g., Akçayır & Akçayır, 2018), integrating blended approaches more prominently in teacher education seems advisable.

As teacher candidates are also likely to implement flipped learning in their own classrooms, exploring it during preservice education can provide time for experimentation, tweaking, and feedback (Admiraal et al., 2017). Furthermore, courses offered solely online were explored less often in this education sample. Countries with established distance education programs (e.g., Australia) have been offering online teacher education courses for some time, but many other higher education systems might only recently have embarked on this journey (Qayyum & Zawacki-Richter, 2018). Therefore, further research is warranted into the use of educational technology within online teacher education across different contexts.

This review highlighted the importance of considering students’ technological skills and knowledge and of providing students with adequate training and preparation in the tools used in courses; otherwise, frustration and disengagement result. It is also important to help students overcome initial concerns about sharing ideas and collaborating with peers, as this review has shown collaboration to be an important factor leading to engagement.

While some studies reported that students considered social networking tools a burden, participating in course discussions (e.g., through Facebook groups) and wider online communities of practice (CoP; e.g., Twitter) led to feelings of connectedness, confidence, and enjoyment. These results suggest that introducing preservice teachers to online CoPs early might enable them to develop valuable networks with both beginning and established teachers. This strategy could help them feel less isolated while on placements and could be a valuable resource throughout their teaching careers. Healthy teaching communities that regularly share lesson ideas, information about upcoming professional development and career opportunities, and ideas for integrating technology in the classroom can be found on Twitter, for example (see, e.g., the hashtags #NQT for beginning teachers, #EduTwitter, and #MFLtwitterati for modern foreign language teachers). Therefore, future research exploring how to successfully integrate social networking tools into preservice education courses would be valuable.

Conclusion

This systematic review of a subset of studies (n = 42) from a larger review (see Bond et al., 2020) synthesized research investigating educational technology and student engagement in the field of education. Results revealed that behavioral engagement was by far the most affected domain, followed by affective and cognitive engagement. Disengagement was found less frequently; however, affective disengagement was the form most often promoted, with studies finding that students experienced frustration, disappointment, and worry or anxiety in particular.

In the context of education, two approaches to the application of educational technology seem to be prominent in, and characteristic of, this field of study: (a) using technology to enhance communication and social exchange and (b) using technology for self-directed learning. The review also found that educational technology was particularly effective at enhancing behavioral and affective engagement when text-based tools, knowledge organization and sharing tools, and social networking tools were used, although some caution is needed when employing social networking tools, as they can also result in frustration and disengagement.

This review highlighted the need for studies to provide full study design information and to align research with theory. Studies investigating student engagement must also include a definition of the construct in order to move conversations forward. In addition, studies into how educational technology affects disengagement would be particularly useful. The review also highlighted a number of other research gaps, including online education, postgraduate courses, and the use of educational technology by in-service teachers, such as their participation in online communities of practice. Research in contexts outside the US, Hong Kong, and the UK would also provide valuable insight, and the use of qualitative methods would be particularly welcome.

While we made every effort to ensure that the review was carried out rigorously and transparently, a structural bias is nevertheless inherent: we searched only English-language databases and included only journal articles published from 2007 to 2016, the latter cutoff owing to the length of time it takes to conduct such a rigorous review (see Borah, Brown, Capers, & Kaiser, 2017). Furthermore, the decision to apply a sampling technique to the overall sample may have led to important articles being left out of this review.

These limitations would need to be addressed in further research by widening the number of databases searched, by including researchers working in other dominant academic languages (e.g., Spanish), and by focusing the review from the outset either on a shorter time frame or on a particular field of study (see Bedenlier et al., 2020a). With the ever-evolving variety of educational technology tools available, an update of this review to include research from the years 2017–2019 is also suggested, in order to gain more recent insight into successful teaching and learning with educational technology in the field of education.

Funding

This work was supported by the Bundesministerium für Bildung und Forschung (German Federal Ministry of Education and Research – BMBF), Grant No. 16DHL1007.

References

Abdool, P. S., Nirula, L., Bonato, S., Rajji, T. K., & Silver, I. L. (2017). Simulation in undergraduate psychiatry: Exploring the depth of learner engagement. Academic Psychiatry: The Journal of the American Association of Directors of Psychiatric Residency Training and the Association for Academic Psychiatry, 41(2), 251–261. https://doi.org/10.1007/s40596-016-0633-9

Abendroth, M., Golzy, J. B., & O’Connor, E. A. (2011). Self-created YouTube recordings of microteachings: Their effects upon candidates’ readiness for teaching and instructors’ assessment. Journal of Educational Technology Systems, 40(2), 141–159. https://doi.org/10.2190/ET.40.2.e

Admiraal, W., van Vugt, F., Kranenburg, F., Koster, B., Smit, B., Weijers, S., & Lockhorst, D. (2017). Preparing pre-service teachers to integrate technology into K–12 instruction: Evaluation of a technology-infused approach. Technology, Pedagogy and Education, 26(1), 105–120. https://doi.org/10.1080/1475939X.2016.1163283

Akçayır, G., & Akçayır, M. (2018). The flipped classroom: A review of its advantages and challenges. Computers & Education, 126, 334–345. https://doi.org/10.1016/j.compedu.2018.07.021

Alcaraz-Salarirche, N., Gallardo-Gil, M., Herrera-Pastor, D., & Serván-Núñez, M. J. (2011). An action research process on university tutorial sessions with small groups: Presentational tutorial sessions and online communication. Educational Action Research, 19(4), 549–565. https://doi.org/10.1080/09650792.2011.625713

Alrasheedi, M., Capretz, L. F., & Raza, A. (2015). A systematic review of the critical factors for success of mobile learning in higher education (University students’ perspective). Journal of Educational Computing Research, 52(2), 257–276. https://doi.org/10.1177/0735633115571928

Appleton, J., Christenson, S. L., & Furlong, M. (2008). Student engagement with school: Critical conceptual and methodological issues of the construct. Psychology in the Schools, 45(5), 369–386. https://doi.org/10.1002/pits.20303

Arnold, N., & Paulus, T. (2010). Using a social networking site for experiential learning: Appropriating, lurking, modeling and community building. The Internet and Higher Education, 13(4), 188–196. https://doi.org/10.1016/j.iheduc.2010.04.002

Atmacasoy, A., & Aksu, M. (2018). Blended learning at pre-service teacher education in Turkey: A systematic review. Education and Information Technologies, 23(6), 2399–2422. https://doi.org/10.1007/s10639-018-9723-5

Azevedo, R. (2015). Defining and measuring engagement and learning in science: Conceptual, theoretical, methodological, and analytical issues. Educational Psychologist, 50(1), 84–94. https://doi.org/10.1080/00461520.2015.1004069

Bedenlier, S., Bond, M., Buntins, K., Zawacki-Richter, O., & Kerres, M. (2020a). Facilitating student engagement through educational technology in higher education: A systematic review in the field of arts & humanities. Australasian Journal of Educational Technology, 36(4), 126–150. https://doi.org/10.14742/ajet.5477

Bedenlier, S., Bond, M., Buntins, K., Zawacki-Richter, O., & Kerres, M. (2020b). Learning by doing? Reflections on conducting a systematic review in the field of educational technology. In O. Zawacki-Richter, M. Kerres, S. Bedenlier, M. Bond, & K. Buntins (Eds.), Systematic reviews in educational research (pp. 111–127). Wiesbaden, Germany: Springer Fachmedien Wiesbaden. https://doi.org/10.1007/978-3-658-27602-7_7

Ben-Eliyahu, A., Moore, D., Dorph, R., & Schunn, C. D. (2018). Investigating the multidimensionality of engagement: Affective, behavioral, and cognitive engagement across science activities and contexts. Contemporary Educational Psychology, 53, 87–105. https://doi.org/10.1016/j.cedpsych.2018.01.002

Betihavas, V., Bridgman, H., Kornhaber, R., & Cross, M. (2016). The evidence for ‘flipping out’: A systematic review of the flipped classroom in nursing education. Nurse Education Today, 38, 15–21. https://doi.org/10.1016/j.nedt.2015.12.010

Boekaerts, M. (2016). Engagement as an inherent aspect of the learning process. Learning and Instruction, 43, 76–83. https://doi.org/10.1016/j.learninstruc.2016.02.001

Bolden, B., & Nahachewsky, J. (2015). Podcast creation as transformative music engagement. Music Education Research, 17(1), 17–33. https://doi.org/10.1080/14613808.2014.969219

Bond, M. (2018). Helping doctoral students crack the publication code: An evaluation and content analysis of the Australasian Journal of Educational Technology. Australasian Journal of Educational Technology, 34(5), 168–183. https://doi.org/10.14742/ajet.4363

Bond, M. (2020). Facilitating student engagement through educational technology: Current research, practices and perspectives. (Doctoral thesis). University of Oldenburg, Germany. https://doi.org/10.13140/RG.2.2.24728.75524

Bond, M., & Bedenlier, S. (2019). Facilitating student engagement through educational technology: Towards a conceptual framework. Journal of Interactive Media in Education, 2019(1), 1–14. https://doi.org/10.5334/jime.528

Bond, M., Buntins, K., Bedenlier, S., Zawacki-Richter, O., & Kerres, M. (2020). Mapping research in student engagement and educational technology in higher education: A systematic evidence map. International Journal of Educational Technology in Higher Education, 17(1), 1–30. https://doi.org/10.1186/s41239-019-0176-8

Bond, M., Zawacki-Richter, O., & Nichols, M. (2019). Revisiting five decades of educational technology research: A content and authorship analysis of the British Journal of Educational Technology. British Journal of Educational Technology, 50(1), 12–63. https://doi.org/10.1111/bjet.12730

Borah, R., Brown, A. W., Capers, P. L., & Kaiser, K. A. (2017). Analysis of the time and workers needed to conduct systematic reviews of medical interventions using data from the PROSPERO registry. BMJ Open, 7(2), e012545. https://doi.org/10.1136/bmjopen-2016-012545

Boury, T. T., Hineman, J. M., Klentzin, J. C., & Semich, G. W. (2013). The use of online technology to facilitate pre-service teachers’ engagement and cultural competency development during an international field placement: Reflections from Austria. International Journal of Information and Communication Technology Education, 9(3), 65–79. https://doi.org/10.4018/jicte.2013070105

Bower, M. (2015). A typology of Web 2.0 learning technologies. EDUCAUSE Digital Library. Retrieved from http://www.educause.edu/library/resources/typology-web-20-learning-technologies

Bower, M. (2016). Deriving a typology of Web 2.0 learning technologies. British Journal of Educational Technology, 47(4), 763–777. https://doi.org/10.1111/bjet.12344

Boyle, E. A., Hainey, T., Connolly, T. M., Gray, G., Earp, J., Ott, M., Lim, T., Ninaus, M., Ribeiro, C., & Pereira, J. (2016). An update to the systematic literature review of empirical evidence of the impacts and outcomes of computer games and serious games. Computers & Education, 94, 178–192. https://doi.org/10.1016/j.compedu.2015.11.003

Broadbent, J., & Poon, W. L. (2015). Self-regulated learning strategies and academic achievement in online higher education learning environments: A systematic review. The Internet and Higher Education, 27, 1–13. https://doi.org/10.1016/j.iheduc.2015.04.007

Brunton, J., & Thomas, J. (2012). Information management in systematic reviews. In D. Gough, S. Oliver, & J. Thomas (Eds.), An introduction to systematic reviews (pp. 83–106). London, UK: SAGE.

Buckley, A. (2017). The ideology of student engagement research. Teaching in Higher Education, 23(6), 718–732. https://doi.org/10.1080/13562517.2017.1414789

Bulu, S. T., & Yildirim, Z. (2008). Communication behaviors and trust in collaborative online teams. Educational Technology & Society, 11(1), 132–147. Retrieved from https://pdfs.semanticscholar.org/5bce/a7b2be7b5c908c1e7d9f2a2d931c4b9d4789.pdf

Buntins, K., Bond, M., Bedenlier, S., Zawacki-Richter, O., & Kerres, M. (2018). Fuzzy concepts and large literature corpi: Addressing methodological challenges in systematic reviews. Research Synthesis 2018, University of Trier, Germany. Retrieved from https://www.researchgate.net/publication/325693933_Fuzzy_concepts_and_large_literature_corpi_Addressing_methodological_challenges_in_systematic_reviews

Castañeda, L., & Selwyn, N. (2018). More than tools? Making sense of the ongoing digitizations of higher education. International Journal of Educational Technology in Higher Education, 15(1). https://doi.org/10.1186/s41239-018-0109-y