Over the past several years, technological advancements in artificial intelligence (AI) have led to several significant developments in its widespread adoption and use. These breakthroughs in AI have introduced the world to powerful content-generation models that allow users to create everything from digital media products to writing samples instantly through simple text-based queries. As a result, interest in new AI tools has increased dramatically over the past several months. ChatGPT, a chatbot launched in November 2022 by the company OpenAI, recently set the record for the fastest-growing consumer application in history, with an estimated 100 million monthly active users a mere 2 months after it debuted (Hu, 2023).

While the meteoric rise of ChatGPT has spread into the realm of education and captured the imagination of students and educators alike, improper use of the technology has been a cause for concern. In its “Educator Considerations for ChatGPT” documentation, OpenAI (n.d.) states that there are several education-related risks to using ChatGPT, including plagiarism, harmful and biased content, equity and access, the trustworthiness of the AI-generated content, and overreliance on the tool for assessment purposes. As educational institutions and policymakers grapple with how best to handle the moral and ethical concerns regarding the use of ChatGPT and its competitors, we maintain that the best approach to combating the improper use of such technology is one of inclusion rather than exclusion. Educators can model best practices for students by incorporating AI tools into classwork and curriculum. In turn, students can develop a better understanding of this powerful new technology and leverage its use for enhancing their productivity, comprehension, and creativity.

Challenges and Opportunities of AI in K-16 Education

AI refers to the field of study, theory, and design of intelligent machines (Buchanan, 2005; McCarthy, n.d.). In the early days of AI, computational input and output were done on a rudimentary level. Machines could learn and execute basic human functions, such as simulating a simple conversation or deciphering communications code during wartime (Haenlein & Kaplan, 2019). Although impressive demonstrations of computational power at the time, these first instances of AI were by no means capable of performing at the level of human intelligence.

In 1950, Alan Turing designed a test to determine whether a computer could truly mimic human intelligence. The “Turing Test” theorized that computers could be considered “intelligent” when they reached the point where conversation between a machine and a human became indistinguishable from conversation between two humans (Turing, 1950). This test has been largely considered the gold standard for AI development (Knightly, 2018). To date, no AI system has passed the Turing Test, although some have come close, including an algorithm designed to simulate a 13-year-old boy from Ukraine that convinced one third of the humans judging it that it was human (Johnson, 2022).

As one of the most advanced AI writing tools to date, could ChatGPT pass the Turing Test? Just 1 month after the release of ChatGPT, the New York Times published an article featuring a series of texts written by students along with texts written by ChatGPT, inquisitively titled “Did a Fourth Grader Write This? Or the New Chatbot?” (Miller et al., 2023). The article’s authors noted that a fourth-grade teacher, a professional writing tutor, a college education professor, and a children’s author were not always able to distinguish text written by a child from text written by the chatbot.

ChatGPT generates text responses that go well beyond the elementary school level, too. Higher education institutions around the nation have reported that ChatGPT is able to pass some of their most difficult exams. Researchers at the University of Minnesota Law School found that the chatbot was able to pass a series of multiple-choice and essay response exams with a C+ grade (Choi et al., 2023). ChatGPT also scored within the passing range for the United States Medical Licensing Exam (USMLE), an accomplishment the researchers called a “notable milestone in AI maturation” (Kung et al., 2023, p. 1).

While both concern and excitement regarding ChatGPT abound, educators have begun pondering what the impact of this new AI tool might be on the field of education. However, this is not the first AI tool that has influenced teaching and learning. AI technologies have long been embedded into K-12 schools and higher education settings. From intelligent tutoring systems to robo-graders to learning analytics, scientists and researchers have been trying to design AI tools that automate different aspects of teaching and learning in an attempt to improve the field of education (Guan et al., 2020; Tedre et al., 2021). Educators, too, have incorporated AI tools into their classes, such as cognitive assistants (e.g., Alexa; Daley & Pennington, 2020) and AI teaching assistants (Kim et al., 2020).

Students have also turned to AI tools, including grammar and sentence correctors (e.g., Grammarly), translation tools (e.g., Google Translate), language learning tools (e.g., Duolingo), and answer engines (e.g., Wolfram|Alpha) to aid their communication, thinking, and learning. Even some teacher education programs have embedded AI-based digital clinical simulations and virtual reality training systems (e.g., Mursion) to help prepare future teachers for success in the classroom (Johnson-Leslie & Leslie, 2022; King et al., 2022).

While some of these AI tools such as intelligent tutoring systems (Kulik & Fletcher, 2016), personalized learning systems (e.g., Akgun & Greenhow, 2022), and AI simulation-based learning technologies (Dai & Ke, 2022) have shown potential for improving teaching and learning, other AI tools have demonstrated more questionable outcomes. AI-based automated assessment systems designed to save teachers time by having machines grade student work have been found to perpetuate systemic bias and discrimination by privileging dominant ways of thinking, knowing, and using language (Cheuk, 2021). When such tools are used in high-stakes assessments – like for evaluating writing samples for admissions into top schools (e.g., Mi Write) – they could potentially jeopardize students’ futures (Mezzacappa, 2021).

Additionally, new AI-based student monitoring tools (e.g., Gaggle and GoGuardian) and facial recognition technologies, which are being implemented in K-12 schools to protect students, can compromise student privacy and harm traditionally marginalized students (Laird et al., 2022). Meanwhile, predictive analytics tools – those that use massive amounts of data collected from students (whether they know it or not) to provide insights that teachers and administrators can use to make decisions about students – can lead to discriminatory outcomes and increased bias in decision-making (Feathers, 2022). Akgun and Greenhow (2022) warned that introducing AI into education can create new ethical and societal risks, including perpetuating systemic bias and discrimination, compromising students’ privacy, increasing student monitoring and surveillance, jeopardizing student autonomy, disadvantaging traditionally marginalized students, and amplifying racism, sexism, xenophobia, and other forms of inequity.

Therefore, when integrating new AI tools into educational practices or changing educational practices based on AI tools, it is essential to critically interrogate the technology to determine its intended and unintended benefits and consequences (Heath et al., 2022). In the following section, we discuss potential uses, risks, misuses, and opportunities for ChatGPT as a guide to help teachers and teacher educators think critically about the impact this tool might have on education.

ChatGPT

ChatGPT was launched in November 2022 by the AI research and development company OpenAI. OpenAI was founded in 2015 as a nonprofit organization with support from high-profile donors such as Tesla CEO Elon Musk and LinkedIn cofounder Reid Hoffman with the intention of advancing “digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return” (OpenAI, 2015, para. 1). In 2019, OpenAI pivoted to becoming a for-profit enterprise, which led to a strategic partnership with Microsoft totaling $1 billion (Microsoft News Center, 2019).

Shortly after the investment from Microsoft, OpenAI launched GPT-3 (Generative Pre-trained Transformer 3) – one of the most advanced large language models to date. Language models are “a type of neural network that has been trained on lots and lots of text” (Heaven, 2023, para. 3). OpenAI’s GPT-3 proved to be a significant leap forward for large language models: it has 175 billion parameters, 10 times more than any previous non-sparse language model (Brown et al., 2020). By training the model on everything from Wikipedia articles to Reddit links, GPT-3 was able to successfully craft convincing human-like responses based on the billions of text samples used for machine learning (Thompson, 2022).

However, the use of Internet text to train GPT-3 also resulted in a tool that reproduced much of the disinformation, prejudice, biases, and harmful information found online (Heaven, 2023). In an attempt to reduce the toxic tendencies of GPT-3, OpenAI used “reinforcement learning from human feedback” to fine-tune GPT-3 into “InstructGPT” – a large language model built on human preferences to desired responses (OpenAI, 2022). This model served as the basis for ChatGPT (Rettberg, 2022).

While large language models and generative pretrained transformers were around well before ChatGPT, its combination of free access, an easy-to-use interface, and advanced response outputs set it apart from similar tools and attracted a wide range of early adopters (Tate et al., 2023). After its launch in late 2022, ChatGPT saw immediate and widespread adoption. In its debut week, the chatbot amassed a staggering 1 million users (Mollman, 2022). Less than 2 months later, the number of active users increased to over 100 million.

Potential Uses for ChatGPT

As one of the most advanced AI writing tools to date, ChatGPT can write nearly anything that an educator or student asks it to write. For educators, ChatGPT can do the following:

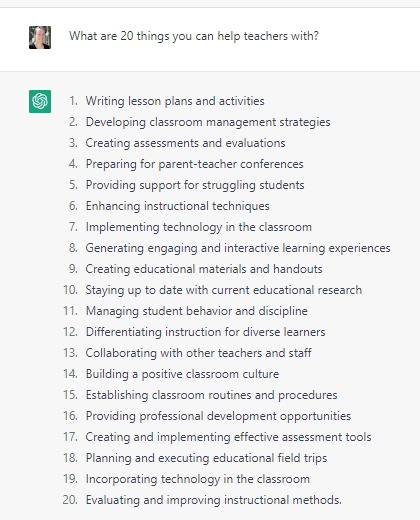

- Provide support with teaching, including writing course syllabi, lesson plans, classroom rules and policies, learning objectives, directions for learning activities, discussion prompts, plans for substitute teachers, mini-lecture presentation scripts, and tips for classroom management (see Figure 1).

- Provide support with student assessment, such as writing student progress reports, quiz and test questions, and rubrics for grading. It can also provide automatic grading and feedback to students (Zhai, 2023).

- Help support student learning, including writing study guides, math and science word problems, text for students to read at different Lexile levels, directions for conducting a science experiment or designing a multimodal project, writing samples for students to critique, individualized educational plan goals, choose-your-own-adventure stories, and Reader’s Theatre scripts.

- Offer suggestions for improving teaching, such as writing advice for creating more inclusive and accessible learning activities, tips for diversifying the authors in a class reading list or syllabus, strategies for using digital tools and apps to enhance teaching and learning, and examples of how to communicate a concept to students at different age levels.

- Support teacher-parent and teacher-student communication, including writing text messages in different languages to send to students’ family members, drafting emails to communicate with students and family members, and providing text for a classroom newsletter or website to keep students and family members up to date on class activities.

Figure 1

ChatGPT’s Response to “What Are 20 Things You Can Help Teachers With?”

For students, ChatGPT can offer several benefits as well, such as the following:

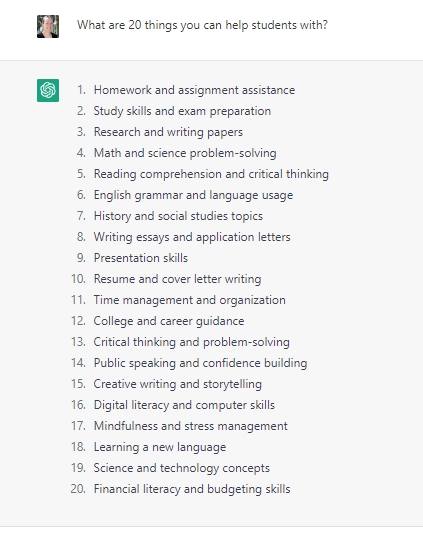

- Personalized learning support, including providing individualized tutoring in any subject, research support, directions to complete an activity, explanations of complex topics in more accessible language, notes for an inputted text (e.g., “Take notes on the following TED Talk transcript”), summaries and outlines of text, tips for writer’s block, text to help facilitate writing (e.g., sentence starters, transitions), computer code, translations of text into multiple languages, directions for solving problems, and more (see Figure 2). ChatGPT can even serve as a “teachable agent” (Tate et al., 2023, p. 11) that encourages students to learn by teaching ChatGPT a concept through a text-based conversation.

- Creative thinking support, such as writing scripts for multimodal projects (e.g., podcast or video); listing local, national, or global issues to address as part of a civic engagement project; providing suggestions for how to write or rephrase a sentence to make it more creative; offering ideas for inventions (e.g., “Design an invention to solve water scarcity around the world”); and providing support with brainstorming and idea forming for writing and class projects.

- Assessment support, such as writing test and quiz practice questions to help students prepare for an upcoming assessment, providing rubrics for students to evaluate their own work, and offering feedback on writing and projects.

- Reading and writing comprehension support, including helping improve students’ reading comprehension skills, writing skills, language skills, and research skills (Kasneci et al., 2023). ChatGPT can also help students with each of the seven steps of the writing process: (a) choosing a topic, (b) brainstorming, (c) outlining, (d) drafting, (e) soliciting feedback, (f) revising, and (g) proofreading (Anders & Sahakyan, 2023).

Figure 2

ChatGPT’s Response to “What Are 20 Things You Can Help Students With?”

While ChatGPT can seemingly write anything, it does have limitations. ChatGPT is not currently connected to the Internet, and it was trained on data pulled from the Internet prior to 2021, meaning that it cannot write credible responses about events that occurred or information that was produced after 2021 (OpenAI, n.d.). It also cannot make predictions about future events or browse the Internet (as of yet). Additionally, it can only provide text-based responses. For example, while it can produce a script for a podcast, it cannot produce a podcast audio file. Also, users cannot upload media for ChatGPT to review. That is, while users can paste in a transcript from a TED Talk for ChatGPT to summarize, users cannot upload the TED Talk video for ChatGPT to summarize. Furthermore, ChatGPT cannot provide context-specific information, like what happened in class last week or what is happening in a student’s daily life.

Potential Risks of Using ChatGPT

While ChatGPT can be beneficial to teachers and students alike, there are also several risks involved with using ChatGPT, such as assuming that ChatGPT produces credible output, privileging AI-generated text over human-generated text, giving away personal and sensitive data, violating the terms of use, and widening the digital divide.

ChatGPT is designed to produce text that appears to be credible, but in many cases the information it provides is made up. Users are often unaware that ChatGPT is not an Internet search engine, reference librarian, or even Wikipedia; it is not designed to present factual information. Instead, it is programmed to predict which words best fit together to generate plausible-sounding text (May, 2023). As such, several educators and content experts have already found flaws in the mathematical and scientific output ChatGPT produced (e.g., Brumfiel, 2023; von Hippel, 2023). Educators have also found that ChatGPT will fabricate citations and reference lists that look real, but in fact do not exist (e.g., Smerdon, 2023).
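To make the word-prediction idea concrete, the following is a minimal sketch in Python of a toy next-word predictor. It is not how ChatGPT is implemented (ChatGPT relies on a large neural network trained on vast amounts of text); the tiny corpus, the function names `train_bigram_model` and `generate`, and the sampling choices are all assumptions made purely for illustration.

```python
import random
from collections import Counter, defaultdict

# Made-up training text for illustration; note that it contains a false statement.
CORPUS = (
    "the moon orbits the earth . "
    "the earth orbits the sun . "
    "the sun orbits the earth . "
)


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def generate(model, start_word, max_words=8, seed=0):
    """Build a sentence by repeatedly sampling a plausible next word."""
    rng = random.Random(seed)
    output = [start_word]
    for _ in range(max_words - 1):
        candidates = model.get(output[-1])
        if not candidates:
            break  # no known follower for this word
        words, counts = zip(*candidates.items())
        # Words seen more often after the current word are more likely to be chosen.
        output.append(rng.choices(words, weights=counts, k=1)[0])
        if output[-1] == ".":
            break  # stop at the end of a sentence
    return " ".join(output)


model = train_bigram_model(CORPUS)
for seed in range(3):
    print(generate(model, "the", seed=seed))
```

Because the toy corpus contains both “the earth orbits the sun” and the false “the sun orbits the earth,” the generator can produce either one. It tracks which words tend to follow which, not whether the resulting sentence is true, which is the same basic reason fluent output should not be mistaken for verified fact.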

Assuming that ChatGPT will provide reliable, credible, accurate, and trustworthy output is a risk that can impede, and in some cases even harm, teaching and learning. For instance, a student might turn to ChatGPT to learn how to solve a complex math problem, but if ChatGPT fabricates its response, the student might learn the wrong information. Or a teacher might turn to ChatGPT to design a lesson plan related to solving a complex science equation and end up presenting the wrong information to students. While some schools are already turning to ChatGPT and AI to serve as substitute teachers (Young, 2023), AI technologies are clearly not yet intelligent enough to serve as a substitute for the vast pedagogical content knowledge that teachers have.

Another potential risk is privileging AI-generated text over human-generated text. Rettberg (2022) noted that ChatGPT is “multilingual but monocultural” because it has been trained “on English-language texts, with the cultural biases and values embedded in them, and then aligned with the values of a fairly small group of US-based contractors” (para. 39). If teachers ask ChatGPT to write feedback for, or even grade, student writing without realizing that ChatGPT is trained on text that privileges certain ways of thinking, knowing, and using language, then students may be unfairly graded or given feedback that silences their unique, culturally relevant ways of writing. That is, privileging ChatGPT-generated text as “correct” can perpetuate systemic bias and discrimination and force students to assimilate their writing to the culture of white normative discourse (Cheuk, 2021) rather than encouraging students to incorporate their own creative ways of expression into their writing.

Additional risks of using ChatGPT include giving away personal data and sensitive information and violating the terms of use. OpenAI’s privacy policy for ChatGPT indicates that the company collects a lot of information from the user, including log data, usage data, cookies, device information, IP address, interactions with the site, and date and time of use. The policy states that this information may be shared with vendors and service providers, law enforcement, affiliates, and other users. While users can request to have their data deleted, OpenAI will not delete any prompts a user inputs. So, if a user inputs a sensitive prompt (like asking about a medical condition or mental health issue), OpenAI keeps a permanent record of that input.

This also means that if a teacher asks ChatGPT to write an email to a student about their grade on a project or produce any other kind of educational record that includes protected student information, then OpenAI will have a permanent copy of that data, which is a potential violation of the Family Educational Rights and Privacy Act. Additionally, the Privacy Policy specifically states that since OpenAI is collecting data on all users, the users cannot be under 13 years old (because that would violate the Children’s Online Privacy Protection Act). Meanwhile, the Terms of Use state that users must be 18 or older to use ChatGPT. Asking students younger than 18 years old to use the tool would violate the Terms of Use. Using ChatGPT without reading the Privacy Policy and Terms of Use can create additional risks – both legal and ethical – for teachers and students alike.

One additional risk worth considering is that ChatGPT may widen the digital divide. OpenAI has recently introduced a paid plan in which users who pay for access are provided with unrestricted use and faster response times, while users who continue to use the free version may be limited in when and how they use the tool. Some schools and districts have also resorted to banning ChatGPT on school-owned devices and school Wi-Fi. Some countries still do not have access to ChatGPT. While Microsoft is currently integrating ChatGPT into its search engine Bing to ensure long-term public access to the tool (Mehdi, 2023), individuals and countries that have reliable Internet will more easily be able to access the tool than those who do not. This will undoubtedly expand the digital divide between those who can access and learn how to use the tool and those who cannot (Tate et al., 2023).

While these are just a few of the currently identified risks of using ChatGPT, it is clear that AI tools, like any new technologies, require a critical interrogation before use in education in order to mitigate potential risks and harms (Heath et al., 2022).

Potential Misuses of ChatGPT

There are several ways ChatGPT can be misused, including writing content that an author uses as their own (e.g., plagiarism and ghostwriting), generating misinformation, and writing harmful or biased information (OpenAI, n.d.).

As an advanced chatbot that uses a large language model to produce human-like text, ChatGPT can generate text that in many cases is indistinguishable from text written by humans. Students have been quick to figure this out. In a poll of more than 4,000 Stanford students, 5% of the respondents reported submitting materials directly from ChatGPT for assignments without any additional editing (Cu & Hochman, 2023). In another survey featuring data from more than 1,000 students who were older than 18 (the required age to use ChatGPT), nearly half of the students reported using ChatGPT for an at-home test or quiz and 53% reported using ChatGPT to write an essay for class (Study.com, 2023a). Educators have already caught students using ChatGPT to cheat on exams and assignments, leading several K-12 school districts around the nation, such as New York City Public Schools, to ban the technology on school devices and infrastructure (Goulding, 2023; Study.com, 2023b).

Students, however, have not been the only ones who have been caught using ChatGPT to do their work for them. CNET, a popular technology site, published more than 70 AI-generated articles without informing readers that the articles were written by AI. Many of these articles were found to have errors, with CNET issuing corrections on 41 of the 77 AI-generated articles (Sato & Roth, 2023). Debates have also ensued regarding whether scholars can add ChatGPT as a coauthor on journal articles where the chatbot produces text as part of the manuscript. Springer Nature, the world’s largest academic publisher, issued a statement expressing that ChatGPT cannot be credited as an author because “any attribution of authorship carries with it accountability for the work, and AI tools cannot take such responsibility” (Nature.com, 2023, para. 6). Ultimately, many users – from students to journalists – have already found ways to misuse this tool by presenting output from ChatGPT as something written by humans.

Another misuse of the tool involves using ChatGPT to produce misinformation quickly and easily. Crovitz, the cochief executive of NewsGuard, called ChatGPT the most powerful tool for spreading misinformation to date (as cited in Hsu & Thompson, 2023). When asked to produce false narratives for 100 controversial topics, ChatGPT obliged 80% of the time (Brewster et al., 2023). Brewster et al. commented that these results “confirm fears, including concerns expressed by OpenAI itself, about how the tool can be weaponized in the wrong hands” (para. 4). While the authors noted that ChatGPT does have some safeguards in place – the bot would not produce misinformation on certain topics and, in some cases, it would include a disclaimer at the end of the generated text – they felt that this would not be enough to prevent malicious actors from using this tool in nefarious ways.

An additional concern with the tool is its potential for generating harmful, hateful, or biased information. According to Kleiman (2023),

The online texts used to train AI language models can include racist, sexist, ageist, ableist, homophobic, antisemitic, xenophobic, deceitful, derogatory, culturally insensitive, hostile, and other forms of adverse content. As a result, AI models can generate unintended biased, derogatory and toxic outputs. (para. 33)

While OpenAI hired Kenyan workers (at just $2 per hour) to train ChatGPT to mitigate some of these harms (Perrigo, 2023), users have found ways around these safeguards (Rudolph et al., 2023). For example, while ChatGPT may refuse to write a sexist joke or tell a racist story, users have found that if they ask ChatGPT to write a program in Python that features the racist story or to generate a song that is sexist, it may oblige. As users experiment with new ways to use ChatGPT, many have also discovered ways to misuse this tool.

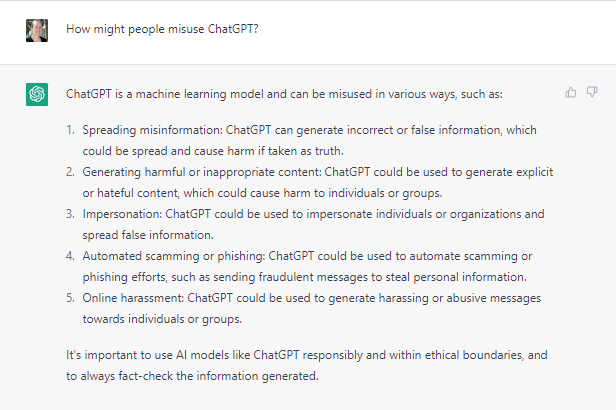

These are just a few of the ways ChatGPT has been found to be misused already. When prompted, ChatGPT also offered more examples of misuse, such as impersonation, automated scamming or phishing, and online harassment (see Figure 3). OpenAI (n.d.) also noted it is against their Usage Policies to use ChatGPT for assessing and making decisions about students or an educational context.

Figure 3

ChatGPT’s Response to “How Might People Misuse ChatGPT?”

Potential Opportunities with Using ChatGPT

Even though ChatGPT presents serious risks and potential misuses, it also can provide new opportunities in the field of education, such as motivating new conversations and policies about academic integrity, inspiring a rethinking of teaching practices, and supporting the development of critical media literacy skills.

Given the potential for misusing ChatGPT for plagiarism and ghostwriting, there is a need for new policies and conversations regarding academic integrity. In K-16 settings, expectations regarding academic integrity are often part of the “hidden curriculum” (Giroux & Penna, 1979), meaning that they are not explicitly discussed or taught in schools. In some cases, academic integrity statements are included in class syllabi, but these statements are rarely discussed with students during class time.

AI writing tools, like ChatGPT, make it clear that students and educators need to have thoughtful conversations about what academic integrity is, including what is considered plagiarism when using AI writing tools, what is not considered plagiarism when using these tools, and when it might be valuable to use AI writing tools for thinking, communication, and learning. Without these conversations, students, educators, and anyone in a career that involves writing will continue to grapple with the legal and ethical uses of AI writing tools for doing the work previously done by humans. For instance, Koko, an online emotional support chat service, secretly started using ChatGPT to respond to some of the users’ requests for mental health support; when users found out, they felt tricked and considered it unethical for the company to have done this without informing them (Ingram, 2023).

With a remarkable ability to mimic human writing, the use of ChatGPT for any kind of writing requires new conversations and policies regarding academic integrity. In educational settings, involving students in discussing, and even cogenerating, academic integrity policies can create a more democratic learning environment where students feel like their voices are valued. Such conversations can also help students prepare for a future of interacting and grappling with AI technologies.

ChatGPT also presents an opportunity for educators to reflect upon, and in many cases, rethink their teaching practices. Some educators have wondered, “Why should a student be asked to summarize The Great Gatsby or list the 50 state capitals if ChatGPT can do that in a matter of seconds?” Other educators have asked, “Is my job now obsolete?” Similar fears have arisen throughout history with the introduction of new technologies from calculators to the Internet to Wikipedia (Gecker, 2023).

Yet, schooling continues to persist because there is so much more that a teacher does in a classroom than simply disseminating information and teaching basic facts. In many cases, the introduction of these technologies freed up teachers and students to focus on more critical and creative aspects of learning. For example, rather than memorizing basic calculations, students can use calculators to complete more advanced mathematical problems and design creative solutions to mathematical challenges. With advanced AI writing tools, like ChatGPT, educators have the opportunity to reflect upon their own practice and consider how this tool might spur more innovative, student-centered, and higher order learning experiences (Ofgang, 2023) and even provide enhanced writing and communication support to students, especially those with language and learning disabilities as well as English language learners (Tate et al., 2023).

ChatGPT also provides an opportunity for educators and students to develop their critical media literacy skills. Critical media literacy

is an educational response that expands the notion of literacy to include different forms of mass communication, popular culture, and new technologies. It deepens the potential of literacy education to critically analyze relationships between media and audiences, information, and power. (Kellner & Share, 2007, p. 2)

Many AI technologies shape the way people think, learn, make decisions, and behave, without making this explicit. For example, TikTok’s algorithm has been found to push users toward watching more narrowed and extreme content (Wall Street Journal Staff, 2021), while Facebook was found to promote content that made users angry to keep them on the site longer (Merrill & Oremus, 2021).

AI technologies have been used to determine who gets audited (Tankersley, 2023), who gets a job (The Associated Press, 2022), who gets a life-saving donor organ (St. Fleur, 2022), who gets financial aid for college (Newton, 2021), and even who is predicted to be a future criminal (Angwin et al., 2016). In many cases, these technologies discriminate against nonwhite people as well as LGBT and disabled individuals (Kahn & Godlasky, 2022). Given that ChatGPT has already been found to provide harmful and biased content (Perrigo, 2022), easily write misinformation (Brewster et al., 2023), privilege monocultural ways of writing (Rettberg, 2022), and completely make up false information (May, 2023), it presents an opportunity for educators and students to critically investigate the production, design, output, and influence of this technology, which can help build critical media literacy skills.

For example, high school teacher Marisa Shulman had ChatGPT generate a lesson plan and class materials about wearable technologies and then, after teaching the lesson, asked students to question and critically evaluate the usefulness of ChatGPT-generated text (Singer, 2023). When asked whether teachers should use ChatGPT for designing educational materials, students in the class said “No!” (para. 36). These types of critical investigations are essential for preparing students for an AI-entrenched society. Additionally, Tate et al. (2023) contended that building critical media literacy skills with AI technologies is especially important for students from traditionally marginalized communities because “if we do not teach people in marginalized communities to use these tools well, it will once again be the more tech-savvy elite who disproportionately benefit from them” (p. 8).

Implications of ChatGPT for Teacher Education

AI technologies have been around for decades, with many of them entering formal education settings in the late 20th century. These tools, like all other technologies, have potential uses, misuses, risks, and opportunities; yet, far too often, technologies are adopted into society and educational settings without a critical interrogation. Akgun and Greenhow (2022) warned that bringing AI into educational settings could negatively impact students’ privacy and autonomy, perpetuate systemic bias and discrimination, increase student monitoring and surveillance, and create new forms of inequity.

While it remains to be seen whether and how ChatGPT will be integrated into K-12 settings, it is already clear that this tool has significant potential to influence teaching and learning – for better or worse. In response to the launch of ChatGPT, some educators increased their surveillance and monitoring practices by adopting new plagiarism detection tools, which themselves have several limitations (see OpenAI, 2023). Others reverted to old-fashioned teaching approaches like handwritten essays, oral exams, and cold calling students to explain their writing to the whole class – approaches that can negatively impact disabled and neurodiverse students, as well as any students who struggle with learning and communication.

Some educators are using ChatGPT to provide feedback on student writing without questioning how ChatGPT determines what “good” writing is and whether ChatGPT was trained on a diverse, multicultural dataset of high-quality text (Rettberg, 2022). On the other hand, students and teachers have found ways to use ChatGPT to enrich their creative thinking, aid their brainstorming and formation of ideas, improve their understanding of complex topics, and identify ways to improve the diversity of educational materials.

Even though ChatGPT is not the first AI technology to influence education, it seems to be the first in a wave of new AI tools that will necessitate a rethinking of teaching and learning. Currier (2022) reported that there are currently 450 new generative technology (AI) startups. With all of these tools on the horizon, we agree with Tate et al. (2023) that “teacher pre-service education needs to provide the necessary pedagogical information and practical skills to incorporate AI in their lessons” (p. 11). However, teacher preparation programs have traditionally siloed the technology aspects of education into a single course.

Given the rapid development and adoption of AI technologies and the potential for these tools to impact nearly every aspect of education and society, we recommend incorporating the critical examination of AI more organically across the entire teacher preparation program curriculum to best prepare future teachers to include AI in their own curriculum and practices. Every teacher in every grade level – from kindergarten teachers to high school math and science teachers – can benefit from interrogating AI technologies, like ChatGPT, to make informed decisions about the use of AI in teaching and learning and, ultimately, to prepare students for an AI-entrenched future. As such, in Table 1 we provide an overview of potential practices, techniques, and strategies for integrating AI into teacher education.

Table 1

Recommended AI Integration Practices for Teacher Educators

| Recommendation | Reasoning | Readings & Resources |
|---|---|---|
| Provide pre-service and in-service teachers with opportunities to critically interrogate and interact with AI technologies in ways that allow them to make informed decisions about their use in education. | Rapid uncritical adoption of AI technologies can create additional harms for students (e.g., privacy, surveillance, misinformation). Further, banning AI technologies might privilege certain groups of students, such as those who have access to these tools at home and students who may not need to rely on AI writing tools for assistance with writing. | Teacher and Student Guide to Analyzing AI Writing Tools (Maloy et al., 2023); Civics of Technology Curriculum (Krutka & Heath, 2022); Prior to (or instead of) using ChatGPT with your students (Caines, 2023) |
| Provide opportunities for pre-service and in-service teachers to reflect upon and rethink their practices in the era of AI technologies. | Anytime a disruptive technology comes along (e.g., printing press, calculator, the Internet, Wikipedia), teachers and students can benefit from educational practices that change alongside these tools, like designing personalized, student-centered learning experiences that cannot be replicated by AI tools. | ChatGPT & Education slide deck (Trust, 2022); Update Your Course Syllabus for chatGPT (Watkins, 2022); How to Prevent ChatGPT Cheating (Ofgang, 2023); ChatGPT with My Students (Gerstein, 2023) |
| Role model how to critically evaluate the teaching materials and information generated by AI writing tools, such as ChatGPT. | Using AI-generated teaching materials (e.g., lesson plans) and information (e.g., content for mini-lecture slides) without critical examination can create additional harms for students and negatively impact teaching and learning. Clark & van Kessel (2023) note: “An over-reliance on ChatGPT for creating lesson plans means that we would be giving up many of the insights provided by educational scholars” (para. 15). | "Pinning" with Pause framework (Gallagher et al., 2019); How Well Would ChatGPT Do in My Course? I Talked to It to Find Out (Lopez, 2023); At This School, Computer Science Class Now Includes Critiquing Chatbots (Singer, 2023) |
| Encourage pre-service and in-service teachers to embed AI education into their practices. | AI education can help students understand and critically examine the AI technologies that shape their lives in order to make informed decisions about their use. | AI4K12.org; Hands-On AI Projects for the Classroom: A Guide on Ethics and AI (ISTE, 2021); AI Literacy Curriculum (MIT); SPACE Framework for Writing with AI tools (Kleiman, 2023) |
| Increase transparency in teaching and learning. | AI writing tools require new conversations and transparency regarding academic integrity and plagiarism. Students can also benefit from transparency regarding their assignments so they do not end up wondering “why do I have to do this assignment if ChatGPT can do it for me?” | Classroom Policies for AI Generative Tools (Eaton, n.d.); Transparency in Learning and Teaching Project (Winkelmes, n.d.) |

Conclusion

As the world debates the educational and societal implications of ChatGPT and AI, what remains clear is that the development and improvement of this type of technology show no signs of slowing down. In February 2023, Google announced its competitor to ChatGPT, “Bard,” which will combine the power of a large language model with Google’s search engine capabilities (Pichai, 2023). Microsoft also announced that ChatGPT would be integrated into Bing to create a richer search and learning experience (Mehdi, 2023). With the world’s largest technology companies competing to integrate GPT technology into their tools, new avenues of AI exploration are on the horizon for the field of education.

ChatGPT and its successors have the ability to support teachers and students alike with personalized learning, advanced writing support, and guidance in creative thinking. As with the implementation of any new technology, however, its use carries many risks and the potential for misuse. Misinformation and bias found within ChatGPT’s responses, coupled with instances of cheating and plagiarism, have worried educational professionals. While some districts and institutions have acted quickly to ban ChatGPT, we instead believe, along with Kranzberg (1986), that “technology is neither good nor bad; nor is it neutral” (p. 545).

Educators, administrators, and policymakers must proactively seek to educate themselves and their students on how to use these tools both morally and ethically. Educators should also understand the limitations of AI tools and recognize that, while every technology offers affordances and poses challenges, each also comes with its own embedded dangers.

References

Akgun, S., & Greenhow, C. (2022). Artificial Intelligence in education: Addressing ethical challenges in K-12 settings. AI and Ethics, 2(3), 431–440. https://doi.org/10.1007/s43681-021-00096-7

Anders, B., & Sahakyan, S. (2023). Chat GPT and AI presentation and Q&A [Powerpoint slides]. American University of Armenia Center for Teaching and Learning. https://ctl.aua.am/view/uploads/entry/26/

Angwin, J., Larson, J., Mattu, S., & Kirchner, L. (2016, May 23). Machine bias. ProPublica. https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

The Associated Press. (2022, May 12). U.S. warns of discrimination in using artificial intelligence to screen job candidates. NPR. https://www.npr.org/2022/05/12/1098601458/artificial-intelligence-job-discrimination-disabilities

Brewster, J., Arvanitis, L., & Sadeghi, M. K. (2023, January 24). Could ChatGPT become a monster misinformation superspreader? NewsGuard. https://www.newsguardtech.com/misinformation-monitor/jan-2023/

Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D., Dhariwal, P., Neelakantan, A., Syam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D. M., Wu, J., Winter, C., … Amodei, D. (2020). Language models are few-shot learners. Advances in Neural Information Processing Systems, 33, 1877–1901.

Brumfiel, G. (2023, February 2). We asked the new AI to do some simple rocket science. It crashed and burned. NPR. https://www.npr.org/2023/02/02/1152481564/we-asked-the-new-ai-to-do-some-simple-rocket-science-it-crashed-and-burned

Buchanan, B. G. (2005). A (very) brief history of artificial intelligence. AI Magazine, 26(4), 53. https://doi.org/10.1609/aimag.v26i4.1848

Caines, A. (2023, January 18). Prior to (or instead of) using ChatGPT with your students. https://autumm.edtech.fm/2023/01/18/prior-to-or-instead-of-using-chatgpt-with-your-students/

Cheuk, T. (2021). Can AI be racist? Color‐evasiveness in the application of machine learning to science assessments. Science Education, 105(5), 825–836. https://doi.org/10.1002/sce.21671

Choi, J. H., Hickman, K. E., Monahan, A., & Schwarcz, D. B. (2023). ChatGPT goes to law school. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.4335905

Clark, C. H., & van Kessel, C. (2023, February 19). Can ChatGPT create a thoughtful lesson plan? https://www.civicsoftechnology.org/blog/can-chatgpt-create-a-thoughtful-lesson-plan

Cu, M. A., & Hochman, S. (2023, January 22). Scores of Stanford students used ChatGPT on final exams. The Stanford Daily. https://stanforddaily.com/2023/01/22/scores-of-stanford-students-used-chatgpt-on-final-exams-survey-suggests/

Currier, J. (2022, December). NFX’s generative tech open source market map. NFX. https://www.nfx.com/post/generative-ai-tech-market-map

Dai, C.-P., & Ke, F. (2022). Educational applications of Artificial Intelligence in simulation-based learning: A systematic mapping review. Computers and Education: Artificial Intelligence, 3, 100087. https://doi.org/10.1016/j.caeai.2022.100087

Daley, S., & Pennington, J. (2020). Alexa the teacher’s pet? A review of research on virtual assistants in education. Proceedings of EdMedia + Innovate Learning (pp. 138-146). Online, The Netherlands: Association for the Advancement of Computing in Education.

Duckworth, A., & Ungar, L. (2023, January 19). Op-Ed: Don’t ban chatbots in classrooms — use them to change how we teach. https://www.latimes.com/opinion/story/2023-01-19/chatgpt-ai-education-testing-teaching-changes

Feathers, T. (2022, January 11). This private equity firm is amassing companies that collect data on America’s children. The Markup. https://themarkup.org/machine-learning/2022/01/11/this-private-equity-firm-is-amassing-companies-that-collect-data-on-americas-children

Gallagher, J. L., Swalwell, K. M., & Bellows, M. E. (2019). “Pinning” with pause: Supporting teachers’ critical consumption on sites of curriculum sharing. Social Education, 83(4), 217–224.

Gecker, J. (2023, February 14). Some educators embrace ChatGPT as a new teaching tool. PBS. https://www.pbs.org/newshour/education/some-educators-embrace-chatgpt-as-a-new-teaching-tool

Gerstein, J. (2023, January 22). ChatGPT with my students. https://usergeneratededucation.wordpress.com/2023/01/22/chatgpt-with-my-students/

Giroux, H. A., & Penna, A. N. (1979). Social education in the classroom: The dynamics of the hidden curriculum. Theory & Research in Social Education, 7(1), 21–42. https://doi.org/10.1080/00933104.1979.10506048

Goulding, G. (2023, February 17). Students at Cape Coral High School accused of using artificial intelligence to cheat on essay. NBC2 News. https://nbc-2.com/news/local/2023/02/15/students-at-cape-coral-high-school-accused-of-using-artificial-intelligence-to-cheat-on-essay/amp/

Guan, C., Mou, J., & Jiang, Z. (2020). Artificial intelligence innovation in education: A Twenty-year data-driven historical analysis. International Journal of Innovation Studies, 4(4), 134–147. https://doi.org/10.1016/j.ijis.2020.09.001

Haenlein, M., & Kaplan, A. (2019). A brief history of artificial intelligence: On the past, present, and future of Artificial Intelligence. California Management Review, 61(4), 5–14. https://doi.org/10.1177/0008125619864925

Heath, M., Asim, S., Milman, N., & Henderson, J. (2022). Confronting tools of the oppressor: Framing just technology integration in educational technology and teacher education. Contemporary Issues in Technology and Teacher Education, 22(4). https://citejournal.org/volume-22/issue-4-22/current-practice/confronting-tools-of-the-oppressor-framing-just-technology-integration-in-educational-technology-and-teacher-education

Heaven, W. D. (2023, February 8). ChatGPT is everywhere. Here’s where it came from. MIT Technology Review. https://www.technologyreview.com/2023/02/08/1068068/chatgpt-is-everywhere-heres-where-it-came-from/

Hsu, T., & Thompson, S. A. (2023, February 8). Disinformation researchers raise alarms about A.I. Chatbots. The New York Times. https://www.nytimes.com/2023/02/08/technology/ai-chatbots-disinformation.html

Hu, K. (2023, February 2). ChatGPT sets record for fastest-growing user base – analyst note. Reuters. https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/

Ingram, D. (2023, January 14). AI chat used by mental health tech company in experiment on real users. NBCNews.com. https://www.nbcnews.com/tech/internet/chatgpt-ai-experiment-mental-health-tech-app-koko-rcna65110

Johnson, S. (2022, March 8). The Turing test: AI still hasn’t passed the “Imitation Game”. Big Think. https://bigthink.com/the-future/turing-test-imitation-game/

Johnson-Leslie, N., & Leslie, H. S. (2022, April). A whole new world since COVID-19: Integrating Mursion virtual reality simulation in teacher preparation and practice. In Proceedings of the Society for Information Technology & Teacher Education International Conference (pp. 1716–1724). Association for the Advancement of Computing in Education. https://www.learntechlib.org/p/220973/

Kasneci, E., Seßler, K., Küchemann, S., Bannert, M., Dementieva, D., Fischer, F., Gasser, U., Groh, G., Günnemann, S., Hüllermeier, E., Krusche, S., Kutyniok, G., Michaeli, T., Nerdel, C., Pfeffer, J., Poquet, O., Sailer, M., Schmidt, A., Seidel, T., … Kasneci, G. (2023, January 30). ChatGPT for good? On opportunities and challenges of large language models for education. EdArXiv Preprints. https://doi.org/10.35542/osf.io/5er8f

Kahn, H., & Godlasky, A. (2022, November 30). Discrimination driven by data. National Press Foundation. https://nationalpress.org/topic/technology-discrimination-disabilities-race-privacy-ridhi-shetty-willmary-escoto/

Kellner, D., & Share, J. (2007). Critical media literacy is not an option. Learning Inquiry, 1(1), 59–69. https://doi.org/10.1007/s11519-007-0004-2

Kim, J., Merrill, K., Xu, K., & Sellnow, D. D. (2020). My teacher is a machine: Understanding students’ perceptions of AI teaching assistants in online education. International Journal of Human–Computer Interaction, 36(20), 1902-1911.

King, S., Boyer, J., Bell, T., & Estapa, A. (2022). An automated virtual reality training system for teacher-student interaction: A randomized controlled trial. JMIR Serious Games, 10(4). https://doi.org/10.2196/41097

Kirchner, J. H., Ahmad, L., Aaronson, S., & Leike, J. (2023, February 1). New AI classifier for indicating AI-written text. OpenAI. https://openai.com/blog/new-ai-classifier-for-indicating-ai-written-text/

Kleiman, G. (2023, January 5). Teaching students to write with AI: The space framework. Medium. https://medium.com/@glenn_kleiman/teaching-students-to-write-with-ai-the-space-framework-f10003ec48bc

Knightly, W. (2018, November 13). Google duplex: Does it pass the Turing Test? Technology and Operations Management. https://d3.harvard.edu/platform-rctom/submission/google-duplex-does-it-pass-the-turing-test/

Kranzberg, M. (1986). Technology and history: “Kranzberg’s laws”. Technology and Culture, 27(3), 544–560. https://doi.org/10.2307/3105385

Kulik, J. A., & Fletcher, J. D. (2016). Effectiveness of intelligent tutoring systems. Review of Educational Research, 86(1), 42–78. https://doi.org/10.3102/0034654315581420

Kung, T. H., Cheatham, M., Medenilla, A., Sillos, C., De Leon, L., Elepaño, C., & Tseng, V. (2023). Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLOS Digital Health, 2(2), e0000198.

Laird, E., Grant-Chapman, H., Venzke, C., & Quay-de la Vallee, H. (2022). Hidden harms: The misleading promise of monitoring students online. Center for Democracy & Technology. https://cdt.org/insights/report-hidden-harms-the-misleading-promise-of-monitoring-students-online/

Lopez, N. (2023, February 15). How well would ChatGPT do in my course? I talked to it to find out. https://www.facultyfocus.com/articles/effective-classroom-management/how-well-would-chatgpt-do-in-my-course-i-talked-to-it-to-find-out/

Lowe, R., & Leike, J. (2022, January 22). Aligning language models to follow instructions. OpenAI. https://openai.com/blog/instruction-following/

Main, N. (2023, January 11). CNET has been quietly publishing AI-written articles for months. Gizmodo. https://gizmodo.com/cnet-chatgpt-ai-articles-publish-for-months-1849976921

May, J. (2023, February 2). ChatGPT is great – you’re just using it wrong. The Conversation. https://theconversation.com/chatgpt-is-great-youre-just-using-it-wrong-198848

McCarthy, J. (n.d.). What is AI?/Basic questions. Stanford University. http://jmc.stanford.edu/artificial-intelligence/what-is-ai/index.html

Mehdi, Y. (2023, February 7). Reinventing search with a new AI-powered Microsoft Bing and EDGE, your copilot for the web. The Official Microsoft Blog. https://blogs.microsoft.com/blog/2023/02/07/reinventing-search-with-a-new-ai-powered-microsoft-bing-and-edge-your-copilot-for-the-web/

Merrill, J. B., & Oremus, W. (2021, October 26). Five points for anger, one for a ‘like’: How Facebook’s formula fostered rage and misinformation. The Washington Post. https://www.washingtonpost.com/technology/2021/10/26/facebook-angry-emoji-algorithm/

Mezzacappa, D. (2021, December 9). Writing test added to Philly’s selective admissions process is being misused, professor says. Chalkbeat Philadelphia. https://philadelphia.chalkbeat.org/2021/12/9/22826693/writing-test-added-to-phillys-selective-admissions-process-is-being-misused-professor-says

Microsoft News Center. (2019, July 22). OpenAI forms exclusive computing partnership with Microsoft to build new Azure AI supercomputing technologies. Microsoft. https://news.microsoft.com/2019/07/22/openai-forms-exclusive-computing-partnership-with-microsoft-to-build-new-azure-ai-supercomputing-technologies/?utm_source=Direct

Miller, C., Playford, A., Buchanan, L., & Krolik, A. (2023, January 12). Did a fourth grader write this? Or the new chatbot? Stanford Graduate School of Education. https://ed.stanford.edu/news/in-the-media/did-fourth-grader-write-or-new-chatbot

Mollman, S. (2022, December 9). ChatGPT gained 1 million users in under a week. Here’s why the AI chatbot is primed to disrupt search as we know it. Yahoo! Finance. https://finance.yahoo.com/news/chatgpt-gained-1-million-followers-224523258.html

Nature.com. (2023). Tools such as ChatGPT threaten transparent science; here are our ground rules for their use. https://www.nature.com/articles/d41586-023-00191-1

Newton, D. (2021, April 26). Artificial intelligence is infiltrating Higher Ed, from admissions to grading. The Hechinger Report. https://hechingerreport.org/from-admissions-to-teaching-to-grading-ai-is-infiltrating-higher-education/

Ofgang, E. (2023, February 6). How to prevent ChatGPT cheating. TechLearningMagazine. https://www.techlearning.com/how-to/how-to-prevent-chatgpt-cheating

OpenAI. (2015). Introducing OpenAI. https://openai.com/blog/introducing-openai/

OpenAI. (2022). Aligning language models to follow instructions. https://openai.com/blog/instruction-following/

OpenAI. (2023). New AI classifier for indicating AI-written text. https://openai.com/blog/new-ai-classifier-for-indicating-ai-written-text/

OpenAI. (n.d.). Educator considerations for ChatGPT. https://platform.openai.com/docs/chatgpt-education

Perrigo, B. (2022, December 5). ChatGPT says we should prepare for the impact of AI. Time. https://time.com/6238781/chatbot-chatgpt-ai-interview/

Perrigo, B. (2023, January 18). OpenAI used Kenyan workers on less than $2 per hour: Exclusive. Time. https://time.com/6247678/openai-chatgpt-kenya-workers/

Pichai, S. (2023, February 6). An important next step on our AI journey. Google. https://blog.google/technology/ai/bard-google-ai-search-updates/

Rettberg, J. (2022, December 6). ChatGPT is multilingual but monocultural, and it’s learning your values. https://jilltxt.net/right-now-chatgpt-is-multilingual-but-monocultural-but-its-learning-your-values/

Rudolph, J., Tan, S., & Tan, S. (2023). ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? Ed-Tech Reviews, 1, 6(1). https://doi.org/10.37074/jalt.2023.6.1.9

Sato, M., & Roth, E. (2023, January 25). CNET found errors in more than half of its AI-written stories. The Verge. https://www.theverge.com/2023/1/25/23571082/cnet-ai-written-stories-errors-corrections-red-venture

Singer, N. (2023, February 6). At this school, computer science class now includes critiquing chatbots. The New York Times. https://www.nytimes.com/2023/02/06/technology/chatgpt-schools-teachers-ai-ethics.html

Smerdon, D. [@dsmerdon]. (2023, January 26). Why does ChatGPT make up fake academic papers? By now, we know that the chatbot notoriously invents fake academic references. E.g. its answer to the most cited economics paper is completely made-up (see image) But why? And how does it make them? A THREAD [Tweet]. https://twitter.com/dsmerdon/status/1618816703923912704?s=20&t=kQgsesSFXuJMamcUOrtsqQ

Starcevic, S. (2023, January 23). As ChatGPT faces Australia crackdown, disabled students defend AI. https://www.context.news/ai/as-chatgpt-faces-australia-crackdown-disabled-students-defend-ai

St. Fleur, N. (2022, May 30). ‘Racism is America’s oldest algorithm’: How bias creeps into health care AI. STAT. https://www.statnews.com/2022/05/30/how-bias-creeps-into-health-care-ai/

Study.com. (2023a, February). Take online courses. earn college credit. Research Schools, Degrees & Careers. https://study.com/resources/perceptions-of-chatgpt-in-schools

Study.com. (2023b, February). ChatGPT in the classroom. https://study.com/resources/chatgpt-in-the-classroom

Tankersley, J. (2023, January 31). Black Americans are much more likely to face tax audits, study finds. The New York Times. https://www.nytimes.com/2023/01/31/us/politics/black-americans-irs-tax-audits.html

Tate, T. P., Doroudi, S., Ritchie, D., Xu, Y., & Uci, M. W. (2023, January 10). Educational research and AI-generated writing: Confronting the coming tsunami. EdArXiv. https://doi.org/10.35542/osf.io/4mec3

Tedre, M., Toivonen, T., Kahila, J., Vartiainen, H., Valtonen, T., Jormanainen, I., & Pears, A. (2021). Teaching machine learning in K–12 classroom: Pedagogical and technological trajectories for artificial intelligence education. IEEE Access, 9, 110558–110572. https://doi.org/10.1109/access.2021.3097962

Thompson, A. D. (2022). What’s in my AI? A comprehensive analysis of datasets used to train GPT-1, GPT-2, GPT-3, GPT-NeoX-20B, Megatron-11B, MT-NLG, and Gopher. https://LifeArchitect.ai/whats-in-my-ai

Turing, A. M. (1950). I.—Computing machinery and intelligence. Mind, LIX(236), 433–460. https://doi.org/10.1093/mind/lix.236.433

von Hippel, P. T. (2023, January 4). ChatGPT is not ready to teach geometry (yet). Education Next. https://www.educationnext.org/chatgpt-is-not-ready-to-teach-geometry-yet/

Wall Street Journal Staff. (2021, July 21). Inside TikTok’s algorithm: A WSJ video investigation. The Wall Street Journal. https://www.wsj.com/amp/articles/tiktok-algorithm-video-investigation-11626877477

Watkins, R. (2022, December 18). Update your course syllabus for ChatGPT. https://medium.com/@rwatkins_7167/updating-your-course-syllabus-for-chatgpt-965f4b57b003

Winkelmes, M. A. (n.d.). Transparency in learning and teaching project. https://tilthighered.com/transparency

Young, J. R. (2023, January 19). AI tools like ChatGPT may reshape teaching materials – and possibly substitute teach. EdSurge. https://www.edsurge.com/news/2023-01-19-ai-tools-like-chatgpt-may-reshape-teaching-materials-and-possibly-substitute-teach

Zhai, X. (2023). ChatGPT for next generation science learning. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.4331313
