Emerging technologies are those that may rapidly evolve as they are adopted and adapted in different environments. Veletsianos (2010) discussed the complex and sometimes ambiguous use of the term emerging technology and argued that “(‘new’ and ‘old’) emerging technologies are evolving organisms that experience hype cycles, while at the same time being potentially disruptive, not yet fully understood, and not yet fully researched” (p. 3).

In a later work, Veletsianos (2016) discussed his earlier definition of emerging technologies and explained that the environments in which the technologies and practices function are important to determining emergence. He further argued that emerging technologies are often new, but the newness of technology is not the defining characteristic. Instead, emerging technologies in learning are better defined by a context that is evolving in practice and purposes and that is not yet fully understood within that context through research. These technologies are seen as promising within the field of education, yet this promise remains largely unfulfilled.

When considering the integration of emerging technology in teaching and learning, many different aspects of this process are undoubtedly of interest. Virtual reality head-mounted displays (VR HMDs) are one such technology that presently meets Veletsianos’s definition of emerging technology in education. The term virtual reality (VR) refers to a wide range of technologies in which an immersive digital simulation of a physical space allows users to interact as if they were present in that space.

Milman (2018) discussed a continuum of this technology, ranging from a real-world environment to fully immersive and artificial environments of VR, with augmented reality (AR) and mixed reality (MR) blending both real-world and artificial elements in different ways. Within the category of VR specifically, VR HMDs that are currently available are various models of goggle-like headsets, similar to that shown in Figure 1, through which a user views a digital display of the virtual space. VR HMD users can navigate within the virtual space by physical movement such as turning their head or interacting with a device such as a gamepad controller.

Figure 1 Man Using the Oculus Rift VR HMD

The potential for VR HMDs in education has been frequently discussed in a series of annual publications called The Horizon Reports, K-12 editions from 2015 through 2017, that described promising educational technologies and practices. Although no report was published in 2018, the potential and promise of mixed reality, including VR, was again noted in the 2019 Horizon Report with a particular emphasis on the promise for experiential learning. In the 2015 Horizon Report, the time-to-adoption for VR HMDs was estimated to be 4 to 5 years (Johnson et al., 2015).

In the subsequent three reports, VR HMDs were described as 2 to 3 years from adoption (Alexander et al., 2019; Becker et al., 2016; Freeman et al., 2017). Although this time-to-adoption estimate remained unchanged in the 2019 Horizon Report, the discussion of the educational affordances of mixed reality shifted each year, much like what Veletsianos (2016) described as a “negotiated relationship between the maturation of a technology/practice and the environment that surrounds it” (p. 9).

Although one leading use for VR HMDs is entertainment such as gaming, educators and others are exploring how these technologies may serve a useful purpose in the process of teaching and learning. Of particular interest are strategies to develop the knowledge and skills of educators in exploring and adapting to the continually changing technology landscape. To this end, the purpose of this study was to investigate the degree to which preservice teachers were able to integrate VR HMDs for educational purposes.

Review of Relevant Literature

Preservice teachers’ use of technology can be viewed from a number of perspectives that bring to light the complexity of means by which a preservice teacher develops skills, knowledge, and creativity to use technology for educational purposes effectively. The review of the literature builds on prior education-related VR technology research and perspectives on technology adoption and integration into teaching and learning.

VR in K-12 education

Immersive virtual reality in an HMD is a relatively new form of this technology and has not yet been the subject of extensive research in an educational context. Yet, VR HMDs share affordances and constraints with other emerging technologies, and these similarities can influence the ways in which VR HMDs are leveraged for educational purposes. Examples include technologies such as 3D virtual world environments for desktop computers or interactive physical spaces with digital images projected on walls, ceilings, and floors. These technologies are distinct from VR HMDs, yet aspects such as virtual space design or physical immersion may be similar. The educational purposes for which these technologies are used typically include simulations, gaming, and social interaction (Hew & Cheung, 2010; Merchant et al., 2014).

Virtual worlds are a different form of a 3-D digital environment that shares many commonalities with VR HMDs, although the mode of interaction is distinct. Whereas VR HMDs are immersive environments, virtual worlds are digital simulations of a space in which a user can navigate and interact, most commonly using a computer or mobile device to view the simulated space while navigating using a keyboard, game controller, or other methods.

These digitally simulated spaces are used for a variety of purposes such as gameplay, social interaction, exploration, and so forth. For example, Minecraft (http://www.minecraft.net) is a virtual world in which many interactive modes are available, including single-user exploration, virtual world construction and design, multiuser gameplay, or narrative story mode. The wide variety of possible activities has made virtual worlds appealing for educational purposes, and Minecraft is arguably the leading example of this.

Nebel et al. (2016) provided examples of the use of Minecraft in many different content areas and grade levels due to the adaptability of the virtual world as well as the modifiability of the software that led to the creation of an education-focused version (MinecraftEDU) and other modified versions. Minecraft is also an example of a virtual world that has since been made compatible with multiple VR HMD platforms (https://www.minecraft.net/en-us/vr/). As a result of ongoing development of this software to be compatible with multiple devices, a Minecraft user can interact within this virtual space in both immersive and nonimmersive technologies.

In a review of the empirical research prior to 2008 on the use of virtual worlds in educational contexts, Hew and Cheung (2010) asserted that “virtual worlds may be utilized as (1) communication spaces, (2) simulation of space (spatial), and (3) experiential spaces (‘acting’ on the world)” (p. 36). At that time, virtual worlds consisted largely of virtual spaces in which users could interact in real-time via text-based chat and were visually represented by an avatar. The educational purposes for which these were used in 2010 were generally modeled from real-world experiences that were designed within a virtual digital space.

For example, Hew and Cheung described a study by Ligorio and van Veen (2006) in which students (9-15 years old) from seven European schools participated in learning activities in a common virtual world. Ligorio and van Veen reported that students exhibited an increased awareness of other cultures when collaborating with students from those cultures within the virtual world. The benefits of cultural exchange experiences were also described by Jowallah et al. (2018), who discussed the affordances of VR HMDs for immersive interactions within social and culturally relevant storytelling.

More recently, Merchant et al. (2014) conducted a meta-analysis of the learning outcomes in K-12 and higher education for desktop-based virtual reality in which 13 variables were examined separately for simulations, games, and virtual worlds. In each of the three meta-analyses, a majority of studies found statistically significant results indicating increased learning outcomes when using games, simulations, and virtual worlds, although studies with negative effects or nonsignificant results were also present in each category. Merchant et al. concluded that VR game-based learning environments were more effective, with effect sizes approximately twice those found in studies of virtual worlds or simulations.

As with any emerging educational technology, a debate often arises as to whether the initial excitement and interest for the educational applications will lead to effective practice. In a 2014 Education Week article, Herold (2014) wrote about the promise of VR HMDs (specifically the Oculus Rift) for K-12 education and described a teacher using a prototype with K-12 students with special needs, as well as other potential educational uses. More recently, Herold and Molnar (2018) explained that “VR in education has been subject to hype cycles, with hyperbolic predictions of a classroom revolution falling flat in the real world” (para. 21).

Some of the common concerns with VR HMDs in the classroom include the high cost, limited availability of applications that are designed for education, and possible detrimental physical effects such as nausea or effects on eyesight. Low-cost VR HMD options such as Google Cardboard (https://vr.google.com/cardboard/), which use common mobile devices and a low-cost headset, are often discussed when considering the costs of VR experiences in K-12 schools. The issues concerning the physical effects, however, remain largely unknown.

Technology Adoption and Acceptance

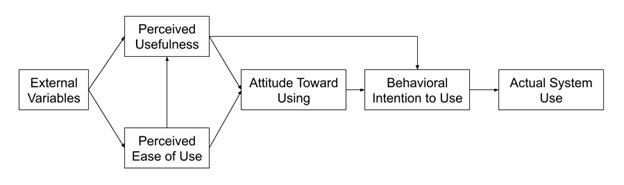

One widely discussed model of technology adoption, an individual’s intention to use technology for a specific purpose, is the Technology Acceptance Model (TAM; Davis, 1989; Davis et al., 1989). As depicted in Figure 2, the TAM describes the factors leading to the use of a technology system for a specific purpose. In this model, the actual system use is determined by the influence of external variables (e.g., user and system attributes), perceived ease of use, and perceived usefulness on a user’s attitude and intention to use the technology.

Figure 2 Depiction of the Technology Acceptance Model as Described by Davis et al., 1989

In a review of literature on the TAM, Marangunić and Granić (2015) described the ways that this model has been used to study many phenomena and that the theory itself has been revised and expanded by researchers over time. For example, Venkatesh and Davis (2000) tested and expanded the model using longitudinal data about four different technology systems and proposed an expanded TAM2 model to include additional variables. In the TAM2 model, the construct of perceived usefulness was influenced by subjective norms, job relevance, output quality, result demonstrability, and professional image. They additionally found that experience with a technology and the voluntariness of use exhibited an interaction effect on the intention to use a technology system.

Regarding technology acceptance in educational settings, the factors that influence perceptions of ease of use and usefulness are likely to be affected by different external variables than in other professional contexts. Bourgonjon et al. (2013) applied the TAM model when investigating the acceptance of game-based learning in educational settings by classroom teachers. That study found that the external factors influencing the acceptance of game-based learning included personal innovativeness and the number of other users who have accepted technology for a purpose. Additional factors of experience, learning opportunities, and subjective norms were also found to impact acceptance.

Aldunate and Nussbaum (2013) also explored teacher adoption of technology and concluded that teachers who were early adopters of technology, in general, were more likely to adopt a new technology regardless of the complexity of the technology that was being considered. In contrast, teachers who were not categorized to be early adopters were more likely to abandon a technology at some point in the acceptance process. This prior research suggests that innovation and early adoption are important factors in technology acceptance in educational settings; thus, the exploration of emerging technologies in teacher education programs can lead to insight into fostering these characteristics.

Technology Integration Into Teaching

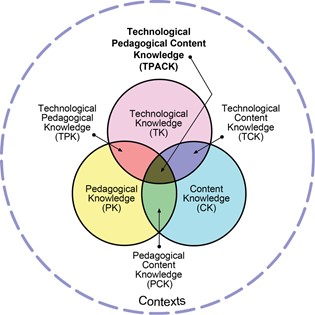

The factors that influence the use of technology are undoubtedly complex. The Technology, Pedagogy, and Content Knowledge (TPACK) framework was adapted to model the interaction of different forms of teacher knowledge that are necessary for meaningful technology integration. Mishra and Koehler (2006), along with others before them, asserted that the meaningful integration of technology into teaching and learning is largely influenced by a teacher’s knowledge within the domains of pedagogy, technology, and a content area. The TPACK model of teacher knowledge depicted in Figure 3 is an extension of Shulman’s (1986) pedagogical content knowledge model to include the domain of technological knowledge. The TPACK model exemplifies the interaction and intersection between the three knowledge domains that are engaged when a teacher seeks to leverage the affordances of technology to create meaningful learning experiences. The TPACK framework has served as a conceptual model in many research studies focusing on preservice and in-service teachers’ use of technology in teaching and learning.

Figure 3 Venn diagram depicting the TPACK framework. (Reprinted with permission from tpack.org)

Measuring Technology Integration

To understand the degree to which preservice teachers are able to develop teaching and learning activities that integrate VR HMDs, it is necessary to consider the level of technology integration with respect to various aspects of the learning environment. The Technology Integration Matrix (TIM; https://fcit.usf.edu/matrix/matrix/) is a rubric designed to capture the extent to which technology is integrated into an instructional setting (Allsopp et al., 2007). The TIM is grounded in the constructivist perspective, and it provides a systematic method and a standard language to describe and evaluate technology integration in a specific lesson (Welsh et al., 2011).

Structurally, the TIM is a rubric with five dimensions of meaningful learning environments (active, collaborative, constructive, authentic, and goal-directed). When using the TIM, each of these dimensions of the learning environment is rated according to one of five levels of technology integration (entry, adoption, adaptation, infusion, and transformation). The TIM yields a distinct ordinal rating of the level of technology integration for each of the five dimensions.

The TIM was developed at the Florida Center for Instructional Technology at the University of South Florida. It was originally conceptualized by experts in the field who used literature to inform the theoretical core of the framework. The TIM is currently in its third version, and its development has been an iterative process of refinement using data from sources including focus groups, public surveys, field tests, usability studies, classroom observations, and structured interviews with teachers. The TIM is a valid and reliable instrument, as established by expert review and both qualitative and quantitative research studies (Allsopp et al., 2007; Barbour, 2014; Meigs, 2010). (For more information on how TIM was developed, see Harmes et al., 2016).

The TIM framework and tools have been adopted by several state agencies and are included in the Instructional Technology resources at the Florida Department of Education (http://origin.fldoe.org/academics/standards/subject-areas/instructional-technology/) and the Technology Literacy resources at the Rhode Island Department of Education (http://www.ride.ri.gov/InstructionAssessment/InstructionalInitiativesResources/TechnologyLiteracy.aspx). In each case, the TIM framework and tools are used as resources for teachers and administrators who are evaluating technology integration and planning technology supports for their schools.

TIM tools have also been used for research purposes. For example, Liu et al. (2017) used the underlying TIM constructs to explore teachers’ confidence in, comfort with, and use of technology in the classroom. They found that teacher experience, access to technology, and the availability of high-quality support mediated the degree of technology integration into the classroom. In another research study, Barbour (2014) validated the use of the TIM as a measure of technology integration in the classroom and also as an indicator of student engagement. Finally, Kieran and Anderson (2014) researched the changes in preservice teachers’ planned use of student-centered technology and found that lesson planning skills improved significantly when preservice teachers were provided with the TIM as a guide for technology implementation.

The Kieran and Anderson (2014) study is important for two reasons. First, it highlighted the practical value of the TIM in a preservice teacher education context. In this case, the use of the TIM led to measurable improvements in lesson planning. Second, it demonstrated a high level of agreement between raters when multiple raters were used to score lesson plans. This agreement is a good indicator that the structure of the matrix is clear and that the dimensions and levels of the matrix are sufficiently described.

Purpose of the Study

Although TAM, TPACK, and TIM are multiple models for understanding and describing technology adoption and integration, emerging technology presents a unique challenge for preservice teachers. When technology is, as Veletsianos (2016) argued, in an evolving context, perceived as promising, yet not fully understood or researched, it is important to examine how these conditions may influence preservice teachers’ pedagogical planning to improve technology integration practices within a teacher education program.

The current state of VR HMDs as an emerging technology in education provided a unique opportunity to examine the ways in which preservice teachers use this technology in learning activities. During the period on which this study focused, VR HMDs evolved from a prototype technology into a commercially available one. As such, one particular area of interest was to examine the degree to which preservice teachers using different forms of VR HMDs would create learning activities with differing levels of technology integration. Such differences could reveal gaps in knowledge that could be addressed in the course activity design.

Additionally, the study context provided an opportunity to examine ratings of technology integration by students with varying academic experience. The course in which this study was conducted was required for preservice teachers, yet any student at the institution could enroll as well. The course enrollment included both preservice teachers at various stages in their academic careers (e.g., 1st year, 2nd year, etc.) as well as students majoring in noneducation fields (e.g., business, accounting, anthropology, etc.).

The differing academic experience of students in the course allowed for the comparison of preservice teachers who were at different stages in the teacher education program and a comparison of preservice teachers to noneducation majors. The latter comparison would reveal whether noneducation majors identified the educational affordances of VR HMDs differently than preservice teachers did. This insight would be useful when revising the VR HMD course activities in the future using pedagogical approaches such as interdisciplinary team-based projects that could leverage the diversity of content knowledge for the benefit of all students in the course.

Methodology

This study used a quasi-experimental, nonequivalent group design to compare student work in a course activity that focused on the integration of VR HMDs in K-12 learning environments. During the time of the study, this VR HMD course activity was used in a single course section per year during three consecutive academic years. As such, the study compared intact samples of students who were enrolled in three course sections that were each taught by the same instructor. The instructor of the course is also the lead researcher of this study. During the period that was studied, the course design (topics, sequence, assignments, etc.) of the selected course sections was consistent, with the primary differences in course content being the advancement of technologies such as VR HMDs.

Study Site

This study was conducted within the college of education at a midsized public university in the midwestern United States. The institution offers undergraduate and graduate teacher education programs that lead to K-12 teaching licensure. Approximately 1,400 students are enrolled in these areas, with 40% majoring in early childhood education (preK-3), 32% in adolescent young adult education (Grades 7-12), 17% in middle childhood education (Grades 4-9), and 12% in inclusive special education (K-12).

One aspect of this location that is of particular relevance to this study is the availability of an onsite lab facility. It provided training and access to educational technologies, including some that at the time were advanced or experimental. This lab was open daily and was located within the college of education building for use by students in these programs. Examples of technologies that were available included VR HMDs, 3D printers, interactive whiteboards, tablet computers, digital cameras, and game consoles. This onsite lab was used in this study as the location of demonstrations of VR HMDs to the participants in this study.

Course Context

This study examined lesson planning artifacts that were created by students during a 4-week unit within an undergraduate course (three semester credit hours) focusing on technology integration into K-12 education. The course was required for all students in the college’s teacher preparation programs and was an elective for students majoring in other areas. The specific course activities in which the data were collected occurred from Week 8 through Week 11 of a 15-week academic term.

The course unit in which these activities occurred was focused on technology integration into learning activities. The course topics in the weeks prior to this unit were structured to provide students with foundational knowledge and skills, such as learning and instructional design theories and models and perspectives on technology integration. It also included examples of technology integration models that included both the TIM and TPACK models.

The 4-week unit on which this study focused required students to explore the potential integration of specific technologies in a K-12 educational context. Because the target audience for the course is primarily 1st- and 2nd-year preservice teachers who were at the beginning of the teacher preparation sequence, we could not assume that the students were familiar with teaching practices such as formal lesson planning. For this reason, the course assignments required that the students create various lesson proposal ideas that could be fully developed into more formal lesson or unit plans in subsequent courses in the teacher preparation program.

During the course unit focusing on emerging technologies in K-12 education, each student was required to attend a demonstration of a VR HMD in the onsite technology lab. In the week following this demonstration, the students were asked to develop a proposal for a learning activity that integrated a VR HMD for a subject area and manner of their own choosing. Although the course was offered each semester, the VR HMD activities were used only in course sections that were offered in the spring semester during the 3-year period.

VR HMD Demonstration Sessions

Students were introduced to a VR HMD during a 30-minute demonstration session scheduled for each student at the start of the course unit. The VR HMD demonstration sessions were conducted by a graduate research assistant and designed to be consistent for each student in each section of the course. These demonstration sessions were structured to provide three specific experiences: (a) a short, preselected demonstration to acclimate the participant to the VR HMD; (b) a demonstration of a virtual environment simulation in which the participant could freely explore; and (c) a VR game in which the participant could navigate using an input device (e.g., game controller).

Since data collection occurred over a 3-year period, both the VR HMD devices and the VR experiences available to participants changed over time. A list of VR experiences used in the demonstration sessions is provided in Table 1. During the 1st year, the Oculus Rift Development Kit 2 (https://youtu.be/OlXrjTh7vHc) was used for the VR demonstration sessions. The Samsung Gear VR (https://www.samsung.com/us/support/owners/product/gear-vr-2015) was used in the 2nd year, and the Oculus Rift VR headset (https://youtu.be/EiY2xYHzcus) was used during the 3rd year of the study. Because users of VR HMDs can experience motion sickness, eye strain, or other side-effects, the participants were permitted to end or pause the VR demonstration session at any time, although no participants chose to do so.

Table 1 List of VR Experiences Used in VR HMD Demonstration Sessions by Year

| Year | Experience | Title and URL |
|---|---|---|
| Year 1 | Experience 1 | SightLine: The Chair https://store.steampowered.com/app/412360/SightLineVR/ |
| Year 1 | Experience 2 | Titans of Space https://youtu.be/dL3eBHPKGoU |
| Year 1 | Experience 3 | ChickenWalk https://youtu.be/esJ46uJpQwo |
| Year 2 | Experience 1 | Jurassic World https://www.oculus.com/experiences/gear-vr/1096547647026443 |
| Year 2 | Experience 2 | Titans of Space https://youtu.be/dL3eBHPKGoU |
| Year 2 | Experience 3 | Smash Hit https://www.oculus.com/experiences/gear-vr/942006482530009/?locale=en_US |
| Year 3 | Experience 1 | Introduction to Virtual Reality https://www.oculus.com/experiences/rift/1006887936048510 |
| Year 3 | Experience 2 | Titans of Space Plus https://youtu.be/dL3eBHPKGoU |
| Year 3 | Experience 3 | Lucky’s Tale https://www.oculus.com/experiences/rift/909129545868758/ |

Participants

The participants of this study were undergraduate students enrolled in different sections of a course focusing on technology and media literacy in educational contexts over a 3-year period. Table 2 shows the course enrollment as compared to the number of participants. Although no students in the course declined to participate in the study, some students did not complete the VR HMD assignment. The majority of students in the course in each year were pursuing a teacher education major. The course also included noneducation majors who were enrolled in the course as a part of an elective course sequence that focused on technology in education.

Table 2 Summary of Participants and Course Enrollment by Major

| Class Standing | Year 1: Teacher Educ. | Year 1: Other | Year 2: Teacher Educ. | Year 2: Other | Year 3: Teacher Educ. | Year 3: Other |
|---|---|---|---|---|---|---|
| Freshman | 1 | 0 | 1 | 0 | 5 | 0 |
| Sophomore | 16 | 0 | 5 | 0 | 13 | 3 |
| Junior | 8 | 2 | 8 | 2 | 3 | 1 |
| Senior | 3 | 5 | 2 | 12 | 3 | 4 |
| Total Participants | 28 | 7 | 16 | 14 | 24 | 8 |
| Total Course Enrollment | 30 | 10 | 20 | 16 | 26 | 10 |

Instruments

In fall 2018, both the TIM-LP version of the TIM (Florida Center for Instructional Technology, n.d.) rubric and the Technology Integration Assessment Rubric (Harris et al., 2010) were tested to evaluate the lesson proposals. In a trial of the TIM and the Technology Integration Assessment Rubric, the extended descriptors that were available for the TIM were helpful in clearly assessing the level of technology integration. Although the Technology Integration Assessment Rubric is a valuable and useful tool, two rubric criteria of “Technology Selection” and “Fit” were not applicable to this study because the participants were not given a choice of the technology for which they developed a lesson proposal. As such, the TIM rubric was selected as the primary instrument to measure the level of technology integration that was evident in the lesson proposals.

Various web-based evaluative tools have been developed to use the TIM in different contexts. The TIM-LP version was used in this study and provided a systematic method of profiling the use of technology in a lesson plan. The TIM-LP is a modified version of the TIM, in which the descriptor for each cell uses wording that refers specifically to a lesson plan. Because the TIM is primarily designed to be used in a school setting to examine technology integration within a classroom, school, or district, the TIM-LP is designed to provide information to guide future lesson planning and school-based efforts to develop technology supports for teachers. The use of the TIM-LP to evaluate lesson planning artifacts for preservice teachers, however, is functionally similar to the use of the TIM-LP in a school setting.

Data Collection

The primary data for this study were derived from student work products from course assignments. At the beginning of each term, participants were informed that items submitted throughout the duration of the semester could be used for research purposes. Additionally, a letter of informed consent was presented to students and a link to an opt-out process was provided in the demographic survey that was provided after the VR demonstration sessions. The procedures for data collection were reviewed and approved by the appropriate Institutional Review Board and included the following data exported from the learning management system after the conclusion of the course:

- Lesson proposal artifacts that focused specifically on integrating a virtual reality head-mounted display (HMD) in a K-12 classroom-based activity, and

- Survey responses that included demographic information regarding the students’ current class standing and major (identified as either “Teacher Education” or “Noneducation major”).

Lesson proposal artifacts from course assignments and surveys were coded to include a unique ID number that was assigned to each student and used to match the lesson proposal artifacts with demographic survey responses. After the rating of each lesson proposal artifact was complete, the ratings were coded as numeric values and imported into SPSS 24 for further statistical analysis.

The TIM-LP rubric was used as an analytical rubric (Perlman, 2002) that yielded a score for each lesson planning artifact on each TIM criterion. The two lead researchers trained a research assistant to conduct the scoring of all the lesson proposals using the TIM-LP with the extended descriptors available from the TIM website (https://fcit.usf.edu/matrix/matrix/). To ensure reliability in the scoring procedure, a random sample of 10 proposals was selected and scored by a research assistant and the lead researchers. A percent agreement consensus estimate (Stemler, 2004) exceeding 90% was achieved; thus, the remaining lesson proposals were scored using the TIM-LP rubric.
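As an illustration of the percent agreement consensus estimate mentioned above, the following minimal Python sketch computes the share of items on which two raters assigned the same rating. It is not the procedure or software used in this study. The function name `percent_agreement` and the ratings shown are hypothetical and are not study data. The sketch also assumes a simple two-rater comparison, whereas the study compared a research assistant with the lead researchers.

```python
def percent_agreement(ratings_a, ratings_b):
    """Return the proportion of items on which two raters gave the same rating."""
    if len(ratings_a) != len(ratings_b):
        raise ValueError("Both raters must score the same set of items.")
    if not ratings_a:
        raise ValueError("At least one rated item is required.")
    matches = sum(1 for a, b in zip(ratings_a, ratings_b) if a == b)
    return matches / len(ratings_a)


# Hypothetical ratings for 10 items (illustrative only, not study data).
rater_1 = [1, 2, 2, 1, 3, 2, 1, 1, 2, 3]
rater_2 = [1, 2, 2, 1, 3, 2, 1, 2, 2, 3]

agreement = percent_agreement(rater_1, rater_2)
print(f"Percent agreement: {agreement:.0%}")
```

Percent agreement is simple to interpret, but it does not correct for agreement that would occur by chance.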

Data for the TIM ratings were entered into a spreadsheet to facilitate data analysis. For each dimension of a meaningful learning environment (i.e., active, collaborative, constructive, authentic, and goal-directed), the rater entered a level of technology integration (i.e., entry, adoption, adaptation, infusion, or transformation). The values for the level of technology integration were coded as follows: Entry = 1, Adoption = 2, Adaptation = 3, Infusion = 4, and Transformation = 5. The result of this process was a dataset that included five ratings indicating the level of technology integration across each of the dimensions of the learning environment for each lesson proposal that was submitted during the study period.
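The coding scheme described above can be sketched in a few lines of Python. This is an illustration only; the study used a spreadsheet and SPSS 24, and the names `TIM_LEVEL_CODES`, `TIM_DIMENSIONS`, and `code_proposal`, along with the example ratings, are hypothetical.

```python
# Numeric codes for the five TIM levels, as listed in the text.
TIM_LEVEL_CODES = {
    "Entry": 1,
    "Adoption": 2,
    "Adaptation": 3,
    "Infusion": 4,
    "Transformation": 5,
}

# The five TIM dimensions of a meaningful learning environment.
TIM_DIMENSIONS = (
    "Active",
    "Collaborative",
    "Constructive",
    "Authentic",
    "Goal-Directed",
)


def code_proposal(ratings):
    """Convert one proposal's level names into numeric codes, one per dimension."""
    missing = [d for d in TIM_DIMENSIONS if d not in ratings]
    if missing:
        raise ValueError(f"Missing ratings for: {', '.join(missing)}")
    return {d: TIM_LEVEL_CODES[ratings[d]] for d in TIM_DIMENSIONS}


# Hypothetical ratings for a single lesson proposal (not study data).
example_ratings = {
    "Active": "Adaptation",
    "Collaborative": "Entry",
    "Constructive": "Adoption",
    "Authentic": "Adoption",
    "Goal-Directed": "Entry",
}

coded = code_proposal(example_ratings)
print(coded)
```

Because the codes are ordinal, they preserve the order of the levels but do not imply equal spacing between them.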

Data Analysis

A series of comparisons were conducted to determine the degree to which TIM ratings of lesson proposals differed according to the type of VR HMD that was used and according to student characteristics (e.g., academic year and major). For each of these analyses, the distribution of ratings across TIM levels (i.e., Entry, Adoption, Adaptation, Infusion, and Transformation) was compared for each TIM learning environment dimension (Active, Collaborative, Constructive, Authentic, and Goal-Directed).
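A distribution of this kind is a cross-tabulation of group by TIM level, expressed as a percentage of each group's total. The sketch below shows one way to build it in Python; the field names (`year`, `Active`) and the six sample records are hypothetical and are not drawn from the study data.

```python
from collections import Counter

LEVELS = ("Entry", "Adoption", "Adaptation", "Infusion", "Transformation")


def level_distribution(records, group_key, dimension):
    """Return {group: {level: percent of that group's ratings}} for one dimension."""
    counts = {}
    for record in records:
        counts.setdefault(record[group_key], Counter())[record[dimension]] += 1
    table = {}
    for group, counter in counts.items():
        total = sum(counter.values())
        table[group] = {
            level: round(100.0 * counter[level] / total, 1) for level in LEVELS
        }
    return table


# Hypothetical records for illustration only.
records = [
    {"year": "Year 1", "Active": "Entry"},
    {"year": "Year 1", "Active": "Adoption"},
    {"year": "Year 1", "Active": "Adaptation"},
    {"year": "Year 1", "Active": "Adoption"},
    {"year": "Year 2", "Active": "Entry"},
    {"year": "Year 2", "Active": "Adoption"},
]

distribution = level_distribution(records, "year", "Active")
for group, percentages in distribution.items():
    print(group, percentages)
```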

Statistical analyses were selected to compare the distribution of the TIM ratings for a series of comparisons. A two-way contingency table analysis with a Chi-square test was conducted to examine differences in ratings between years in which the VR HMD model differed. A similar two-way contingency table analysis with a Chi-square test was used to address the second research question to compare TIM ratings by academic class standing (lower- vs. upper-class students). Both analyses included only participants who were majoring in an academic program leading to K-12 teaching licensure. A third two-way contingency table analysis with a Chi-square test included participants of all majors and compared TIM ratings of VR lesson proposals between teacher education majors and noneducation majors.
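For readers who wish to reproduce this type of analysis outside SPSS, the following Python sketch implements a textbook Pearson Chi-square test of independence using only the standard library. It is an illustration under stated assumptions, not the procedure SPSS applies internally; the function names and the example counts are hypothetical.

```python
import math


def chi_square_sf(statistic, df):
    """Upper-tail probability for a chi-square statistic with integer df >= 1."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    half = statistic / 2.0
    # Closed-form starting points: df = 2 (even case) or df = 1 (odd case).
    if df % 2 == 0:
        p, k = math.exp(-half), 2
    else:
        p, k = math.erfc(math.sqrt(half)), 1
    # Step up two degrees of freedom at a time:
    # Q(k + 2) = Q(k) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1)
    while k < df:
        p += half ** (k / 2.0) * math.exp(-half) / math.gamma(k / 2.0 + 1.0)
        k += 2
    return p


def chi_square_independence(observed):
    """Pearson chi-square test of independence for a table given as rows of counts."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    if min(row_totals) == 0 or min(col_totals) == 0:
        raise ValueError("Remove empty rows or columns before testing.")
    total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(observed):
        for j, count in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            statistic += (count - expected) ** 2 / expected
    df = (len(observed) - 1) * (len(observed[0]) - 1)
    return statistic, df, chi_square_sf(statistic, df)


# Hypothetical counts (rows: two groups; columns: Entry, Adoption, Adaptation).
observed = [[10, 15, 5], [20, 5, 5]]
statistic, df, p_value = chi_square_independence(observed)
print(f"chi-square({df}) = {statistic:.2f}, p = {p_value:.3f}")
```

The degrees of freedom follow from the table shape, (rows - 1) x (columns - 1), which is why rating levels with no observations in any group need to be removed before the test is run.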

Results

Changes in TIM Ratings by Year

Different VR technologies were introduced during each of the years in which the course was offered because different technologies became publicly available. As shown in Table 3, the TIM rating level across all dimensions was limited to Entry, Adoption, or Adaptation. None of the lesson proposals focused on VR technology were rated at the levels of Infusion or Transformation during any of the three academic years. In general, the highest frequency of higher TIM ratings was observed in the first academic year, when a prototype VR technology (Oculus Rift Development Kit 2) was used. Ratings on all TIM dimensions were largely limited to Entry or Adoption levels for Year 2 and Year 3, when the Samsung GearVR and Oculus Rift HMD, respectively, were used. After Year 1, ratings above the Adoption level were observed only in the Active dimension in Year 2.

Table 3 Comparisons of Ratings of VR Technology Lessons TIM Level by Year for Participants in a Teacher Preparation Program

| TIM Dimension / Year | n | Entry (%) | Adoption (%) | Adaptation (%) | Chi-Square Test of Independence |
|---|---|---|---|---|---|
| Active | | | | | χ²(4) = 11.7[a], n = 66, p = .02 |
| Year 1 | 28 | 32.1 | 35.7 | 32.1 | |
| Year 2 | 15 | 33.3 | 60.0 | 6.7 | |
| Year 3 | 23 | 43.5 | 56.5 | -- | |
| Collaborative | | | | | χ²(2) = 0.17, n = 66, p = .92 |
| Year 1 | 28 | 53.6 | 46.4 | -- | |
| Year 2 | 15 | 60.0 | 40.0 | -- | |
| Year 3 | 23 | 56.5 | 43.5 | -- | |
| Constructive | | | | | χ²(4) = 9.3[a], n = 66, p = .053 |
| Year 1 | 28 | 46.4 | 35.7 | 17.9 | |
| Year 2 | 15 | 33.3 | 66.7 | -- | |
| Year 3 | 23 | 43.5 | 56.5 | -- | |
| Authentic | | | | | χ²(4) = 3.3[a], n = 66, p = .51 |
| Year 1 | 28 | 21.4 | 71.4 | 7.1 | |
| Year 2 | 15 | 26.7 | 73.3 | -- | |
| Year 3 | 23 | 17.4 | 82.6 | -- | |
| Goal-Directed | | | | | χ²(4) = 3.7[a], n = 66, p = .45 |
| Year 1 | 28 | 78.6 | 17.9 | 3.6 | |
| Year 2 | 15 | 86.7 | 13.3 | -- | |
| Year 3 | 23 | 95.7 | 4.3 | -- | |

Note. The TIM rubric includes five levels of technology integration (Entry, Adoption, Adaptation, Infusion, and Transformation). In the data for this study, however, no lesson proposals were rated higher than Adaptation; thus, the two higher levels were omitted from this table. “--” indicates that no ratings were observed at the level (column) for that academic term (row). [a] SPSS reported contingency table cells with an expected count less than 5.

The ratings varied for each TIM criterion. Adoption was the most frequent rating for the Active and Authentic dimensions, and Entry was the most frequent rating for the Collaborative and Goal-Directed dimensions. Ratings of the Constructive dimension were mixed: Entry was the most frequent rating in Year 1, whereas the majority of ratings in Years 2 and 3 were at the Adoption level. When the ratings were examined for statistically significant differences by year, only the distribution of Active ratings differed significantly, χ²(4) = 11.7, p = .02. Notably, however, the contingency table analyses may have been affected in some instances by a low frequency of observations at some levels.
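The caution about low-frequency cells can be made concrete by computing the expected counts under independence. The Python sketch below reconstructs cell counts for the Active dimension from the group sizes and rounded percentages in Table 3; these reconstructed counts are an assumption, because the raw frequencies are not reported, and the function names are hypothetical.

```python
def reconstruct_counts(n, percentages):
    """Estimate cell counts from a group size and rounded row percentages."""
    return [round(n * pct / 100.0) for pct in percentages]


def expected_counts(observed):
    """Expected counts under independence for a two-way table of counts."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    total = sum(row_totals)
    return [[r * c / total for c in col_totals] for r in row_totals]


# Active dimension, Table 3: (n, [Entry %, Adoption %, Adaptation %]).
# Cells shown as "--" in the table are entered as 0.0.
table3_active = {
    "Year 1": (28, [32.1, 35.7, 32.1]),
    "Year 2": (15, [33.3, 60.0, 6.7]),
    "Year 3": (23, [43.5, 56.5, 0.0]),
}

observed = [reconstruct_counts(n, pcts) for n, pcts in table3_active.values()]
expected = expected_counts(observed)

# Pearson chi-square statistic from the reconstructed table.
statistic = sum(
    (o - e) ** 2 / e
    for obs_row, exp_row in zip(observed, expected)
    for o, e in zip(obs_row, exp_row)
)
# Number of cells whose expected count falls below the conventional threshold of 5.
small_cells = sum(e < 5 for row in expected for e in row)

print(f"chi-square = {statistic:.2f}; cells with expected count < 5: {small_cells}")
```

Under this reconstruction, the statistic is approximately 11.7, in line with the reported value, and three of the nine cells (all in the Adaptation column) have expected counts below 5.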

Comparing TIM Ratings by Academic Experience

The first analysis focused specifically on the ratings of students who were pursuing a degree in a teacher preparation program. It was also important to consider how the TIM ratings of lesson proposals for VR technology differed according to the academic experience of the student. To this end, two analyses were conducted to compare the ratings of VR lesson proposals according to academic major (teacher education majors vs. noneducation majors) as well as academic class standing (lower- vs. upper-class students).

Although evaluating these differences separately for each academic year that used a different version of the VR headset would have been preferable, data were insufficient to support an analysis that was separated by academic year. For example, in Years 1 and 2, no freshman or sophomore participants were enrolled from majors other than a teacher education program. As such, these analyses were conducted using the ratings for all study participants in all three academic years.

Table 4 displays the frequency distribution (shown as a percentage of the row total) of ratings for each TIM category. The data for this analysis were limited to study participants who were pursuing a teacher education major, and the academic standings were reduced to compare 1st- or 2nd-year students to 3rd- or 4th-year students. The analysis revealed that the frequency of Entry-level ratings was higher for students who were at a more advanced stage in their academic program in all TIM dimensions except Goal-Directed. The highest TIM level observed was Adaptation, although ratings at this level were consistently the least frequently occurring and were not observed in the Collaborative dimension. Only in the Collaborative dimension, however, was the distribution of ratings statistically significant. In that dimension, the majority of lesson proposals for 1st- and 2nd-year students were rated at the Adoption level, whereas the majority of lesson proposals for 3rd- and 4th-year students were rated at the Entry level.

Table 4 Chi-Square Analysis and Comparison of TIM Ratings of VR Lesson Proposals by Academic Class for Students Pursuing a Teacher Education Major

| TIM Dimension / Class Standing | n | Entry (%) | Adoption (%) | Adaptation (%) | Chi-Square Test of Independence |
|---|---|---|---|---|---|
| Active | | | | | χ²(2) = 1.47, p = .48 |
| 1st/2nd year | 39 | 30.8 | 51.3 | 17.9 | |
| 3rd/4th year | 27 | 44.4 | 44.4 | 11.1 | |
| Collaborative | | | | | χ²(2) = 3.80, p = .05 |
| 1st/2nd year | 39 | 46.2 | 53.8 | -- | |
| 3rd/4th year | 27 | 70.4 | 29.6 | -- | |
| Constructive | | | | | χ²(2) = 0.08, p = .96 |
| 1st/2nd year | 39 | 41.0 | 51.3 | 7.7 | |
| 3rd/4th year | 27 | 44.4 | 48.1 | 7.4 | |
| Authentic | | | | | χ²(2) = 5.40, p = .07 |
| 1st/2nd year | 39 | 15.4 | 84.6 | -- | |
| 3rd/4th year | 27 | 29.6 | 63.0 | 7.4 | |
| Goal-Directed | | | | | χ²(2) = 2.36, p = .31 |
| 1st/2nd year | 39 | 89.7 | 7.7 | 2.6 | |
| 3rd/4th year | 27 | 81.5 | 18.5 | -- | |
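When a rating level has no observations in either group, as with Adaptation in the Collaborative dimension, that column contributes nothing to the test and the effective table is smaller. The Python sketch below illustrates this with counts reconstructed from the Table 4 percentages; the counts are an assumption, because raw frequencies are not reported, and the function names are hypothetical.

```python
import math


def drop_empty_columns(observed):
    """Remove rating levels (columns) that received no observations in any group."""
    keep = [j for j, col in enumerate(zip(*observed)) if sum(col) > 0]
    return [[row[j] for j in keep] for row in observed]


def pearson_chi_square(observed):
    """Return the Pearson chi-square statistic and its degrees of freedom."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    total = sum(row_totals)
    statistic = sum(
        (count - r * c / total) ** 2 / (r * c / total)
        for row, r in zip(observed, row_totals)
        for count, c in zip(row, col_totals)
    )
    df = (len(observed) - 1) * (len(observed[0]) - 1)
    return statistic, df


# Collaborative counts reconstructed from Table 4 (an assumption):
# 39 x (46.2%, 53.8%, --) and 27 x (70.4%, 29.6%, --).
observed = [[18, 21, 0], [19, 8, 0]]
trimmed = drop_empty_columns(observed)
statistic, df = pearson_chi_square(trimmed)

# For df = 1 the upper-tail probability reduces to erfc(sqrt(x / 2)).
p_value = math.erfc(math.sqrt(statistic / 2.0))
print(f"chi-square({df}) = {statistic:.2f}, p = {p_value:.3f}")
```

Under this reconstruction the trimmed table is 2 x 2, so the statistic of about 3.80 carries 1 degree of freedom, and the resulting p value of roughly .05 agrees with the p value reported for the Collaborative dimension. Readers comparing this result with the degrees of freedom printed in the table may wish to keep the empty column in mind.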

The data collected regarding the participants in the study included one variable that indicated whether the participant was pursuing a major in a teacher education program. As shown in Table 5, 66 participants were majoring in a teacher education program, and 27 students were majoring in a noneducation field. When examining the ratings for these two groups, the distribution of ratings was similar throughout each TIM category.

The highest and lowest frequency of ratings in the categories of Collaborative, Authentic, and Goal-Directed learning was the same for both education and noneducation majors. In the Active and Constructive learning categories, however, the most frequent rating of work by education majors was Adoption, compared with Entry for noneducation majors. The Chi-square analysis indicated that the differences in these distributions were not statistically significant.

Table 5 Chi-Square Analysis and Comparison of TIM Ratings by Academic Major for VR Lesson Proposals

| TIM Dimension | Major | n | Entry (%) | Adoption (%) | Adaptation (%) | Chi-Square Test of Independence |
|---|---|---|---|---|---|---|
| Active | | | | | | χ²(2) = 2.90, p = .23 |
| | Education | 66 | 36.4 | 48.5 | 15.2 | |
| | Noneducation | 27 | 55.6 | 33.3 | 11.1 | |
| Collaborative | | | | | | χ²(2) = .89, p = .35 |
| | Education | 66 | 56.1 | 43.9 | -- | |
| | Noneducation | 27 | 66.7 | 33.3 | -- | |
| Constructive | | | | | | χ²(2) = .27, p = .87 |
| | Education | 66 | 42.4 | 50.0 | 7.6 | |
| | Noneducation | 27 | 48.1 | 44.4 | 7.4 | |
| Authentic | | | | | | χ²(2) = .82, p = .67 |
| | Education | 66 | 21.2 | 75.8 | 3.0 | |
| | Noneducation | 27 | 29.6 | 66.7 | 3.7 | |
| Goal-Directed | | | | | | χ²(2) = .45, p = .80 |
| | Education | 66 | 86.4 | 12.1 | 1.5 | |
| | Noneducation | 27 | 85.2 | 11.1 | 3.7 | |

Note. “--” indicates that no observations were made at the TIM level indicated in the column heading.

Discussion

Veletsianos (2016) described the shifting environment of emerging technologies in education and argued that both practices and technologies mature and evolve over time. By examining the work of preservice teachers as they were asked to integrate a prototype VR HMD and then two commercially available VR HMDs, we sought to assess the products of this work while these devices were rapidly evolving. As such, this study adds to the research regarding VR HMDs in education and, specifically, the ways preservice teachers were able to integrate different forms of this technology into educational practice.

Additionally, as one of only a few studies that have used the TIM in research in preservice teacher education, this study provides additional evidence of the value of that instrument for the course and as a research tool. The results of this study are most useful to practitioners and researchers in teacher education who are seeking to develop or enhance similar course activities using emerging technologies such as VR HMDs, in which preservice teachers are observing or creating learning activities.

Although Veletsianos (2016) argued that newness is not a defining quality of emerging technology, higher levels of technology integration were observed in this study during the first year that VR HMDs (specifically the Oculus Rift DK2) were used. Statistically significant differences were detected in the distribution of ratings in the Active TIM dimension, which describes the level to which learners are “using technology as a tool rather than passively receiving information.”

Although other differences were not found to be statistically significant, the highest levels of technology integration for all TIM criteria were observed when the technology was not yet commercially available. The exact causal relationship between the newness of the technology and the student performance in the course activity remains unclear. Moving forward, however, we see a need for the practice of introducing VR HMD technology to preservice teachers to continue to evolve along with the technology.

The approach used in this study was similar to that of Kieran and Anderson (2014), who examined the work of junior and senior-level undergraduates, in that the TIM was introduced to students during the course as a model for planning learning activities involving technology and also used as a research tool. The results of this study are also similar in that the lesson planning artifacts were mostly rated at the Entry and Adoption levels. The results of this study, however, revealed that 1st- and 2nd-year students had a higher level of technology integration on the Active, Collaborative, and Authentic TIM criteria than did 3rd- and 4th-year students. This result suggests the possibility that unique characteristics related to academic experience in this teacher education program may have impacted students' knowledge of or attitudes toward technology integration.

Limitations

The findings of this research should be viewed within the context of several limitations. The sample of the study was drawn from the students enrolled in a course during three different academic years in which these emerging technology activities were included. This approach limited the number of participants in the study, and groups were not equivalent in terms of academic level, major, or other characteristics, such as technological, pedagogical, and content knowledge. Also, although the same instructor taught the course each year using a consistent course design, differences in the course instruction may have existed that influenced the results.

Last, the VR HMD technology that was used in each year of the course differed. Although this change was of interest to the study, the software available for each version of the VR HMD was different; thus, the demonstration sessions changed each year. These limitations impact the degree to which the participants were representative of the population of students at this or similar institutions as well as the types of analysis that could be conducted with the data.

Recommendations for Future Research

With respect to research on the use of VR HMDs in preservice teacher education, future studies would benefit from a more robust examination of contextual factors that influence technology integration. For example, this study examined preservice teachers’ use of VR HMDs in a course that was focused on K-12 educational technology. If this technology were used in a course focused on teaching methods for a specific content area such as science or social studies, perhaps preservice teachers would then envision learning beyond the entry and adoption levels of technology integration.

An additional consideration for future research is the use of the TIM as a research and evaluative tool. This study has demonstrated the use of the TIM in a preservice teacher education context much like the study by Kieran and Anderson (2014). Our process for using the TIM ratings, however, differed somewhat from the previous study. We examined each criterion (e.g., Active, Collaborative, etc.) as a separate ordinal measurement, whereas Kieran and Anderson used the TIM ratings as an ordinal measure and also calculated an overall mean rating of all TIM criteria that was then used in the statistical analysis. Given the structure of the TIM rubric, we suggest that future use of the TIM ratings as five separate ordinal measures is most appropriate and consistent with the development of the TIM as described by Harmes et al. (2016).
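A small illustration may clarify why the two approaches can lead to different conclusions. In the Python sketch below, two hypothetical lesson proposals (not study data) receive the same overall mean rating even though their ratings differ on four of the five TIM dimensions; the variable names are illustrative only.

```python
DIMENSIONS = ("Active", "Collaborative", "Constructive", "Authentic", "Goal-Directed")

# Hypothetical coded ratings (1 = Entry ... 5 = Transformation); not study data.
proposal_a = {"Active": 3, "Collaborative": 1, "Constructive": 3, "Authentic": 2, "Goal-Directed": 1}
proposal_b = {"Active": 2, "Collaborative": 2, "Constructive": 2, "Authentic": 2, "Goal-Directed": 2}

# Overall mean across the five criteria (the aggregate approach).
mean_a = sum(proposal_a.values()) / len(proposal_a)
mean_b = sum(proposal_b.values()) / len(proposal_b)

# Dimension-by-dimension comparison (the separate ordinal measures approach).
differing = [d for d in DIMENSIONS if proposal_a[d] != proposal_b[d]]

print(f"Mean ratings: {mean_a:.1f} vs. {mean_b:.1f}")
print(f"Dimensions with different ratings: {differing}")
```

The aggregate mean treats the two proposals as equivalent, whereas the separate measures preserve the differences in how each dimension of the learning environment was addressed.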

Implications for Teacher Education

With respect to the specific course in which the VR HMDs were used, opportunities exist to improve the course activities to foster creative thinking that will lead to a broader range of technology integration. We found the TIM to be a valuable guide for preservice teachers in planning technology-enhanced learning activities, yet it was used in this study as a summative assessment instrument. In the future, we plan to use the TIM as a self-evaluation and peer-evaluation tool through a process of reflection and revision of lesson planning work similar to the Kieran and Anderson (2014) study.

Additionally, we see the criteria of the TIM as a framework for the types of learning activities that preservice teachers must experience themselves; thus, the technology demonstration session element of the course will be refined to demonstrate not only the features of the technology but also pedagogical approaches such as the social and cultural storytelling experiences described by Jowallah et al. (2018).

Foulger et al. (2017) articulated the challenges of teacher education programs to create technology experiences for preservice teachers. They asserted that “teacher educators must establish new roles for themselves and be held accountable for providing all teacher candidates with equitable, high-quality technology experiences in the courses they teach” (p. 417). This study demonstrated how preservice teachers were introduced to a technology that, when first introduced, was not yet available for purchase. Experiences with emerging technologies are a unique opportunity for teacher education programs to leverage the resources available in higher education, such as the technology lab used in this study, to advance the integration of technology by preservice teachers as they prepare to enter the field.

References

Aldunate, R., & Nussbaum, M. (2013). Teacher adoption of technology. Computers in Human Behavior, 29(3), 519–524.

Alexander, B., Ashford-Rowe, K., Barajas-Murphy, N., Dobbin, G., Knott, J., McCormack, M., Pomerantz, J., Seilhamer, R., & Weber, N. (2019). EDUCAUSE Horizon report: 2019 higher education edition. EDUCAUSE. https://library.educause.edu/-/media/files/library/2019/4/2019horizonreport.pdf

Allsopp, M. M., Hohlfeld, T., & Kemker, K. (2007, November). The Technology Integration Matrix: The development and field-test of an internet based multi-media assessment tool for the implementation of instructional technology in the classroom [Paper presentation]. Florida Educational Research Association Annual Meeting, Tampa, FL.

Barbour, D. (2014). The technology integration matrix and student engagement: A correlational study (Unpublished doctoral dissertation). Northcentral University.

Becker, S. A., Freeman, A., Hall, C. G., Cummins, M., & Yuhnke, B. (2016). NMC/CoSN Horizon report: 2016 K-12 edition. The New Media Consortium. https://library.educause.edu/~/media/files/library/2017/11/2016hrk12EN.pdf

Bourgonjon, J., De Grove, F., De Smet, C., Van Looy, J., Soetaert, R., & Valcke, M. (2013). Acceptance of game-based learning by secondary school teachers. Computers & Education, 67, 21–35.

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340.

Davis, F. D., Bagozzi, R. P., & Warshaw, P. R. (1989). User acceptance of computer technology: A comparison of two theoretical models. Management Science, 35(8), 982–1003.

Florida Center for Instructional Technology. (n.d.). The technology integration matrix. https://fcit.usf.edu/matrix/matrix/

Foulger, T. S., Graziano, K. J., Schmidt-Crawford, D., & Slykhuis, D. A. (2017). Teacher educator technology competencies. Journal of Technology and Teacher Education, 25(4), 413–448.

Freeman, A., Adams Becker, S., Cummins, M., Davis, A., & Hall Giesinger, C. (2017). NMC/CoSN Horizon report: 2017 K-12 edition. The New Media Consortium. https://library.educause.edu/~/media/files/library/2017/11/2017hrk12EN.pdf

Harmes, J. C., Welsh, J. L., & Winkelman, R. J. (2016). A framework for defining and evaluating technology integration in the instruction of real-world skills. In S. Ferrara, Y. Rosen, & M. Tager (Eds.), Handbook of research on technology tools for real-world skill development (pp. 137–162). IGI Global.

Harris, J., Grandgenett, N., & Hofer, M. (2010). Testing a TPACK-based technology integration assessment rubric. In D. Gibson & B. Dodge (Eds.), Proceedings of SITE 2010–Society for Information Technology & Teacher Education International Conference (pp. 3833–3840). Association for the Advancement of Computing in Education.

Herold, B. (2014). Oculus Rift fueling new vision for virtual reality in K-12. Education Week, 34(2), 10–11.

Herold, B., & Molnar, M. (2018). Virtual reality in K-12 raising high hopes and serious concerns. Education Week, 37(19). https://www.edweek.org/ew/articles/2018/02/08/virtual-reality-for-learning-raises-high-hopes.html

Hew, K. F., & Cheung, W. S. (2010). Use of three-dimensional (3-D) immersive virtual worlds in K-12 and higher education settings: A review of the research. British Journal of Educational Technology: Journal of the Council for Educational Technology, 41(1), 33–55.

Johnson, L., Becker, S. A., Estrada, V., & Freeman, A. (2015). NMC Horizon report: 2015 K-12 edition. The New Media Consortium. https://library.educause.edu/~/media/files/library/2017/11/2015hrk12EN.pdf

Jowallah, R., Bennett, L., & Bastedo, K. (2018). Leveraging the affordances of virtual reality systems within K-12 education. Distance Learning, 15(2), 15–26.

Kieran, L., & Anderson, C. (2014). Guiding preservice teacher candidates to implement student-centered applications of technology in the classroom. In M. Searson & M. Ochoa (Eds.), Proceedings of SITE 2014–Society for Information Technology & Teacher Education International Conference (pp. 2414–2421). Association for the Advancement of Computing in Education.

Ligorio, M., & van Veen, K. (2006). Constructing a successful cross-national virtual learning environment in primary and secondary education. AACE Journal, 14(2), 103–128.

Liu, F., Ritzhaupt, A. D., Dawson, K., & Barron, A. E. (2017). Explaining technology integration in K-12 classrooms: A multilevel path analysis model. Educational Technology Research and Development, 65(4), 795–813.

Marangunić, N., & Granić, A. (2015). Technology acceptance model: A literature review from 1986 to 2013. Universal Access in the Information Society, 14(1), 81–95.

Merchant, Z., Goetz, E. T., Cifuentes, L., Keeney-Kennicutt, W., & Davis, T. J. (2014). Effectiveness of virtual reality-based instruction on students’ learning outcomes in K-12 and higher education: A meta-analysis. Computers & Education, 70, 29–40.

Meigs, R. (2010). The development and pilot of the technology integration matrix questionnaire (Unpublished doctoral dissertation). Baker University, USA. https://www.bakeru.edu/images/pdf/SOE/EdD_Theses/Meigs_Russell.pdf

Milman, N. B. (2018). Defining and conceptualizing mixed reality, augmented reality, and virtual reality. Distance Learning, 15(2), 55–58.

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017–1054.

Nebel, S., Schneider, S., & Rey, G. D. (2016). Mining learning and crafting scientific experiments: A literature review on the use of Minecraft in education and research. Journal of Educational Technology & Society, 19(2), 355–366.

Perlman, J. (2002). An introduction to performance assessment scoring rubrics. In C. Boston (Ed.), Understanding scoring rubrics: A guide for teachers (pp. 5–13). ERIC Clearinghouse on Assessment and Evaluation.

Shulman, L. S. (1986). Those who understand: Knowledge growth in teaching. Educational Researcher, 15(2), 4–14.

Stemler, S. (2004). A comparison of consensus, consistency, and measurement approaches to estimating interrater reliability. Practical Assessment, Research, and Evaluation, 9. https://scholarworks.umass.edu/pare/vol9/iss1/4

Veletsianos, G. (2010). A definition of emerging technologies for education. In G. Veletsianos (Ed.), Emerging technologies in distance education (pp. 3–22). AU Press Athabasca University. http://www.aupress.ca/books/120177/ebook/01_Veletsianos_2010-Emerging_Technologies_in_Distance_Education.pdf

Veletsianos, G. (2016). The defining characteristics of emerging technologies and emerging practices in online education. In G. Veletsianos (Ed.), Online learning: Emerging technologies and emerging practices (pp. 3–16). AU Press Athabasca University. http://www.aupress.ca/books/120258/ebook/01_Veletsianos_2016-Emergence_and_Innovation_in_Digital_Learning.pdf

Venkatesh, V., & Davis, F. D. (2000). A theoretical extension of the technology acceptance model: Four longitudinal field studies. Management Science, 46(2), 186–204.

Welsh, J., Harmes, J. C., & Winkelman, R. (2011). Florida’s Technology Integration Matrix. Principal Leadership, 12(2), 69–71.
