In this action research study, we explored how one elementary science teacher educator used transcript coding of simulated classroom discussions as a pedagogical approach to learn about her elementary preservice teachers’ (PSTs’) abilities to notice key aspects of scientific argumentation within the context of a small group discussion. PSTs enrolled in a science methods course in their elementary teacher education program engaged in transcript coding before and after facilitating their own argumentation discussions.

They facilitated these discussions in the computer technology-based Mursion® simulated classroom environment with five upper-elementary student avatars. The first transcript focused on an argumentation discussion by “Paul” (a pseudonym), a PST from a previous class, which all PSTs in the course coded prior to facilitating their own discussion in the simulated classroom. Each PST then coded their own transcripts after facilitating an argumentation discussion on the same topic with the same group of student avatars.

One purpose of the study was to examine how the PSTs’ coding of two discussion transcripts generated in the simulated classroom could be used to provide the teacher educator with insights regarding the nature of the PSTs’ noticing related to engaging students in scientific argumentation. Another purpose was to investigate PSTs’ perspectives on the utility of transcript coding as reported in reflection videos created by each PST.

In the following section, the study is situated within the literature on scientific argumentation and teacher noticing. Next, details are provided about the study’s context, including the simulated classroom environment we used, research questions, and methods. Findings are then shared from analyzing the PSTs’ transcript coding and the PSTs’ perceptions of the utility of transcript coding for supporting their own learning. The paper ends with a discussion about the affordances and challenges of using transcript coding as a pedagogical approach to learn about PSTs’ noticing abilities, as well as implications for key decisions that teacher educators across content areas should consider when engaging PSTs or in-service teachers in transcript coding.

Engaging Students in Scientific Argumentation

“Engaging students in argument from evidence” is one of the eight practices identified in the Next Generation Science Standards (NGSS Lead States, 2013) as integral for developing students’ scientific literacy and helping them understand how scientific knowledge is constructed. Productive argumentation in science involves engaging students in two related aspects: argument construction and argument critique.

Argument construction focuses on students’ engagement in generating, defending, and refining scientific claims using evidence and reasoning, while argument critique involves students in comparing and critiquing one another’s ideas as they build toward consensus (Mikeska & Howell, 2020). Research has shown that, with teacher support, K-12 students can learn how to compare contrasting explanations, offer rebuttals to discredit competing ideas, use varied evidence to support scientific arguments, and collaborate to construct and refine scientific arguments (Berland & Hammer, 2012; Bravo-Torija & Jimenez-Aleixandre, 2018; McNeill, 2011; Osborne et al., 2016).

Facilitating discussions is one instructional practice used to provide opportunities for students to engage in argument construction and argument critique. One goal of an argumentation discussion in science is to reach consensus, which likely entails students being convinced to alter their thinking through others’ arguments and rebuttals and the presentation of evidence and reasoning. Such discussions are facilitated by the teacher and are ideally student centered, encouraging direct student-to-student exchanges as students share and critique one another’s ideas.

Teachers, especially novices, typically have limited opportunities to learn how to facilitate argumentation discussions or to observe and identify key features of this teaching competency (Banilower et al., 2018; Berland, 2011; Goodson et al., 2019; Simon et al., 2006). These limited opportunities to observe, try out, or make sense of this instructional practice are especially pronounced in elementary classrooms, due to the limited time devoted to science instruction in the elementary grades.

Recent research has examined the use of different approaches to help teachers learn how to engage students in productive scientific argumentation. One of the more recent approaches was the development and use of a multiyear professional development program where practicing teachers completed a summer institute, practicum, and sessions during the school year to develop their knowledge and ability to engage their students in scientific argumentation (Osborne et al., 2019). During their professional learning activities, teachers learned about and tried out three teacher-related (i.e., ask, press, and link) and three student-related (i.e., explain/claim, coconstruct, critique) classroom practices they could use to foster students’ engagement in scientific argumentation. Findings from examining different versions of this practice-based professional development program indicated a positive impact on teachers’ instructional practice to support students’ engagement in scientific argumentation, although the practicum component did not yield an added benefit in this study.

Another recent study developed a set of multimedia modules that teachers used to develop their knowledge about scientific argumentation and learn instructional strategies to engage their students in productive scientific argumentation (Marco-Bujosa et al., 2017; McNeill et al., 2016, 2018). In particular, teachers had opportunities to examine written and video artifacts showing students engaged in high-quality scientific argumentation and highlighting the ways in which teachers supported such interactions. The teachers also had opportunities to apply what they learned to planning their own lessons to support students in engaging in scientific argumentation. Study findings suggested positive outcomes from teachers’ use of these multimedia modules.

At the core of these approaches is the use of targeted supports to help teachers learn how to attend to and interpret students’ ideas to inform their instructional decision-making; doing so is at the heart of being able to productively prompt and support scientific argumentation. Translating such decisions into action requires that teachers have access to and can leverage argumentation-focused teaching moves to support their students’ engagement in argument construction and critique.

Some work, for example, has advocated for the use of language frames to support students in knowing how to engage in key components of scientific argumentation, like making a claim, providing and asking for evidence, offering a counterclaim, inviting speculation, and reaching consensus (Ross et al., 2009). Other research has examined the specific teaching moves PSTs use to prompt and encourage students to engage in argument construction and critique, such as encouraging students to reference observations or data, draw upon their prior experiences, explain the reasoning for their agreement or disagreement with another’s idea, or consider the relevancy of specific evidence (Mikeska & Howell, 2020).

Pedagogical approaches are needed, however, that support teacher educators in better understanding and characterizing PSTs’ abilities to notice — and ideally make use of — these argumentation-focused teaching moves. Our study was designed to address this need by examining how one such approach, the use of transcript coding coupled with the Mursion simulated classroom environment, was used by one elementary science teacher educator to characterize her PSTs’ noticing abilities regarding the use of teaching moves to engage students in argument construction and argument critique. If PSTs can accurately notice and identify their own and others’ use of argumentation-focused teaching moves during science discussions, then they are more likely to be equipped to add these moves to their instructional repertoire and draw upon them flexibly when facilitating such discussions with their students.

Noticing in Teaching

As a pedagogical approach, transcript coding has the potential to provide a window into PSTs’ noticing capabilities related to core teaching practices like facilitating argumentation-focused discussions. Noticing entails teachers’ attending to important classroom interactions, connecting those interactions to principles of education, and interpreting those interactions to inform instructional decisions (van Es & Sherin, 2002; Figure 1).

Figure 1

Three Aspects of Noticing (van Es & Sherin, 2002)

Noticing is not a simple practice to master (Ball, 2011). Novice teachers, including PSTs, may not notice the most salient interactions (Abell et al., 1998; Jacobs et al., 2010; Star & Strickland, 2008; Talanquer et al., 2013). Compared to more experienced teachers, they are less likely to connect what they notice to broader principles and, therefore, have a more difficult time answering Shulman’s (1996) question, “What is this a case of?” (van Es & Sherin, 2002, p. 574).

The noticing literature in science education is about 10 years old (Chan et al., 2020). It has included investigations of teachers’ noticing about student thinking (e.g., Luna, 2018; Luna et al., 2018) and teachers’ responses to and interactions with students (Seidel et al., 2011; Sezen-Barrie & Kelly, 2017). A finding relevant to our work is that while teachers tend to notice some science or engineering practices (e.g., the ways students carry out investigations or design solutions), they may not as readily notice practices related to data analysis and engaging in argument from evidence (Dalvi & Wendell, 2017; Luna et al., 2018; Talanquer et al., 2013).

Promising evidence indicates that teachers can and do improve their noticing practice with experience and intervention. One effective strategy is through analysis of instructional videos (e.g., Barnhart & van Es, 2015; Benedict-Chambers & Aram, 2017; Rosaen et al., 2008). Analysis of video enables classroom interactions to be revisited, slowed down, and reflected upon (van Es & Sherin, 2002). Teachers may analyze the videos of unknown others, as in curated video cases (e.g., Abell & Cennamo, 2003), their own videos, or their peers’ videos. There are advantages to each, such as engagement with one’s own context, learning from those with similar experiences, and learning from experts (Gaudin & Chaliès, 2015; Seidel et al., 2011).

Video analysis is supported through various means, including through communities of practice and video clubs, in which teachers share and discuss their videos (González & Vargas, 2020; Hawkins & Park Rogers, 2016; Luna & Sherin, 2017; van Es & Sherin, 2008). Discussions about videos may be scaffolded through the use of reflective frameworks (e.g., Gelfuso, 2016; van Es et al., 2014). Teachers can be supported during video analysis to narrow and focus their scope of noticing by using a framework to structure observations (Star & Strickland, 2008), annotating their videos (McFadden et al., 2014), or applying a set of codes to video or transcript segments (Kucan, 2007, 2009; Mitchell & Marin, 2015; Tripp & Rich, 2012).

The point of learning to identify salient interactions and connect them to key educational principles is to inform and impact future instructional decisions (van Es & Sherin, 2002). For example, Benedict-Chambers and Aram (2017) examined how PSTs’ noticing within teaching rehearsals in methods classes led to revisions to their lesson plans to be used in elementary classrooms. The video clubs described above enabled teachers to engage in repeated cycles of identifying salient interactions, connecting those to broader principles, and then applying these ideas to instruction (which, in turn, may be analyzed and used to inform future practice).

González and Vargas (2020), for example, asserted that their study showed “a case in which teachers’ analysis of videos from their own classrooms, anchored in the lessons that they planned and implemented, promoted change in instructional practices” (p. 26). In our study, we use transcript coding to examine what aspects of their own and others’ instructional practice PSTs noticed in the context of facilitating argumentation-focused science discussions.

Study Context

Incorporating Argumentation Discussions Using Simulated Classrooms

In spring 2020, I (first author Lottero-Perdue) participated in a larger study led by the second author (Mikeska) that examined how PSTs learned to facilitate argumentation discussions in science and how teacher educators like me support PSTs in doing so. My primary role was to support PSTs’ learning by developing and implementing preparation and debrief/reflection activities to support each PST’s facilitation of three 20-minute argumentation discussions throughout the semester.

A unique aspect of these discussions is that they occurred in a simulated classroom with five upper-elementary student avatars: Mina, Will, Jayla, Emily, and Carlos (Mikeska & Howell, 2020; Figure 2). Each PST, either on campus in a room with a monitor and computer station or at home using their own computer (during online learning due to COVID-19), engaged in these discussions with the avatars in real time. The avatars are enacted by a human-in-the-loop called a simulation specialist, who hears, sees, and responds to the PSTs as the five student avatars during the discussion. This simulation specialist is highly trained not only in how to voice and puppet the avatars but also in what the students’ scientific ideas are at the beginning of the discussion and how those ideas may change in response to teacher moves and convincing peer contributions. Simulation specialists are also trained to keep the avatars’ responses consistent from one discussion to the next.

One benefit of using simulated classrooms as approximations of practice (Dieker et al., 2014) is that they reduce the complexity of the instructional environment. In the Mursion classroom, the students can be well behaved and free of off-task behavior, reducing the need for classroom management. Instead, the focus is on the discourse between the students and teacher and among the students as PSTs learn to navigate the challenge of facilitating an argumentation discussion. The idea of using technology-based approximations of practice that reduce complexity is not exclusive to Mursion. For example, online or smartphone-based chats have been used to allow teachers to practice questioning techniques (Chao et al., 2016; Wang et al., 2021).

Another benefit of the Mursion simulated environment is that it involves highly trained simulation specialists who enact the avatars as students, making the student responses consistent and representative of what students might say. A more traditional approximation of practice in teacher education involves some PSTs taking on the roles of students while other PSTs teach. A recent study has suggested that PSTs develop eliciting strategies similarly in simulated and peer-based approximations of practice (Lee et al., 2021).

Additionally, the simulated classroom environment creates a situation in which the discussion context can be held constant across PSTs. This context enabled me as their teacher educator to examine how different PSTs noticed and used different questions and prompts to encourage argument construction and critique with the same students (avatars), in the same amount of discussion time (20 minutes maximum), and with respect to the same discussion topic.

The Changing Matter Discussion

All three discussions were on the topic of matter (Mikeska et al., 2021a, 2021b, 2021c, 2021d). The third topic, Changing Matter, is the focus of this paper. In Changing Matter, each PST was to facilitate a discussion to help the student avatars “construct an argument about whether a new substance is formed from the mixing of two different substances” and to “build consensus about what evidence indicates that a new substance has been formed” (Mikeska et al., 2021d, p. 28).

Prior to the discussion, the student avatars made observations about what happens when the following are mixed: (a) baking soda and pepper, (b) white vinegar and baking soda, and (c) white vinegar and milk. They also made a claim about whether a new substance was formed from each combination and explained how they made their decision. Students came to the discussion with varying ideas.

In preparation for the discussion, each PST received a written document with background information and student work samples. Also, to prepare to facilitate the discussion, each PST watched a video of Paul, a PST from one of my prior methods courses, facilitating the Changing Matter discussion in the simulated classroom. A full copy of the Changing Matter science task (Mikeska et al., 2021a), including both the teacher-facing document and the simulation specialist training materials, as well as example discussion videos and transcripts, can be accessed through the online Qualitative Data Repository (https://data.qdr.syr.edu/dataverse/go-discuss) after creating a free account.

Figure 2

Elementary Student Avatars by Mursion

Transcript Analysis and Coding During the Course

While transcript coding is commonplace for researchers, it is not as familiar to the PSTs I have taught. I, therefore, provided scaffolding to introduce PSTs to the idea of what can be gleaned about teaching and learning through analyzing and coding transcripts of teaching interactions with accompanying video.

I began by introducing transcript analysis — that is, looking closely at teacher prompts and subsequent questions and student responses — as a whole class without formal coding for the first of the three discussions. Then, the PSTs coded transcripts before and after the second discussion and before and after the third discussion, the discussion of focus for this study. For each discussion, instruction focused on different aspects of argumentation discussions: attending to student ideas; facilitating a coherent and connected discussion; encouraging student-to-student interactions; developing students’ conceptual understanding; and engaging students in argumentation, specifically argument construction, critique, and consensus building (Mikeska et al., 2019). The third discussion (Changing Matter) was the first time I had asked the PSTs to code for argument construction and critique.

For this third discussion, transcript coding involved searching for evidence of teacher questions or prompts that encouraged students to share their constructed arguments (claims and evidence-based reasoning) and engage in argument critique. The first search for this evidence was within the transcript of Paul’s Changing Matter discussion as the PSTs prepared to facilitate the discussion. The second was when they coded their own transcripts after facilitating the Changing Matter discussion in the simulated classroom.

For coding Paul’s and their own transcripts, I assigned a particular color of highlighting for PSTs to use to mark these teacher questions and prompts — gray highlighting for argument construction and green for argument critique. I also shared that PSTs could use both colors in the same sentence if the teacher was prompting argument construction and critique simultaneously. I also asked the PSTs to code for examples of building consensus (pink highlighting); however, for brevity, that coding is not reported in this paper.

For both Paul’s transcript and their own transcripts, I told the PSTs that I would be grading their transcript coding for accuracy. To grade their coding of Paul’s transcript, I compared each PST’s coded transcript with my own coded transcript of Paul’s discussion, which identified what I considered to be the most obvious instances of questions and prompts to encourage argument construction and critique. For argument construction and for argument critique separately, I indicated whether each PST captured all or most instances, some instances, or no instances and scored accordingly. I also offered brief feedback to the PSTs, especially if their coding was incomplete. After I graded PSTs’ coding of Paul’s transcript, I provided the PSTs with a copy of my coding of Paul’s transcript for their reference prior to their facilitation of the Changing Matter discussion.

“Incomplete coding” represents cases in which a PST did not code or missed some of the instances of a construct. For example, if a PST did not code Paul’s question (“So would anybody like to share their claim?”) as an instance of encouraging argument construction, their coding of argument construction for Paul’s transcript would be incomplete. My feedback to one PST about argument construction noted that, while the PST identified some instances of argument construction, “there were many other places where Paul asked about claims/evidence” that were not coded.

In grading my PSTs’ coding of Paul’s transcript, I noticed that one PST accurately coded some of Paul’s questions as argument construction but also coded other questions as argument construction that did not fit the construct. I referred to this as coding “nonexamples,” or coding beyond the meaning of the construct. For example, this PST coded the following question as an example of encouraging argument construction: “Can anybody tell me what the three states of matter are?” Although Paul probed for related ideas about the states of matter, this question was not about a claim or evidence in support of a claim.

Grading PSTs’ coding of their own transcripts was more challenging. I examined the questions and prompts highlighted in gray (argument construction) and green (argument critique), and I also read through the text that had not been coded for either construct. I used this information to provide a score and feedback about the PSTs’ coding of argument construction and critique. Given time constraints, I did the best I could to identify accurate coding, incomplete coding, or coding of nonexamples of argument construction and critique. I suspected that a deeper and more time-intensive analysis of the PSTs’ coding of their own and Paul’s transcripts would reveal more about how they collectively noticed and understood these constructs, particularly with respect to the first two aspects of van Es and Sherin’s (2002) model of noticing: identifying salient classroom interactions and connecting classroom interactions with teaching principles.

Research Questions

Two research questions (RQs) guide this study:

- What did PSTs code as instances of teacher prompts/questions to encourage argument construction or critique within transcripts of another PST’s discussion and of their own argumentation discussions in a simulated classroom?

- What were PSTs’ perceptions about what they learned from coding others’ and their own argumentation discussion transcripts in a simulated classroom?

Additionally, this study explored what the answers to these questions suggested about improving PST noticing of argument construction and critique.

Methods

This study may be best characterized as an action research study (Kitchen & Stevens, 2008; Saldaña & Omasta, 2018). I sought to better understand how the PSTs in my course coded teacher prompts/questions to encourage argument construction and critique to improve my subsequent instruction in future methods courses. This action research was bolstered by the participation of coauthors who contributed to a collaborative, thorough, iterative qualitative analysis of the data.

Participants

All 19 PSTs in my junior-level elementary science methods course in the spring 2020 semester facilitated the discussions and participated in the study. The course began the semester in a face-to-face format and included a weekly school-based field placement in addition to methods activities and facilitating discussions in the Mursion simulated classroom. However, due to the COVID-19 pandemic, the course moved exclusively online after the sixth week, and the field placement ended. The simulated discussions were able to continue, with PSTs facilitating discussions via Zoom from their homes.

All participants were elementary education majors. Most identified as female (18 of 19; 95%) and one identified as male. PSTs identified as White (68%), Black (16%), Asian (11%), or Hispanic (5%). Throughout the paper PSTs’ names are replaced with pseudonyms.

Data Collection

To examine noticing within transcripts (addressing RQ1), we (all three authors) gathered two transcripts that each PST coded as required class assignments. Both transcripts were related to the Changing Matter discussion. The first was a transcript of the Changing Matter discussion that was conducted by a PST, Paul, who had been in one of my prior classes. We collected one coded transcript from each of the 19 PSTs. The second was each PST’s own transcript from the Changing Matter discussion. We again collected 19 of these transcripts, but one was significantly incomplete; thus, we were able to analyze 18 of the PSTs’ own transcripts.

To examine PST perspectives on transcript coding (addressing RQ2), we analyzed a course assignment in which PSTs were asked to create a 3-minute video response to four questions about transcript coding and upload it to Flipgrid (www.flipgrid.com) to share with me and, only after all responses were submitted, with their peers.

The questions asked (a) what they learned from coding their own transcripts and the value of these experiences as an emerging teacher, (b) for an example of either a strength or area of improvement they learned from coding their own transcript, (c) what they learned from and the value of coding others’ transcripts, and (d) for an example of what they learned about teaching strategies and approaches from coding someone else’s transcript. These questions were intended to address their transcript coding experiences throughout the semester, all of which occurred with respect to discussions within the simulated classroom and included their coding of Paul’s and their own Changing Matter discussion. All 19 PSTs completed this assignment.

Data Analysis for RQ1: PST Transcript Coding

Identifying Researcher-Coded Instances

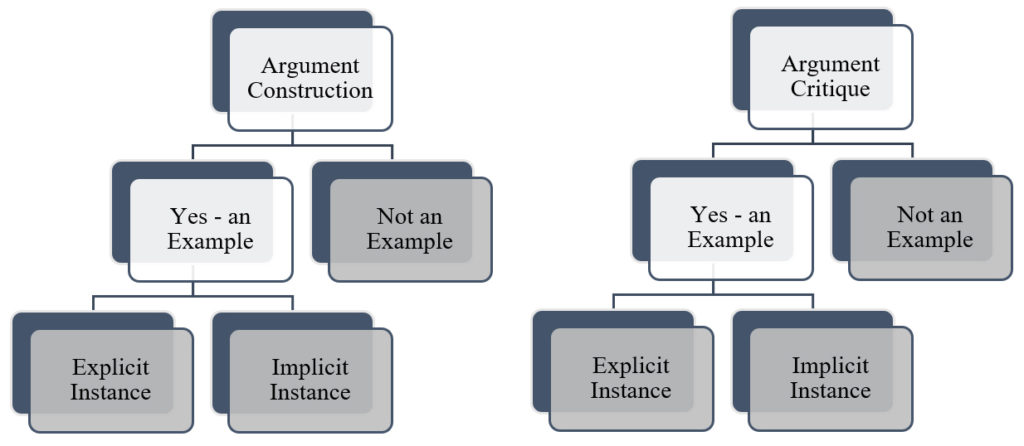

The first and second authors began by analyzing Paul’s transcript. We coded every teacher turn, or utterance (what we referred to as an “instance”), in the discussion as (a) encouraging or not encouraging argument construction and (b) encouraging or not encouraging argument critique. We also created two subcodes for encouraging argument construction or critique: “explicit” and “implicit.” Both types accurately reflected the teacher’s attempts to engage the students in argument construction or critique.

Explicit instances did so in a more obvious way. An example of an explicit instance for argument construction is Paul’s question: “Who would like to share their claim first about this?” Implicit instances encouraged construction or critique in a nuanced way or involved the teacher in restating a student contribution. An example of an implicit instance for argument construction in Paul’s transcript was, “Did the smell stay the same or … change?” This followed a student’s affirmative response to Paul’s previous question about evidence that a new substance had been created when vinegar and baking soda were mixed.

Figure 3 depicts the six codes — shaded in dark gray — that we used to code Paul’s transcript. Using this coding scheme, we individually coded Paul’s transcript in a digital spreadsheet for instances of the PST engaging students in argument construction and argument critique, discussed differences in our coding, and determined a final consensus “researcher-coded” version of the transcript. Out of 47 total teacher utterances, or contributions, that were preceded or followed by student contributions in Paul’s transcript, we identified 11 explicit instances of argument construction and three explicit instances of argument critique. We also identified 14 implicit instances of argument construction and six implicit instances of argument critique.
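The six-code scheme can be pictured as two three-way choices per teacher utterance. The minimal Python sketch below assumes one record per utterance with a code for each construct; the names `Code`, `PAUL_EXCERPT`, and `tally` are illustrative only, since the coding itself was done in a spreadsheet. The four records are the teacher utterances from the Table 1 excerpt of Paul’s transcript, so the counts reflect only that excerpt, not the full transcript.

```python
from collections import Counter
from enum import Enum


class Code(Enum):
    """One of three codes applied to a teacher utterance for a single construct."""
    EXPLICIT = "explicit"
    IMPLICIT = "implicit"
    NO = "no"


# Teacher utterances from the Table 1 excerpt of Paul's transcript.
# Each utterance gets one code for construction and one for critique,
# so the scheme has 3 x 2 = 6 codes in total.
PAUL_EXCERPT = [
    {"construction": Code.EXPLICIT, "critique": Code.NO},        # "Who would like to share their claim..."
    {"construction": Code.NO, "critique": Code.NO},              # "All right Will."
    {"construction": Code.IMPLICIT, "critique": Code.EXPLICIT},  # "By a show of hands..."
    {"construction": Code.EXPLICIT, "critique": Code.IMPLICIT},  # "Explain to Will, Emily and Carlos..."
]


def tally(utterances, construct):
    """Count how many utterances received each code for one construct."""
    return Counter(u[construct] for u in utterances)


print(tally(PAUL_EXCERPT, "construction"))
print(tally(PAUL_EXCERPT, "critique"))
```

Keeping the two constructs as separate fields mirrors the two coding columns in Table 1 and lets a single utterance be explicit for one construct and implicit for the other.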

Figure 3

Researcher Coding Scheme With Six Shaded Codes

An example of our coding is shown in Table 1. This excerpt from Paul’s transcript shows how we coded only teacher utterances, a limitation discussed later. We considered the context of what students shared before and after each teacher utterance while coding.

Table 1

Researcher-Coded Excerpt From Paul’s Transcript

| Speaker | Utterance | Argument Construction | Argument Critique |
|---|---|---|---|
| Paul (Teacher) | So let's work backwards now to investigation number two. Who would like to share their claim first about this? | Yes - Explicit | No |
| Will | I will. | - | - |
| Paul (Teacher) | All right Will. | No | No |
| Will | I think that the second one, the baking soda and vinegar did make something new. | - | - |
| Jayla | Well, I disagree. | - | - |
| Will | Oh, why? | - | - |
| Jayla | Well, because there wasn't anything new made because we were left with what we started with. | - | - |
| Will | What do you mean? | - | - |
| Jayla | Well, we had the white powder and the liquid leftover in the cup after the bubbles went away. | - | - |
| Will | I still think it's something new. | - | - |
| Paul (Teacher) | By a show of hands, who agrees with Carlos and Will on this statement, that there was something new created? Okay, and then Mina and Jayla, you disagree with that? | Yes - Implicit (asking to state a claim by raising hand) | Yes - Explicit |
| Jayla | Yeah. | - | - |
| Paul (Teacher) | All right. Explain to Will, Emily and Carlos, what you believe. | Yes - Explicit | Yes - Implicit (subtle reference to Mina/Jayla’s disagreement) |

Note. Paul is the teacher in this transcript while Jayla and Will are two of the students in the simulated classroom.

We used a similar process to generate a researcher-coded transcript identifying explicit and implicit instances when the PSTs prompted students to engage in argument construction and critique within their own transcripts. To do so, we independently coded five randomly selected PSTs’ transcripts. Our intercoder agreement (including agreement about explicit instances, implicit instances, and nonexamples) across all 210 teacher turns within the five PST discussions was 76% for argument construction and 91% for argument critique.
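As a sketch only: the section does not name the agreement formula, so the Python below assumes simple percent agreement, that is, the share of teacher turns to which both coders assigned the same code (explicit, implicit, or not encouraging), computed separately for each construct. The function name and the coder lists are hypothetical. A chance-corrected index such as Cohen’s kappa would be a different calculation.

```python
def percent_agreement(coder_a, coder_b):
    """Percentage of teacher turns to which two coders assigned the same code.

    Each argument is a list with one code per teacher turn, in transcript order,
    for a single construct (argument construction or argument critique).
    """
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must code the same teacher turns")
    if not coder_a:
        raise ValueError("no teacher turns to compare")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100 * matches / len(coder_a)


# Hypothetical codes for five teacher turns (illustrative, not study data).
first_coder = ["explicit", "no", "implicit", "no", "explicit"]
second_coder = ["explicit", "no", "explicit", "no", "explicit"]
print(percent_agreement(first_coder, second_coder))  # 80.0
```

Under this assumption, a disagreement between "explicit" and "implicit" counts against agreement just as a disagreement between "explicit" and "no" does.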

We resolved differences in coding for this subset of transcripts and refined our coding rules. I then used the refined set of coding rules to code the remaining PST transcripts. I returned to the PST transcripts we initially coded and to Paul’s transcript to ensure consistency in our coding, recoding as necessary. One PST did not code the teacher turns within the transcript and, thus, their transcript was not included in this analysis. The result was 19 unique researcher-coded transcripts: one for Paul’s transcript and 18 for the PSTs’ own transcripts. Table 2 shows the numbers of researcher-identified explicit instances of argument construction and argument critique for both PSTs’ own and Paul’s transcripts.

Table 2

Summary of Explicit Instances for Argument Construction and Critique Within Transcripts

| Argument Type | Paul’s Transcript (n = 19 PSTs): Number of Explicit Instances | Paul’s Transcript: Total Explicit Instances Across PSTs | PSTs’ Own Transcripts (n = 18 PSTs): Explicit Instances per Transcript, M (SD) [c] | PSTs’ Own Transcripts: Total Explicit Instances Across PSTs |
|---|---|---|---|---|
| Argument Construction | 11 | 209 [a] | 11.6 (4.8) | 209 [d] |
| Argument Critique | 3 | 57 [b] | 3.4 (2.6) | 61 |

Note. [a] This number comes from multiplying 11 explicit instances by 19 PSTs who coded Paul’s transcript. [b] This number comes from multiplying three explicit instances by 19 PSTs who coded Paul’s transcript. [c] M and SD are reported here because the number of explicit instances of argument construction and critique differed across PSTs’ own transcripts. [d] The total number of instances here is coincidentally the same number as the total explicit instances for Paul’s transcript.

Comparing PST Coding to Researcher-Coded Explicit Instances

Next, we compared the researcher-coded transcripts to the PSTs’ coding by identifying whether each PST coded each explicit instance and whether they coded any nonexamples. We calculated the percentages of explicit instances that were and were not coded, as well as the percentages of PSTs who coded or missed explicit instances or coded nonexamples.
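The comparison step can be pictured as set operations over teacher turns. The Python sketch below rests on several assumptions made only for illustration: turns carry hypothetical integer IDs, the researcher assigns each turn one of three codes, and a PST’s coding for a construct is the set of turns they marked. Implicit instances are treated as neither required nor errors, which is an assumption of this sketch rather than a documented rule; the turn data are invented.

```python
"""Compare one PST's coding with the researcher-coded transcript (illustrative sketch).

Assumptions: teacher turns have hypothetical integer IDs; the researcher codes
each turn as "explicit", "implicit", or "nonexample"; a PST's coding is the set
of turn IDs they marked for one construct. Implicit turns are ignored.
"""
from dataclasses import dataclass


@dataclass
class Comparison:
    coded_explicit: set[int]     # explicit instances the PST coded
    missed_explicit: set[int]    # explicit instances the PST did not code
    coded_nonexamples: set[int]  # nonexamples the PST coded anyway


def compare(researcher: dict[int, str], pst_coded: set[int]) -> Comparison:
    explicit = {t for t, code in researcher.items() if code == "explicit"}
    nonexamples = {t for t, code in researcher.items() if code == "nonexample"}
    return Comparison(
        coded_explicit=explicit & pst_coded,
        missed_explicit=explicit - pst_coded,
        coded_nonexamples=nonexamples & pst_coded,
    )


# Hypothetical researcher codes for six teacher turns (not study data).
researcher = {1: "explicit", 2: "implicit", 3: "nonexample",
              4: "explicit", 5: "explicit", 6: "nonexample"}
result = compare(researcher, pst_coded={1, 2, 3, 5})

n_explicit = len(result.coded_explicit) + len(result.missed_explicit)
print(sorted(result.coded_explicit))     # [1, 5]
print(sorted(result.missed_explicit))    # [4]
print(sorted(result.coded_nonexamples))  # [3]
print(f"{100 * len(result.coded_explicit) / n_explicit:.0f}% of explicit instances coded")  # 67%
```

Summing the coded and missed sets across PSTs before dividing gives a pooled percentage like those in Table 3, whereas averaging each PST’s percentage gives per-PST means like those reported in the text; the two need not match when transcripts contain different numbers of explicit instances.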

In the tables in the findings section, we aggregated the data so that PSTs’ coding of Paul’s transcript and of their own transcripts could be presented in the same way. This approach simplifies the presentation of data: Paul’s transcript had a fixed number of researcher-coded instances of argument construction and critique, whereas that number differed for each PST’s own transcript.

In the findings section, we not only reference data in these tables but also describe how coding varied across participants (e.g., the range of the percentages of explicit instances of argument construction that the 19 PSTs coded, as well as the mean and standard deviation of those percentages). The percentages reported in this article describe our participants’ responses and are not meant to indicate generalizability or statistical significance.

Finally, we analyzed the explicit instances that one or more PSTs missed to identify patterns suggesting which kinds of explicit instances PSTs tended to miss in their coding. We also examined any patterns in the nonexamples that PSTs tended to associate with argument construction or critique.

Data Analysis for RQ2: PST Perceptions About Transcript Coding

To analyze the Flipgrid video responses, we transcribed them and created a priori structural codes for each of the four questions. For each of these codes, the first and third authors independently generated emergent subcodes to describe the data, assigning one or more subcodes to each PST’s response; compared the subcodes; and reached consensus on a final list of subcodes to assign to each response. The second author reviewed the codes, subcodes, and assignments, and we made slight changes to improve the coding based on this review.

Ultimately, we identified 23 codes organized into five themes to describe PSTs’ perspectives on coding their own transcripts and 27 codes organized into five themes regarding coding others’ transcripts. We combined similar codes in our presentation of findings in this paper, presenting 20 codes relevant to PSTs coding others’ or their own transcripts.

Findings

This section is organized into three main parts. First, we address the accuracy of the PSTs’ coding of explicit instances of argument construction and critique. PSTs coded most instances accurately. Missed instances of argument construction were somewhat more likely to be those that encouraged students to share their reasoning than those that encouraged students to share their claims or evidence. We did not identify evidence that PSTs missed coding particular kinds of questions or prompts to encourage argument critique.

Second, PSTs sometimes coded nonexamples for both argument construction and critique. This is an area in which I could improve my instructional practice in the future to help PSTs deepen their understanding of how to encourage argument construction and critique in an argumentation discussion.

Third, we address PSTs’ perspectives on the value of coding their own and others’ transcripts. These findings move beyond whether PSTs linked constructs with transcript excerpts accurately and precisely, and they provide some evidence that the PSTs valued transcript coding as a way to reflect on and prepare for future discussions.

Accurate and Missed Codes

Table 3 summarizes coding accuracy across PSTs for both argument construction and argument critique within both Paul’s transcript and the PSTs’ own transcripts. (See Table 2 for how the total numbers of explicit instances were determined for Paul’s transcript and the PSTs’ own transcripts.) In summary, PSTs collectively coded between 61% and 84% of the explicit instances of argument construction and critique. In the sections that follow, we report whether there were patterns in the types of questions and prompts that the PSTs missed.

Table 3

Accurately Coded and Missed Explicit Instances Across PSTs

| Argument Type | Paul’s Transcript [a]: Total Explicit Instances | Paul’s Transcript: Accurately Coded Instances | Paul’s Transcript: Missed Instances | Own Transcript [b]: Total Explicit Instances | Own Transcript: Accurately Coded Instances | Own Transcript: Missed Instances |
|---|---|---|---|---|---|---|
| Argument Construction | 209 [c] | 61% | 39% | 209 [c] | 73% | 27% |
| Argument Critique | 57 | 75% | 25% | 61 | 84% | 16% |

[a] 19 PSTs coded Paul’s transcript. [b] 18 PSTs coded their own transcript. [c] The total number of instances here is coincidentally the same for both transcript types.

Accurate and Missed Codes for Argument Construction

Each PST coded between 0% and 100% (M = 61%; SD = 31%) of the 11 explicit instances within Paul’s transcript. Only one participant coded all 11 explicit instances of Paul prompting students to engage in argument construction. Each instance was coded by 47% to 74% of participants. As shown in Table 3, PSTs collectively missed 39% of explicit instances of argument construction in Paul’s transcript.

Across the 18 PSTs who coded their own transcripts, 209 researcher-coded explicit instances of argument construction could have been coded. (Coincidentally, this is the same as the total number of explicit instances for Paul’s transcript: 11 instances multiplied by the 19 PSTs who coded it.)

This number shows that the PSTs used questions and prompts to encourage argument construction in their discussions. Each PST coded between 17% and 100% (M = 73%; SD = 17%) of the five to 22 explicit instances of argument construction (M = 11.6; SD = 4.8) within their transcript. Altogether, PSTs missed 27% of explicit instances of argument construction in their own transcripts.

To search for patterns in the kinds of questions or prompts PSTs tended to miss in their coding, we combined the explicit instances of argument construction that PSTs collectively missed in Paul’s transcript (82 instances) and in their own transcripts (56 instances), for a total of 138 instances. We categorized these missed instances as prompts or questions that attempted to elicit (a) claims, (b) evidence, or (c) reasoning or justification. The data reported in Table 4 suggest that PSTs may be more likely to miss explicit instances that aim to elicit reasoning or justification from students; however, we did not test this difference for statistical significance.

Table 4

Missed Explicit Instances of Argument Construction in Paul’s and PSTs’ Own Transcripts

| Missed Code Category | Percentage of Missed Instances (n = 138) [a] | Examples From Paul’s and PSTs’ Transcripts |
|---|---|---|
| Claim | 30% | Who wants to share their claim first? [About vinegar and milk experiment] [Paul] Back to Jayla and Mina, can you guys tell me what you thought for number two? Did you guys think that it was a new substance or the same? [Savannah] |
| Evidence | 30% | Jayla you claimed that just because the bubbles went away, that it didn't create something new, correct? … But there was another evidence that it actually changed, that something. [Paul] So what I’m getting is that you mixed together two liquids and that something else was formed. Can someone describe what else was formed? [Aliyah] |
| Reasoning | 48% | All right. Will and Carlos, do you guys mind explaining your point to each other? [Paul] Emily, I would like it for you to explain your answers as to why you thought that there was nothing new in this investigation. [Haley] |

[a] Some instances were coded as both evidence and reasoning; thus, percentages do not add to 100%.

Accurate and Missed Codes for Argument Critique

We found only three explicit instances of argument critique within Paul’s transcript, which yields 57 total explicit instances of argument critique that the 19 PSTs could have coded. Of these 57 instances, the PSTs collectively coded 75% and missed 25%. Each PST coded between 0% and 100% (M = 75%; SD = 35%) of the three explicit instances within Paul’s transcript. Further, over half of participants (58%) identified all three explicit instances of Paul prompting students to engage in argument critique. Each instance was coded by 74% to 79% of participants (Table 5).

Table 5

PST Coding of Explicit Instances of Argument Critique in Paul’s Transcript

| Researcher-Coded Explicit Instance | Coded (% of PSTs, n = 19) | Not Coded (% of PSTs, n = 19) |
|---|---|---|
| Does anybody agree with what Emily said? Jayla, Mina, would you like to add on to what Emily was saying that she doesn't... Why don't you guys believe that something new was created? | 79% | 21% |
| By a show of hands, who agrees with Carlos and Will on this statement, that there was something new created? Okay, and then Mina and Jayla, you disagree with that? | 74% | 26% |
| So there was a more chemical change in that …? Does anybody still have any disagreement about that, Jayla, Mina? | 74% | 26% |

Note. All three explicit instances in Paul’s transcript are included in this table.

Each of these instances encouraged students to state their agreement or disagreement with another student or group. In two of the three instances, Paul also asked why students agreed or disagreed. Given the low number of explicit instances of argument critique in Paul’s transcript, their similar nature, and the similar percentages of PSTs who coded each instance, we did not identify any patterns that would suggest why some PSTs did not code some of the instances.

Across the 18 PSTs who coded their own transcripts, we identified 61 explicit instances of argument critique within 16 of the PSTs’ transcripts. Two transcripts did not include prompts or questions to encourage argument critique. Of the 61 explicit instances, the 16 PSTs coded 84%. Each of the 16 PSTs who encouraged argument critique in their discussions coded between 0% and 100% (M = 81%; SD = 26%) of the one to nine explicit instances (M = 3.2; SD = 2.2) within their transcripts.

The PSTs did not code 10 explicit instances. We observed two types of missed instances: (a) those that asked if students agreed or disagreed with other students (similar to the explicit instances in Paul’s transcript) and (b) those that asked students to respond to another student’s ideas. As shown in Table 6, most of these missed instances were the first type. In summary, our analysis did not reveal particular types of prompts/questions that PSTs struggled to associate with argument critique.

Table 6

Missed Explicit Instances of Argument Critique in PSTs’ Own Transcripts

| Missed Code Category | Percentage of Missed Instances (n = 10) | Example |
|---|---|---|
| Agree or Disagree Language | 80% | So does anyone else agree or disagree with Jayla's claim? [Abigail] |
| Respond to Another Student’s Ideas | 20% | So Carlos is saying it looks different. Mina, what do you think about that? Do you think if something looks different, it's a new substance or not? [Emma] |

Coding Nonexamples

To examine how PSTs coded nonexamples of argument construction or critique, we first tallied the number of teacher utterances that we coded as nonexamples (that is, neither explicit nor implicit examples) of argument construction or critique. Paul’s transcript had 475 nonexample teacher utterances for argument construction (25 utterances in his transcript multiplied by 19 PSTs) and 722 for argument critique (38 utterances multiplied by 19 PSTs). For the PSTs’ own transcripts, coded by 18 PSTs, there were 472 nonexamples for argument construction and 772 for argument critique. Table 7 summarizes the coding of nonexamples by PSTs and references these numbers. Note that, for both argument construction and argument critique, more PSTs coded nonexamples, and a higher percentage of nonexamples was coded, when PSTs coded their own transcripts than when they coded Paul’s transcript. One reason for this result is that more types of teacher utterances were identified for analysis in the PSTs’ own transcripts, which created more opportunity for error in coding.
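The totals for Paul’s transcript follow from multiplying the number of nonexample utterances by the number of PSTs who coded the transcript, since each PST could have coded each nonexample. The Python sketch below reproduces that arithmetic using only the counts reported above and in Table 7; the function and constant names are hypothetical and purely illustrative.

```python
"""Nonexample totals and rates for Paul's transcript (illustrative sketch).

The counts below come from the text and Table 7; the function and constant
names are hypothetical and only illustrate the arithmetic.
"""

N_CODERS = 19  # PSTs who coded Paul's transcript

# construct -> (nonexample utterances in Paul's transcript, nonexamples coded by PSTs)
PAUL_COUNTS = {
    "argument construction": (25, 6),
    "argument critique": (38, 43),
}


def nonexample_rates(counts: dict[str, tuple[int, int]],
                     n_coders: int) -> dict[str, tuple[int, int, float]]:
    """Return (coded, possible, percent) per construct, pooled across coders."""
    rates = {}
    for construct, (utterances, coded) in counts.items():
        possible = utterances * n_coders  # every PST could have coded every nonexample
        rates[construct] = (coded, possible, 100 * coded / possible)
    return rates


for construct, (coded, possible, pct) in nonexample_rates(PAUL_COUNTS, N_CODERS).items():
    print(f"{construct}: {coded} of {possible} ({pct:.0f}%)")
# argument construction: 6 of 475 (1%)
# argument critique: 43 of 722 (6%)
```

For the PSTs’ own transcripts, no multiplication applies: each PST coded a different transcript, so the possible totals (472 and 772) are sums of per-transcript counts.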

Table 7

Summary of Nonexamples Coded by PSTs

| Argument Type | Paul’s Transcript: PSTs Who Coded Nonexamples (n = 19) | Paul’s Transcript: Nonexamples Coded Across PSTs (coded of total possible) | Own Transcript: PSTs Who Coded Nonexamples (n = 18) | Own Transcript: Nonexamples Coded Across PSTs (coded of total possible) |
|---|---|---|---|---|
| Argument Construction | 4 | 1% (6 of 475) | 13 | 10% (47 of 472) |
| Argument Critique | 16 | 6% (43 of 722) | 18 | 11% (83 of 772) |

Coding Nonexamples of Argument Construction

As shown in Table 7, only 1% of the nonexample teacher utterances in Paul’s transcript and 10% of those in the PSTs’ transcripts were incorrectly coded as argument construction by PSTs. While only four of 19 PSTs (21%) coded nonexamples for argument construction in Paul’s transcript, 13 of 18 PSTs (72%) did so when coding their own transcript. We analyzed the nonexamples in Paul’s transcript and the PSTs’ transcripts for common themes, grouping the nonexamples by the aim of the prompt (e.g., asking or checking for consensus), and identified three categories (Table 8). In this analysis, we combined the six nonexamples in Paul’s transcript with the 47 nonexamples in the PSTs’ transcripts, for a total of 53 nonexamples coded by PSTs.

Table 8

Nonexamples of Argument Construction Coded by PSTs in Paul’s and Their Own Transcripts

| Category | Percentage of Nonexamples (n = 53) | Nonexamples of Argument Construction |
|---|---|---|
| Asking or checking for consensus | 49% | Okay, so for the second investigation of white vinegar and baking soda, what have we decided? Have we decided that it's a new substance? What consensus are we making? [Haley] |
| Inquiring about observations (apart from being in support of a claim) | 28% | Let's talk about what we saw for the baking soda and pepper. So what did you notice? What did you observe? [Emily] |
| Asking questions about related science content (e.g., states of matter) | 28% | Can we see a gas? [Paul] |
| Other [a] | 4% | Okay. Will do you have anything to add onto that? [After Emily shares something that Will had not yet considered.] [Patrick] |

Note. Some nonexamples were coded in more than one category. [a] This category includes instances that were not similar to other instances and thus were not categorized.

The most frequently coded nonexample category comprised instances in which the PST was checking for consensus. We also asked PSTs to code teacher questions and prompts to build consensus, but these data are not reported in this article; most of these instances were also coded as consensus building. Other categories of nonexamples coded as argument construction, each relevant to over one quarter of instances, entailed (a) asking students to share what they noticed in an investigation without those observations being used as evidence for a claim and (b) asking questions about scientific concepts or definitions (most often about the states of matter).

Coding Nonexamples of Argument Critique

As shown in Table 7, 6% of the teacher utterances in Paul’s transcript and 11% of the teacher utterances in PSTs’ transcripts were incorrectly coded as argument critique by PSTs. In both cases, a relatively high percentage of PSTs coded nonexamples with respect to argument critique, that is, 16 of 19 (84%) for Paul’s transcript and 18 of 18 (100%) for their own transcript.

As we did for argument construction, we analyzed the nonexamples coded as argument critique within both Paul’s and PSTs’ own transcripts, 126 nonexamples in total (Table 9). We identified nine categories that described the aim of the question and that each captured more than one instance. Three of these categories were the same as those for nonexamples of argument construction: questions or prompts that asked about observations not offered in support of a claim (30% of nonexamples of argument critique and 28% of nonexamples of argument construction), checked for consensus (26% and 49%, respectively), and asked about science content (4% and 28%, respectively).

Additionally, PSTs’ coding of nonexamples of argument critique included questions and prompts about argument construction, including eliciting claims, evidence, and reasoning (46% of nonexamples), asking students to convince one another of their arguments (17%), and asking students whether they had revised their ideas (12%). Also, statements such as “Would you like to add onto that?” accounted for over one third (39%) of nonexamples. Importantly, in our researcher coding process, we checked whether such “add onto” statements followed prior explicit requests by the teacher to engage in critique or followed students engaging in critique; these nonexamples did not.

Table 9

Nonexamples of Argument Critique Coded by PSTs in Paul’s and Their Own Transcripts

| Category | Percentage of Nonexamples (n = 126) | Nonexamples of Argument Critique |
|---|---|---|
| Asking students about claims, evidence, or reasoning | 46% | Does anybody have anything they'd like to add to Carlos's claim or something different that they observed? [Haley] |
| Asking students to add on to what others said (but not clearly about critiquing an argument) | 39% | Emily, do you have anything to add to what Jayla is saying? [Kayla] |
| Inquiring about observations or what was noticed in the investigation (apart from being in support of a claim) | 30% | A white gloopy solid thing? Okay, and anybody else have anything to add to that? [Stephanie] |
| Asking or checking for consensus | 26% | Okay … have we decided that it's a new substance? What consensus are we making? [Haley] |
| Asking students to try to convince another student or group [a] | 17% | Do one of you want to explain to Jayla and Mina … why it did make something new? [Chloe] |
| Asking students if their ideas have changed (argument revision) [a] | 12% | You're changing your mind? How about you Will? [Paul] |
| Asking questions about related science content (e.g., states of matter) | 4% | So we have a liquid, a solid, and the bubbles. Emily, what did you say before? What were bubbles proof of? [Alyssa] |
| Subtle questions about what students are thinking | 3% | What are you thinking, Jayla? [Hannah] |
| Asking students to restate another student’s idea | 2% | Awesome. Can anyone repeat what your classmate said in different words? [Savannah] |

Note. Some nonexamples were coded in more than one category. [a] These are aspects of argument construction.

PSTs’ Perceptions About Transcript Coding

In their Flipgrid videos, the PSTs described the utility of coding others’ transcripts and their own transcripts. As described in the following sections, PSTs mentioned broader benefits of transcript coding; some also mentioned specific benefits, including how they benefitted from reflecting on their own or borrowing ideas from others’ questions and prompts to encourage argument construction and critique. Over half of the PSTs specifically mentioned that transcript coding would help their future teaching practice.

Perceptions About Coding Others’ Transcripts

The PSTs in this study had multiple opportunities to code others’ transcripts, including Paul’s transcript for the Changing Matter discussion, another peer’s transcript for the Changing Matter discussion (not included in this analysis), and one other transcript used to prepare for the second of three argumentation discussions during the semester. Most participants (79%) said that when coding others’ transcripts, they noticed various teaching approaches, techniques, or strategies they had not previously considered. Emily’s Flipgrid response is a good example:

So from my peers I was able to learn about different methods and ways to facilitate the discussion that worked well for them and addressing the five features. Coding others’ transcripts … [gave] me the opportunity to evaluate which methods they applied that were successful for them and how I could apply it into my own discussion.

Note that the “five features” of high-quality argumentation discussions that Emily mentioned include encouraging argument construction and critique, student-to-student interactions, and other aspects of argumentation discussions (Mikeska et al., 2019).

Participants also noticed specific aspects of discussions within others’ transcripts. The most frequently mentioned strategy was encouraging direct student-to-student talk (58%). The participants also mentioned their noticing of when the other teacher prompted students to engage in argument construction (16%), argument critique (42%), and building consensus (26%).

Grace learned some specific ideas from Paul’s transcript that she aimed to apply in her own Changing Matter discussion:

While I was coding Paul’s transcript, actually, I noticed … supporting students in speaking directly to one another … encouraging the students to engage in one another and one another’s ideas, and … supporting students in evaluating and critiquing one another’s ideas – I thought he did an incredible job at that.… The students were very respectful when they were evaluating and critiquing one another. He had the students engaged with one another’s ideas, and they were able to speak directly to each other. After reading his transcript and coding it, I used it as an example for facilitating my [Changing Matter] discussion, and I thought it was very beneficial and it was very helpful, and I thought that with that I was successful in facilitating [the Changing Matter discussion] myself.

Elizabeth mentioned that she appreciated Paul’s attention to argument critique in his discussion framing: “I really liked the way that he … explained that critiquing one another’s claims isn’t necessarily critiquing that person. It’s just critiquing their evidence and their ideas.” After facilitating the Changing Matter discussion, Haley appreciated coding her peer’s Changing Matter transcript. Haley explained,

Something I learned … was asking the students to explain their thoughts to one another directly. The example from my peer’s transcript that I’m referring to is when Will, Emily and Carlos all believed that a new substance was made, and Jayla and Mina did not. So my peer asked them to explain why, with detail, they thought something new is created.

Some participants (21%) said that the transcript served as a model for facilitating an argumentation discussion, while others (26%) said that coding others’ transcripts provided a point of comparison for their own facilitation of discussions. Most participants (68%) suggested that coding others’ transcripts helped them prepare for future discussions.

Three aspects of others’ discussions frequently mentioned in Flipgrid videos were how the other teachers used the five features of argumentation discussions (37%), organized the discussion (47%), and used specific language or phrases (21%). The latter two aspects (organization and language/phrasing), along with six other specific aspects of discussions, were noticed only in others’ transcripts, not in participants’ own transcripts. Table 10 summarizes findings about participants’ perceptions of coding others’ transcripts and their own transcripts.

Table 10

Preservice Teacher Specific Topics Discussed in Flipgrid Videos (n = 19)

| PST noticed and discussed when the teacher was… | PSTs Who Mentioned Each Aspect with Respect to Others’ Transcripts | PSTs Who Mentioned Each Aspect with Respect to Their Own Transcripts |
|---|---|---|
| Evidence of noticing within both own and others’ transcripts | | |
| Encouraging direct student-to-student talk | 58% | 74% |
| Engaging students in argument construction | 16% | 26% |
| Engaging students in argument critique | 42% | 21% |
| Framing the discussion and setting clear expectations for students | 11% | 21% |
| Eliciting ideas from students | 5% | 21% |
| Posing good questions | - | 16% |
| Encouraging students to reach consensus | 26% | 11% |
| Using clear language to communicate | - | - |
| Demonstrating features of argumentation [a] | 37% | - |
| Evidence of noticing within own transcripts | | |
| Using wait time | - | 11% |
| Supporting student, not teacher, evaluation of ideas | - | 11% |
| Making a specific (teacher) contribution to the discussion | - | 5% |
| Evidence of noticing within others’ transcripts | | |
| Organizing the discussion | 47% | - |
| Using talk moves in general or using specific talk moves (e.g., restating) | 26% | - |
| Helping students to consider how their ideas changed | 21% | - |
| Using formative assessment strategies | 21% | - |
| Using specific language/phrasing | 21% | - |
| Documenting student ideas | 11% | - |
| Encouraging students to engage with one another’s ideas | 11% | - |
| Correcting content errors | 5% | - |

[a] This code captures references to features of argumentation in general (e.g., Emily shared that coding others’ transcripts helped her learn to “address the five features”).

Perceptions About Coding Their Own Transcripts

The 19 PSTs in the class had two opportunities to code their own transcripts — once after the second argumentation discussion (not analyzed in this study) and once after the Changing Matter discussion. Within their Flipgrid videos, participants identified four primary reasons that coding their own transcripts was a valuable activity, which were to identify their improvement areas or weaknesses (100% of participants); strengths (79%); aspects of their teaching that they may not have noticed without the coding process (26%); and their habits or teaching styles (11%).

Hannah said, “You can really learn what your strengths … [and] weaknesses are. You can learn over time areas of growth, areas that still need improvement and … your [teaching] style.” Kayla said coding helped her to see “things that I may have not known or seen if I didn’t code… or may have not noticed while in the actual discussion.” Participants also mentioned that coding helped them see how they had grown over the semester in their ability to facilitate argumentation discussions (58%) and improved their confidence in teaching (16%). Most promising, more than half of participants (53%) said that coding their transcripts would prepare them to facilitate future discussions in either a real or simulated classroom.

In their responses, participants also mentioned more specific aspects of facilitating the discussion that they came to notice through coding their own transcripts. The most frequent specific response mentioned (74%) was about examples of or the need to encourage direct student-to-student talk during an argumentation discussion. Participants also mentioned their noticing through transcript coding of when they were encouraging argument construction (26%), argument critique (16%), and consensus building (11%). Referencing both student-to-student interaction and argument construction, Isabella said,

Through coding my own transcripts over the semester, I was able to learn how to improve my use of the five features of high-quality discussion for argumentation. As an emerging teacher, this helped me improve how to encourage student-to-student interactions, and have students make claims and back up their claims with reasoning and evidence.

Emma also reflected on how coding her own transcripts helped her to consider encouraging students to evaluate one another’s ideas rather than her doing the evaluating:

So this [transcript coding] is a worthwhile task for emerging teachers because it helps us to see our strengths and our weaknesses and how we can improve on our weaknesses for our next discussion. So, for example, when I coded my transcript for [the simulated discussion prior to Changing Matter] … I recognized an instance where I evaluated a student idea. Emily said the particles were in everything, and I said, yes, particles are in everything, and this was me evaluating her ideas. So it helped me to improve, because I knew if a similar situation came up, I could ask another student to share what they thought about Emily’s idea instead. This helped me to address 5B [argument critique] in my next discussion [Changing Matter] because I came prepared with more questions to prompt students to evaluate each other’s ideas.

Note that “5B” was the notation we used to indicate argument critique.

Discussion and Implications

Not only facilitating but also noticing classroom interactions that engage students in argumentation is challenging for novice teachers. Findings from our argumentation-focused study suggest that most participants were able to notice and code most of the explicit instances of the teacher (Paul or themselves) encouraging argument construction and critique; however, PSTs’ coding was incomplete, and they missed some explicit instances. Our findings also suggest a slight tendency for PSTs to miss instances of argument construction that elicited students’ reasoning more often than instances that elicited evidence or a claim.

Although somewhat speculative given that we did not conduct significance tests, we noticed that coding accuracy was higher for argument critique than for argument construction. Perhaps pressing for evidence-based reasoning is more nuanced and challenging to notice and identify within specific teaching moves as compared to moves to encourage argument critique.

Our analysis also revealed ways in which PSTs overextended what they included as examples of argument construction and argument critique. Having too broad a conception of one of these constructs erodes the connections among the three fundamental pieces of van Es and Sherin’s (2002) noticing framework. The classroom interaction (in this case, the teacher prompt or question used to encourage students to construct or critique arguments) is then only weakly connected to the teaching principle of what it means to elicit argument construction or critique.

Further, a weaker connection between classroom interactions and specific principles makes it less likely that future instruction will apply the principle productively. For example, students are unlikely to engage in argument critique if the teacher’s prompt for this strategy is simply to ask whether other students “have anything to add on.” A more effective prompt that we want teachers to associate with argument critique is asking students to state whether they agree or disagree with another group’s idea and to share the reason for their agreement or disagreement. Associating that prompt with the construct of encouraging argument critique can serve as a useful scaffold for instruction that aims to encourage scientific argumentation.

The Flipgrid video findings suggested that participants valued both coding their own transcripts and coding others’ transcripts; neither seemed to be valued above the other. Coding their own transcripts enabled them to notice their strengths and areas for improvement. It also made apparent how their prompts and questions affected student responses, including some effects they had not noticed before coding. For example, as mentioned in the findings, one PST noticed that she had evaluated a student’s response herself and reflected that in the future she would instead ask another student to do the evaluating.

Coding others’ transcripts gave the participants good ideas, different approaches, and new possibilities. Similar to other researchers (e.g., Gaudin & Chaliès, 2015), we found utility in having PSTs code both their own and others’ transcripts. This approach was especially helpful in our context because the PSTs and the teachers whose transcripts they coded had all facilitated the same Changing Matter discussion. Further, participants noticed some aspects of teaching only in their own transcripts and mentioned other aspects only in others’ transcripts.

Importantly, over half of the PSTs mentioned in their Flipgrid videos that coding their own and others’ transcripts was beneficial for their future teaching in simulated or real classrooms. Some provided specific examples of how they used some of Paul’s approaches when facilitating their own Changing Matter discussion or how their coding led them to approach a subsequent simulated classroom discussion differently.

Situating these findings within van Es and Sherin’s (2002) three-part noticing framework makes the potential value of this pedagogical approach apparent. PSTs prepared for their Changing Matter discussions, in part, by identifying which of Paul’s teaching moves encouraged argument construction and critique, thereby connecting classroom interactions with principles and constructs. As another part of their preparation, PSTs recorded questions and prompts to encourage argument construction and critique, many of them drawn from Paul’s discussion, in a graphic organizer.

This finding relates to the third part of van Es and Sherin’s (2002) framework, in which teachers interpret interactions connected to classroom principles to inform future instructional decisions. These instructional decisions were realized when the PSTs facilitated, in the simulated classroom, the same Changing Matter discussion that Paul had facilitated. Certainly, not all of the preparation for this discussion came from coding Paul’s transcript; however, some did. Further, the cycle began again when PSTs coded their own Changing Matter transcripts, about 1 week before they facilitated their final argumentation discussion in the course, which was part of the larger research project mentioned earlier.

This cycle provided a rich window into PSTs’ strengths and potential areas for improvement to target in future instruction. In particular, the findings suggest that future instruction would likely benefit from a more targeted focus on contrasting accurate examples with nonexamples and on emphasizing the boundaries of teaching moves that encourage argument construction and critique.

This study represented a deep look into student work in the first author’s class, an analysis that is not feasible during a semester in which transcript coding assignments must be turned around within days to help PSTs prepare for and debrief from their simulated classroom discussions. As an action research study, it provides recommendations for my own future instruction and potentially for others who are helping PSTs learn the ambitious teaching practice of facilitating argumentation discussions. One recommendation is to make transcript coding a more collaborative process so that PSTs can grapple with coding decisions together. Similarly, pairs of PSTs could coanalyze one another’s transcripts to encourage more informed coding.

Another recommendation is to help PSTs more precisely define what is and is not captured by a construct, such as encouraging argument construction or encouraging argument critique. This could involve presenting a mixed list of examples and nonexamples and having PSTs classify each item as an example or a nonexample of a particular construct.

Finally, to encourage examination of teacher prompts and questions in context, it is important to share these examples and nonexamples not simply as isolated teacher prompts or questions but as transcript excerpts that include both the teacher prompts and the student responses and, when relevant, the student contributions that preceded the teacher question or prompt being analyzed. This idea arises from our wondering whether PSTs considered this context as they coded and whether not considering it may have led them to code nonexamples as examples.

One limitation of this work is that our transcript coding focused more on teacher prompts than on student responses, a choice made to reduce the complexity of the coding task. Future PST coding activities could ask PSTs to find examples not only of teachers encouraging argument construction or critique but also of students engaging in argument construction or critique. Another limitation is that the Flipgrid video responses were shared with me, their professor, and thus may overrepresent positive aspects of transcript coding relative to any negative aspects or confusion PSTs experienced during coding.

Consistent with prior work (Kucan, 2007, 2009; Mitchell & Marin, 2015; Tripp & Rich, 2012), this study demonstrates that transcript and video coding and analysis have utility for supporting teacher learning. Obtaining access to videos and transcripts can be difficult, whether they come from simulated or real classrooms. We currently provide a link to a free-access repository of science and mathematics argumentation discussion videos and transcripts that teacher educators can use during instruction (Mikeska et al., 2021a, 2021b, 2021c). We hope that these and other videos and transcripts will provide additional opportunities for teachers to practice and refine their noticing skills to support student learning.

Finally, the technology that undergirds this study, the Mursion simulated classroom environment, enabled PSTs to analyze another teacher’s transcript before facilitating an argumentation discussion, facilitate that discussion with the same learning goal and the same student avatars in the same simulated classroom environment, and then analyze their own and a peer’s transcript for that discussion. In other words, although transcript coding can be used to analyze discussions that occur in real classrooms, the simulated classroom environment provided a unique opportunity to analyze others’ and one’s own discussion transcripts within the same context. This exciting technology provides multiple learning opportunities for teacher education and will likely continue to shape our field (Mikeska & Howell, 2020).

Author Note

This research was funded by the National Science Foundation (Grant No. 1621344). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

References

Abell, S. K., Bryan, L. A., & Anderson, M. A. (1998). Investigating preservice elementary science teacher reflective thinking using integrated media. Science Education, 82(4), 491. https://doi.org/10.1002/(SICI)1098-237X(199807)82:4<491::AID-SCE5>3.0.CO;2-6

Abell, S. K., & Cennamo, K. S. (2003). Videocases in elementary science teacher preparation. In J. Brophy (Ed.), Using video in teacher education (Advances in Research on Teaching, Vol. 10, pp. 103-129). Emerald Group Publishing Limited. https://doi.org/10.1016/S1479-3687(03)10005-3

Ball, D. L. (2011). Foreword. In M. G. Sherin, V. R. Jacobs, & R. Philipp (Eds.), Mathematics teacher noticing: Seeing through teachers’ eyes (pp. xx-xxii). Routledge.

Banilower, E. R., Smith, P. S., Malzahn, K. A., Plumley, C. L., Gordon, E. M., & Hayes, M. L. (2018). Report of the 2018 NSSME+. Horizon Research, Inc.

Barnhart, T., & van Es, E. (2015). Studying teacher noticing: Examining the relationship among pre-service science teachers’ ability to attend, analyze and respond to student thinking. Teaching and Teacher Education, 45, 83-93. https://doi.org/10.1016/j.tate.2014.09.005

Benedict-Chambers, A., & Aram, R. (2017). Tools for teacher noticing: Helping preservice teachers notice and analyze student thinking and scientific practice use. Journal of Science Teacher Education, 28(3), 294-318. https://doi.org/10.1080/1046560X.2017.1302730

Berland, L. K. (2011). Explaining variations in how classroom communities adapt the practice of scientific argumentation. Journal of the Learning Sciences, 20, 625–664. http://doi.org/10.1080/10508406.2011.591718

Berland, L. K., & Hammer, D. (2012). Framing for scientific argumentation. Journal of Research in Science Teaching, 49(1), 68-94. https://doi.org/10.1002/tea.20446

Bravo-Torija, B., & Jimenez-Aleixandre, M. P. (2018). Developing an initial learning progression for the use of evidence in decision-making contexts. International Journal of Science and Mathematics Education, 16(4), 619-638. http://doi.org/10.1007/s10763-017-9803-9

Chan, K. K. H., Xu, L., Cooper, R., Berry, A., & van Driel, J. H. (2020). Teacher noticing in science education: Do you see what I see? Studies in Science Education, 1-44. https://doi.org/10.1080/03057267.2020.1755803

Chao, T., Murray, E., & Star, J. R. (2016). Helping mathematics teachers develop noticing skills: Utilizing smartphone technology for one-on-one teacher/student interviews. Contemporary Issues in Technology and Teacher Education (CITE Journal), 16(1), 22–37. https://citejournal.org/volume-16/issue-1-16/mathematics/helping-mathematics-teachers-develop-noticing-skills-utilizing-smartphone-technology-for-one-on-one-teacherstudent-interviews

Dalvi, T., & Wendell, K. (2017). Using student video cases to assess pre-service elementary teachers’ engineering teaching responsiveness. Research in Science Education, 47(5), 1101-1125. https://doi.org/10.1007/s11165-016-9547-5

Dieker, L. A., Straub, C. L., Hughes, C. E., Hynes, M. C., & Hardin, S. (2014). Learning from virtual students. Educational Leadership, 71(8), 54-58.

Gaudin, C., & Chaliès, S. (2015). Video viewing in teacher education and professional development: A literature review. Educational Research Review, 16, 41-67. https://doi.org/10.1016/j.edurev.2015.06.001

Gelfuso, A. (2016). A framework for facilitating video-mediated reflection: Supporting preservice teachers as they create ‘warranted assertabilities’ about literacy teaching and learning. Teaching and Teacher Education, 58, 68-79. https://doi.org/10.1016/j.tate.2016.04.003

Gonzalez, G., & Vargas, G. E. (2020). Teacher noticing and reasoning about student thinking in classrooms as a result of participating in a combined professional development intervention. Mathematics Teacher Education and Development, 22(1), 5-32.

Goodson, B., Caswell, L., Dynarski, M., Price, C., Litwok, D., Crowe, E., Meyer, R., & Rice, A. (2019). Teacher preparation experiences and early teaching effectiveness: Executive summary (NCEE 2019-4010). National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, U.S. Department of Education.

Hawkins, S., & Park Rogers, M. (2016). Tools for reflection: Video-based reflection within a preservice community of practice. Journal of Science Teacher Education, 27(4), 415. https://doi.org/10.1007/s10972-016-9468-1

Jacobs, V. R., Lamb, L. L. C., & Philipp, R. A. (2010). Professional noticing of children’s mathematical thinking. Journal for Research in Mathematics Education, 41(2), 169-202. http://www.jstor.org/stable/20720130

Kitchen, J., & Stevens, D. (2008). Action research in teacher education: Two teacher-educators practice action research as they introduce action research to preservice teachers. Action Research, 6(1), 7-28. https://doi.org/10.1177/1476750307083716

Kucan, L. (2007). Insights from teachers who analyzed transcripts of their own classroom discussions. The Reading Teacher, 61(3), 228. https://doi.org/10.1598/RT.61.3.3

Kucan, L. (2009). Engaging teachers in investigating their teaching as a linguistic enterprise: The case of comprehension instruction in the context of discussion. Reading Psychology, 30(1), 51-87. https://doi.org/10.1080/02702710802274770

Lee, C., Lee, T., Dickerson, D., Castles, R., & Vos, P. (2021). Comparison of peer-to-peer and virtual simulation rehearsals in eliciting student thinking through number talks. Contemporary Issues in Technology & Teacher Education, 21(2), 297-324. https://citejournal.org/volume-21/issue-2-21/mathematics/comparison-of-peer-to-peer-and-virtual-simulation-rehearsals-in-eliciting-student-thinking-through-number-talks

Luna, M. J. (2018). What does it mean to notice my students’ ideas in science today?: An investigation of elementary teachers’ practice of noticing their students’ thinking in science. Cognition and Instruction, 36(4), 297-329. http://doi.org/10.1080/07370008.2018.1496919

Luna, M. J., Selmer, S. J., & Rye, J. A. (2018). Teachers’ noticing of students’ thinking in science through classroom artifacts: In what ways are science and engineering practices evident? Journal of Science Teacher Education, 29(2), 148-172. https://doi.org/10.1080/1046560X.2018.1427418

Luna, M. J., & Sherin, M. G. (2017). Using a video club design to promote teacher attention to students’ ideas in science. Teaching and Teacher Education, 66, 282-294. https://doi.org/10.1016/j.tate.2017.04.019

Marco-Bujosa, L., Gonzalez-Howard, M., McNeill, K., & Loper, S. (2017). Designing and using multimedia modules for teacher educators: Supporting teacher learning of scientific argumentation. Innovations in Science Teacher Education, 2(4). https://innovations.theaste.org/designing-and-using-multimedia-modules-for-teacher-educators-supporting-teacher-learning-of-scientific-argumentation/